1993–

90’s saw a buzz in data mining, the days when tech publishers started part series on mining techniques and approaches. Courses were introduced in colleges and multiple researches produced to ride on this wave of data mining.

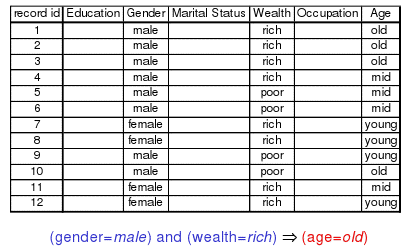

Data mining essentially meant employing clustering or machine learning techniques to draw out conclusions based on data samples that were stored in traditional databases. These conclusions were then used to build rules and associations, but then there was only so much done on that front. More so because the usages were limited within the medical field or research labs to derive insights from data patterns.

2003–

Mining data soon offspring-ed into Business Intelligence and topics of interest converted to Data Mining for Business Intelligence.

Simply put, data mining was not just used for discovering patterns in data but for real-world analysis of data generated within enterprises.

2009–

Let’s make an interesting observation now. In the first paragraph, replace “90’s” with the current decade and “data mining” with “big data” and see how inferences remain unchanged. That’s technology trends for you. During this time when more people had a virtual presence, data volumes peaked up and businesses tried their luck at taming the big elephant.

The Google fame technique of “crawling” was controlling the data acquisition scenario on the web. Even smaller players realized the need of the hour and started getting into this niche game. They gathered data for bigger enterprises who desired to aggregate data from scattered sources on the web. Each such source was individually crawled and extracted HTML’s were delivered.

2010–

While crawlers were being built in the enterprise space, tech enthusiasts realized that HTML’s were laborious to handle and clumsy to interpret. There was an emerging need to convert the unstructured HTML into something more structured and suited to an enterprise’s internal processes. A new trend came in where HTML’s were converted to XML’s/CSV’s/PDF’s that could be readily used by analyst’s for its ultimate intended purpose. This dramatically reduced time, efforts and hence costs required to analyze data. This method, quite a sibling of data mining, came to be known as data extraction/data structuring/data cleansing.

2013; current state-

Data volumes are at their all-time high and big elephant seems to be around for a while with most credit to user-generated data (mobile has a big hand). By reducing costs of acquiring data from web sources, technologists are hopping onto new avenues post-data structuring like named entity recognition, near duplicate detection and data indexing solutions. These techniques do not require to travel farther than your boundaries and yet produce immense value to your customer. There are efforts in the mainstream too to add further value in the competitive market by employing low-latency techniques for web crawl and more generic means of data extraction.

Data mining in essence still exists, but the sources involved and approaches taken have evolved unimaginably. Its interesting to note that with increasing internet penetration, technologies are not just evolving, but evolving faster. So as we get more overwhelmed with data, don’t be shocked to see new technologies being invented and continue to feel great about being a part of a historical data era.