In today’s world, data is everywhere, and businesses rely heavily on it for their growth and success. However, collecting and analyzing data can be a daunting task, especially when dealing with large amounts of data. This is where data crawling services, data scraping services, and data extraction come in.

What is Data Crawling

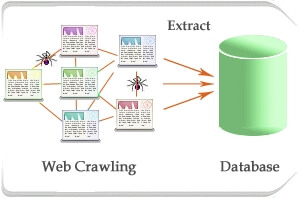

Data crawling refers to the process of collecting data from non-web sources, such as internal databases, legacy systems, and other data repositories. It involves using specialized software tools or programming languages to gather data from multiple sources and build a comprehensive database that can be used for analysis and decision-making. Data crawling services help businesses automate data collection.

Data crawling services are often used in industries such as marketing, finance, and healthcare, where large amounts of data need to be collected and analyzed quickly and efficiently. By automating the data collection process, businesses can save time and resources while gaining insights that can help them make better decisions.

Web crawling is a specific type of data crawling that involves automatically extracting data from web pages. Web crawlers are automated software programs that browse the internet and systematically collect data from web pages. The process typically involves following hyperlinks from one page to another, and indexing the content of each page for later use. Web crawling is used for a variety of purposes, such as search engine indexing, website monitoring, and data mining. For example, search engines use web crawlers to index web pages and build their search results, while companies may use web crawling to monitor competitor websites, track prices, or gather customer feedback.

What is Data Scraping

Data scraping, also known as web scraping, is the process of extracting data from websites. This technique involves the use of software or scripts to automatically access the web and collect information from web pages. The data collected can then be analyzed, stored, and used for various purposes. Data scraping is commonly used for:

Data Collection and Analysis

Organizations and individuals scrape data from the web to gather information on various topics, such as market trends, competitive analysis, customer reviews, and social media sentiment. This data is then analyzed to inform business decisions, research, and strategy development.

Content Aggregation

Data scraping is used to aggregate content from multiple sources for websites that compile news, articles, or other information from across the web. This allows users to access a centralized source of information on specific topics.

E-commerce and Price Comparison

Retailers and consumers use data scraping to monitor e-commerce websites for price changes, product availability, and new products. This information is used for competitive pricing strategies, market analysis, or finding the best deals.

Lead Generation

Companies scrape contact information from various websites to compile lists of potential leads for sales and marketing efforts.

Real Estate and Job Listings

Data scraping is used to collect listings from real estate or job posting sites, providing aggregated platforms where users can search for properties or job opportunities.

Research and Development

Researchers in academia and industry use web scraping to collect data sets for analysis in projects ranging from natural language processing to market research.

While data scraping can be a powerful tool for gathering and utilizing web data, it’s important to consider the ethical and legal aspects. Websites often have terms of service that restrict automated data collection, and laws like the General Data Protection Regulation (GDPR) in Europe set strict guidelines on how personal data can be collected and used. As such, it’s crucial to respect website policies and legal requirements when performing data scraping activities.

Web Scraping vs Web Crawling – Key Differences

1. Difference between Data Scraping & Data Crawling

Scraping data does not necessarily involve the web. Data scraping tools that help in data scraping could refer to extracting information from a local machine, a database. Even if it is from the internet, a mere “Save as” link on the page is also a subset of the data scraping universe.

Data crawling, on the other hand, differs immensely in scale as well as in range. Firstly, crawling = web crawling which means on the web, we can only “crawl” data. Programs that perform this incredible job are called crawl agents or bots or spiders (please leave the other spider in spiderman’s world).

Some web spiders are algorithmically designed to reach the maximum depth of a page and crawl them iteratively (did we ever say crawl?). While both seem different, web scraping vs web crawling is mostly the same.

2. Data Deduplication in Web Data Crawling

The web is an open world and the quintessential practicing platform of our right to freedom. Thus a lot of content gets created and then duplicated. For instance, the same blog might be posted on different pages and our spiders don’t understand that.

Hence, data de-duplication (affectionately dedup) is an integral part of web data crawling service. This is done to achieve two things — to keep our clients happy by not flooding their machines with the same data more than once; and saving our servers some space. However, deduplication is not necessarily a part of web data scraping.

3. Coordinating Successive Crawls

One of the most challenging things in the web crawling space is to deal with the coordination of successive crawls. Our spiders have to be polite with the servers so that they do not piss them off when hit. This creates an interesting situation to handle. Over some time, our spiders have to get more intelligent (and not crazy!).

They get to develop learning to know when and how much to hit a server, and how to crawl data feeds on its web pages while complying with its politeness policies. While both seem different, web scraping vs web crawling is mostly the same.

4. Conflict-Free Crawling

Finally, different crawl agents are used to crawling different websites, and hence you need to ensure they don’t conflict with each other in the process. This situation never arises when you intend to just crawl data.

| Data Scraping | Data Crawling |

|---|---|

| Involves extracting data from various sources including web | Refers to download pages from the web |

| Can be done at any scale | Mostly done at a large scale |

| Deduplication is not necessarily a part | Deduplication is an essential part |

| Needs crawl agent and parser | Needs only crawl agent |

Data Scraping vs Data Crawling

Data crawling vs data scraping, while often used interchangeably, are not the same thing. Similarly, data scraping vs data mining have many differences.

Data scraping refers to the process of extracting data from websites or other sources using specialized software tools. This process involves identifying and retrieving specific data points, such as product prices, product information or customer reviews, from web pages or other sources. Scraping tools use various techniques, such as web scraping, screen scraping, or API scraping, to extract data from different types of sources.

Data scraping is often used for market research, lead generation, and content aggregation. Businesses in various industries such as travel, finance, hotels, ecommerce etc. can use web scraping tools to extract information such as:

- Product information: Price, description, features, and reviews.

- Customer information: Demographics, preferences, and sentiments.

- Competitor information: Prices, product features, and market share.

Data crawling, on the other hand, involves the automated process of systematically browsing the web or other sources to discover and index content. This process is typically performed by software tools called crawlers or spiders. Crawlers follow links and visit web pages, collecting information about the content, structure, and relationships between pages. The purpose of crawling is often to create an index or catalog of data, which can then be searched or analyzed.

Crawlers are often used by search engines to index websites and their contents, and businesses can also use them for various purposes, such as:

- Market research: Collecting data on market trends, competition, and consumer behavior.

- Content aggregation: Gathering data from different sources to create a comprehensive database.

- Web scraping: Using crawlers to extract data from specific web pages.

Data crawling is a broader process of systematically exploring and indexing data sources, while data scraping is a more specific process of extracting targeted data from those sources. Both techniques can be used together to extract data from websites, databases, or other sources.

What is the Difference Between Data Scraping and Data Extraction

Data scraping and data extraction are two related concepts that involve collecting and organizing data from various sources. Although these terms are often used interchangeably, there are some differences between them.

Data scraping generally refers to the process of automatically extracting data from websites or other sources using specialized software tools. This process may involve identifying specific data points, such as product information, customer reviews, pricing data, and more, and extracting them from web pages or other digital sources using techniques such as web scraping, screen scraping, or API scraping. Data scraping is often used to collect large volumes of data quickly and efficiently, and it may be used to scrape information such as:

- Product information: Price, description, features, and reviews.

- Customer information: Demographics, preferences, and behavior.

- Competitor information: Prices, product features, and market share.

Data extraction, on the other hand, is a broader term that can refer to the process of collecting data from any source, including databases, files, or documents. Data extraction involves identifying the relevant data, cleaning and organizing it, and then exporting it to a format that can be used for analysis or further processing. Data extraction may involve manual or automated processes, and it is often used by businesses to:

- Analyze data from different sources: Combining data from multiple sources into a single database for analysis and decision-making.

- Migration of data: Extracting data from an old system to move it to a new one.

- Automated data processing: Extracting data from emails, forms, and other sources to automatically process it into the business’s workflows.

Conclusion

Data crawling, scraping, and extraction are critical tools for businesses to gather, analyze, and utilize data effectively. Each method has its strengths and limitations, and the best approach depends on the business’s specific needs and objectives.

Understanding the differences between data crawling, scraping, and extraction can help businesses make informed decisions about the best approach to collect and analyze data. By utilizing these techniques, businesses can gain insights into their operations, optimize their strategies, and stay ahead of their competitors in today’s data-driven marketplace. Web scraping and data crawling companies like PromptCloud can help you with large scale data extraction. Get in touch with us at sales@promptcloud.com to explore web data crawling solutions.

Here are some related articles which you will find helpful:

Frequently Asked Questions (FAQs)

How do ethical considerations differ between data scraping and data crawling?

Ethical considerations between data scraping and data crawling involve understanding the source of the data, the purpose of its use, and obtaining necessary permissions or conforming to legal requirements. Ethical data scraping often requires explicit consent from the website owner or adherence to the site’s terms of service, especially when personal or proprietary information is involved. Data crawling, being more about indexing public information, still necessitates respect for robots.txt files and privacy policies to avoid unauthorized access to restricted areas.

What are the specific challenges in scaling data crawling and scraping operations?

Scaling data crawling and scraping operations presents unique challenges, including managing bandwidth to avoid overloading servers, handling IP bans and rate limiting through proxy rotation, and dealing with the dynamic nature of web content that requires constant updates to scraping scripts. Efficient scaling also involves sophisticated data storage solutions to handle the large volumes of data collected and implementing robust error-handing mechanisms to manage failed requests or parse errors.

Can data scraping and crawling techniques be integrated into a single workflow, and if so, how?

Integrating data scraping and crawling into a single workflow is possible and can be highly effective for comprehensive data collection strategies. This integration involves using crawling to systematically browse through web pages to identify relevant information and then applying scraping techniques to extract specific data from these pages. Such a workflow can benefit from automated processes that determine when to scrape data based on crawling results, using a combination of both methods to navigate through and extract data from complex website structures efficiently. This approach optimizes data collection efforts by leveraging the strengths of both techniques for targeted and extensive data gathering.

What is a crawl data?

Crawl data refers to the information that has been collected by systematically browsing the internet and extracting information from websites. This process is done using automated programs known as web crawlers, spiders, or bots. These bots navigate the web, following links from page to page, and gather data from websites according to specified criteria. The collected data can then be used for various purposes, such as web indexing by search engines, data analysis for market research, content aggregation for news or research, and more. In the context of PromptCloud or similar data-as-a-service providers, crawl data typically signifies the raw, structured, or semi-structured data extracted from targeted web sources for clients, tailored to their specific data needs and use cases.

What is the difference between crawling and scraping data?

Crawling and scraping are related but distinct processes involved in gathering data from the web. Here’s how they differ:

Web Crawling:

- Purpose: The primary goal of web crawling is to index the content of websites across the internet. Search engines like Google use crawlers (also known as spiders or bots) to discover and index web pages.

- Process: Crawlers systematically browse the web by following links from one page to another. They are mainly concerned with discovering and mapping out the structure of the web.

- Scope: Web crawling covers a broad range of websites and is not typically focused on extracting specific data from these sites. It’s more about understanding what pages exist and how they are interconnected.

Web Scraping:

- Purpose: Web scraping is focused on extracting specific data from web pages. It is used to gather particular information from websites, such as product prices, stock levels, articles, and more.

- Process: Scraping involves fetching web pages and then extracting meaningful information from them. This process often requires parsing the HTML of the page to retrieve the data you’re interested in.

- Scope: Web scraping is targeted and selective. It’s about getting detailed information from specific pages rather than understanding the web’s structure.

In summary, crawling is about finding and indexing web pages, while scraping is about extracting specific data from those pages. Crawling provides the roadmap of what’s on the web, which can be used to perform targeted scraping operations on specific sites to gather detailed information.

Is it legal to crawl data?

The legality of crawling data from the web can vary significantly based on several factors, including the methods used for crawling, the nature of the data being crawled, the source website’s terms of service, and the jurisdiction under which the crawling activity occurs. Here are some general considerations:

Compliance with Terms of Service

- Many websites outline conditions for using their services, including whether or not automated crawling is permitted, in their Terms of Service (ToS) or robots.txt file. Adhering to these guidelines is crucial to ensure legal compliance.

Purpose of Data Use

- The intended use of the crawled data can also affect the legality of crawling. Using data for personal, non-commercial purposes is generally more acceptable than using it for commercial gain, especially if the latter competes with the source’s business.

Copyright Issues

- Copyright laws protect original content published on the web. Crawling and reproducing copyrighted material without permission may infringe on these rights, especially if the content is republished or used commercially.

Privacy Considerations

- Data protection and privacy laws, such as the General Data Protection Regulation (GDPR) in Europe, impose strict rules on the collection and use of personal data. Crawling data that includes personal information without consent can lead to legal issues.

Bot Behavior and Server Load

- Ethical crawling practices, such as respecting a site’s robots.txt file, avoiding excessive server load, and identifying the crawler through the User-Agent string, are important to maintain the integrity of web services and avoid potential legal action.

Jurisdiction

- Laws regarding data crawling can vary by country or region. It’s essential to consider the legal framework of both the source website’s location and the location from which you are crawling.

Given these considerations, it’s advisable to consult with legal professionals before engaging in large-scale or commercial data crawling activities to ensure compliance with relevant laws and regulations.

How does crawl work?

The process of web crawling involves several steps, each designed to systematically discover and index the content of the web. Here’s an overview of how crawling works:

1. Starting with Seeds

- The crawl begins with a list of initial URLs known as “seed” URLs. These seeds serve as the starting points for the crawl. They are often chosen because they are known to be rich in links and content, such as popular website homepages.

2. Fetching Web Pages

- The crawler, also known as a spider or bot, visits the seed URLs and requests the web pages from the server, just like a browser does when a user visits a site. The server responds by sending the HTML content of the page to the crawler.

3. Parsing Content

- Once a page is fetched, the crawler parses the HTML content to extract links to other pages. This parsing process also allows the crawler to identify and categorize the content of the page, such as text, images, and other media.

4. Following Links

- The links discovered during the parsing step are added to a list of URLs to visit next. This list is often managed in a queue, with new URLs being added and visited URLs being removed or marked as visited to prevent the crawler from revisiting the same pages.

5. Respecting Robots.txt

- Before fetching a new page, the crawler checks the website’s robots.txt file. This file, located at the root of a website, specifies which parts of the site should not be accessed by crawlers. Ethical crawlers respect these rules to avoid overloading websites and to respect the wishes of website owners.

6. Managing Crawl Rate and Depth

- Crawlers manage the rate at which they visit pages (crawl rate) to avoid overwhelming web servers. They also decide how deep into a site’s link hierarchy to go (crawl depth), which can depend on the crawler’s purpose and the resources available.

7. Indexing and Storing Data

- The content and data extracted during the crawl are indexed and stored in databases. This information can then be used for various purposes, such as powering search engines, analyzing web content, or feeding into machine learning models.

8. Continuous and Incremental Crawling

- The web is dynamic, with new content being added and old content being changed or removed constantly. Crawlers often revisit sites periodically to update their databases with the latest content. This process is known as incremental crawling.

Web crawling is a complex, resource-intensive process that requires sophisticated algorithms to manage scale, efficiency, and politeness to web servers. It’s the foundational technology behind search engines and many other web services that rely on up-to-date data from across the Internet.

What does crawling of data mean?

Crawling of data refers to the automated process where a program, known as a web crawler, spider, or bot, systematically browses the World Wide Web in order to collect data from websites. This process involves the crawler starting from a set of initial web pages (seed URLs) and using the links found in these pages to discover new pages, fetching their content, and continuing this pattern to traverse the web. The primary aim is to index the content of websites, allowing for the data to be processed and organized in a way that it can be easily searched and retrieved, typically for use in web search engines, data analysis, market research, and various other applications.

Crawling is foundational to the operation of search engines, which rely on these processes to gather up-to-date information from the web so that they can provide relevant and timely search results to users. The process is carefully managed to respect website guidelines (such as those specified in robots.txt files), to avoid overloading servers, and to ensure that the collected data is used ethically and legally.

What does data scraping do?

Data scraping automates the extraction of information from websites. This process involves using software or scripts to access the internet, retrieve data from web pages, and then process it for various purposes. Here’s a breakdown of what data scraping does:

Automates Data Collection

Instead of manually copying and pasting information from websites, data scraping tools automatically navigate through web pages, identify relevant data based on predefined criteria, and collect it much more efficiently.

Structures Unstructured Data

Web pages are designed for human readers, not for automated processing. Data scraping helps convert the unstructured data displayed on websites (like text and images) into a structured format (such as CSV, JSON, or XML files) that can be easily stored, searched, and analyzed.

Enables Large-scale Data Extraction

Data scraping tools can collect information from many pages or sites quickly, allowing for the aggregation of large volumes of data that would be impractical to gather manually.

Facilitates Real-time Data Access

Some data scraping setups are designed to collect information in real-time, enabling users to access up-to-date data. This is particularly useful for monitoring dynamic information, such as stock prices, weather conditions, or social media trends.

Supports Data Analysis and Decision-making

The data collected through scraping can be analyzed to uncover trends, patterns, and insights. Businesses and researchers use these analyses to make informed decisions, understand market dynamics, or drive strategic planning.

Enhances Productivity

By automating the tedious and time-consuming task of data collection, data scraping allows individuals and organizations to focus their efforts on analyzing and using the data rather than on gathering it.

Serves Various Industries and Domains

Data scraping is utilized across a wide range of fields, including e-commerce (for price monitoring and comparison), finance (for market analysis and tracking financial data), marketing (for lead generation and social media monitoring), real estate (for aggregating listings), and academic research, among others.

Despite its many benefits, it’s important to approach data scraping with consideration for legal constraints and ethical guidelines. Websites may have terms of service that limit how their data can be used, and there are regulations, like the GDPR, that govern how personal data can be collected and handled. Properly navigating these considerations is essential for responsible and lawful data scraping practices.

Is it legal to scrape data?

The legality of data scraping depends on several factors, including the jurisdiction, the nature of the data being scraped, how the data is used, and the specific terms of service of the website being scraped. There isn’t a one-size-fits-all answer, as laws and regulations vary significantly across different countries and regions. Here are some key considerations:

Terms of Service

Many websites include clauses in their terms of service that explicitly prohibit scraping. Violating these terms could potentially lead to legal action, though the enforceability and legal implications can vary.

Copyright Law

Data scraping could infringe on copyright laws if the scraped data is copyrighted material and the use of that data violates copyright. The legality under copyright law can depend on the amount and substantiality of the data scraped, among other factors.

Data Protection and Privacy Laws

In jurisdictions with strict data protection laws, like the European Union’s General Data Protection Regulation (GDPR), scraping personal data without consent can be illegal. It’s crucial to understand and comply with these laws, especially when handling identifiable information about individuals.

Computer Fraud and Abuse Act (CFAA) in the United States

The CFAA prohibits accessing a computer without authorization or in excess of authorization. There has been legal debate about whether scraping public-facing websites constitutes a violation of the CFAA, and interpretations can vary by case.

Recent Legal Precedents

Several high-profile legal cases have addressed data scraping, with varying outcomes. These cases often hinge on specific details such as whether the scraper bypassed technical barriers, the nature of the scraped data, and how the data was used. Legal precedents can help guide what is considered acceptable or unacceptable practice, but they can also vary widely.

Legal and Ethical Considerations

Beyond legality, ethical considerations should also guide decisions about scraping data. This includes respecting user privacy, avoiding overloading servers with requests, and considering the impact of scraping on the data source.

Given these complexities, it’s advisable to consult with legal experts familiar with the relevant laws and regulations in your jurisdiction before engaging in data scraping, especially if you’re scraping at scale or dealing with potentially sensitive or personal data. Compliance with website terms of service, copyright laws, and data protection regulations is crucial to ensure that scraping activities remain legal and ethical.

Is data scraping easy to learn?

Data scraping can be relatively easy to learn for those with some background in programming and web technologies, though the ease of learning can vary widely depending on the complexity of the tasks you aim to perform and the tools you choose to use. Here are a few factors that can influence how easy or difficult you might find learning data scraping:

Basic Programming Skills

Having a foundational understanding of programming concepts and familiarity with at least one programming language (commonly Python or JavaScript for web scraping) is crucial. Python, in particular, is popular for data scraping due to its readability and the powerful libraries available (like Beautiful Soup and Scrapy), which simplify the process.

Understanding of HTML and the Web

Understanding how web pages are structured (HTML) and how client-server communication works (HTTP/HTTPS) are essential for effective scraping. Knowing how to identify the data you need within the structure of a web page (using tools like web developer tools in browsers) can make learning data scraping much more manageable.

Availability of Libraries and Tools

There are many libraries, frameworks, and tools designed to facilitate data scraping, ranging from simple browser extensions for non-programmers to sophisticated libraries for developers. The availability of these resources makes it easier to start learning and executing scraping projects.

Complexity of the Target Websites

The difficulty of scraping can increase significantly with the complexity of the website from which you’re trying to extract data. Sites that heavily use JavaScript, require login authentication, or use anti-scraping measures can present additional challenges that require advanced techniques to overcome, such as using headless browsers or managing sessions and cookies.

Legal and Ethical Considerations

Understanding the legal and ethical aspects of scraping (such as compliance with terms of service, copyright laws, and privacy regulations) is crucial. This knowledge is necessary not only for conducting scraping responsibly but also for ensuring that your scraping activities are legal.

Data Scraping Meaning

Data scraping, also known as web scraping, refers to the process of extracting data from websites. This technique involves using software or automated scripts to access the internet, navigate web pages, and systematically collect information from those pages. The extracted data can then be processed, analyzed, stored, or used in various applications. Data scraping is commonly used for purposes such as:

- Market Research: Gathering data on market trends, customer preferences, and competitive strategies.

- Price Monitoring: Tracking product prices across different e-commerce platforms for competitive analysis or price optimization.

- Lead Generation: Extracting contact information of potential customers or clients for sales and marketing efforts.

- Content Aggregation: Compiling news, blog posts, or other content from multiple sources into a single platform.

- Social Media Analysis: Collecting data from social media platforms to analyze public sentiment, trends, or influencers.

- Real Estate Listings: Aggregating property listings from various real estate websites for analysis or to create a consolidated property search platform.

- Job Boards: Aggregating job listings from various online job boards to create a comprehensive job search platform.

Data scraping can significantly automate and expedite the process of data collection, allowing for the efficient handling of large volumes of information that would be impractical to collect manually. However, it’s important to consider legal and ethical issues, including respecting website terms of use, copyright laws, and privacy regulations, when conducting data scraping activities.