The financial sector is changing at lightning speed. Whether it’s automated trading, digital payments, or protecting financial institutions from illicit activity such as fraud and money laundering, innovation often depends on the quality and breadth of the data behind it. In recent years, web data aggregation has emerged as a powerful way to feed machine learning algorithms the real-time inputs they need to make adaptive, high-impact decisions.

In modern banking and investment, advanced algorithms are no longer just a competitive advantage; they are becoming the norm. Complex modeling techniques, combined with a growing reliance on streaming data, create fertile ground for autonomous systems that analyze customer behavior, anticipate market fluctuations, and scale compliance efforts. Nowhere is this more evident than in sensitive areas that call for close oversight, such as anti-money laundering (AML) compliance, where robust data pipelines help institutions respond to evolving regulations and threats.

Below, we explore how web data aggregation boosts autonomous machine learning in financial applications, why a holistic approach to external data is crucial, and how responsible data solutions can set your organization on the path toward better risk mitigation and deeper market insight. We avoid an overly technical deep-dive, but we highlight the key considerations for anyone who wants to harness large-scale web data for financial analytics, especially in AML compliance, risk management, and algorithmic decision-making.

The Evolution of Autonomous ML in Financial Services

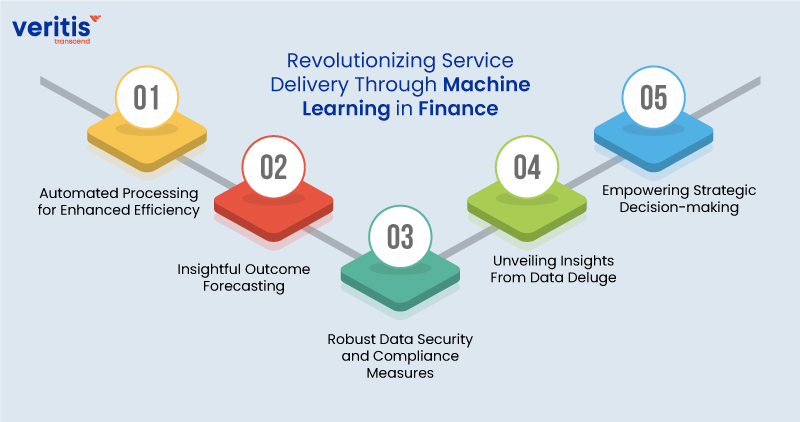

Source: https://www.veritis.com/

Not too long ago, financial institutions relied primarily on static models built by data teams in quarterly or annual cycles. As markets became more volatile and consumer expectations shifted, a new era of rapid iteration and real-time analysis emerged. Machine learning models that could update their parameters automatically, based on live data, were no longer a luxury but a strategic necessity.

This transformation, frequently noted in discussions of AML programs, goes beyond simple predictions of creditworthiness or asset pricing. It involves a new operational mindset: data is never “final.” Instead, data flows continuously into algorithms that refine themselves in near real time. The shift from static to dynamic ML requires constant, rich data feeds, spanning everything from social media sentiment to commodity price trackers.

Why Does Web Data Aggregation Matter for Business Growth?

Autonomous machine learning systems need timely, accurate, and wide-ranging information. In the finance sector, systematically capturing this data from public digital sources is often called web data aggregation. By collecting information across multiple online platforms (news outlets, government portals, corporate websites, and e-commerce marketplaces), firms can build a robust mosaic of market realities.

1. Real-Time Market Updates

Stock tickers and commodity prices aren’t the only indicators that matter. Forum discussions, regulatory updates, and consumer trends can all shift the trajectory of an investment. In AML-related contexts, a sudden announcement or red flag can dramatically alter a model’s decisions.

2. Customer and Competitor Insight

By analyzing social media sentiment or third-party review platforms, financial institutions can detect early warnings about a company’s financial health or changing consumer preferences. These signals are invaluable to the predictive models that fuel autonomous ML applications, helping them respond faster and more accurately to evolving risks.
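To make the idea concrete, here is a minimal lexicon-based sentiment scorer in Python. It is a sketch under stated assumptions, not a production model: the word list and its weights are hypothetical, and real systems typically use a curated financial lexicon or a trained classifier. Each post is scored as the average weight of the lexicon words it contains, and the post scores are then averaged per company.

```python
import re

# Hypothetical weights for illustration only; not a real financial lexicon.
LEXICON = {
    "beat": 1.0, "growth": 1.0, "upgrade": 1.0, "strong": 0.5,
    "miss": -1.0, "lawsuit": -1.0, "downgrade": -1.0, "weak": -0.5,
}


def sentiment_score(text: str) -> float:
    """Average lexicon weight of matched words; 0.0 if no word matches."""
    tokens = re.findall(r"[a-z']+", text.lower())
    hits = [LEXICON[t] for t in tokens if t in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0


def aggregate_sentiment(posts: list[str]) -> float:
    """Mean sentiment across all posts mentioning one company."""
    if not posts:
        return 0.0
    return sum(sentiment_score(p) for p in posts) / len(posts)


posts = [
    "Analysts expect strong growth this quarter",
    "Rumours of a lawsuit and a possible downgrade",
]
print(aggregate_sentiment(posts))  # -0.125
```

A score below zero leans negative; a predictive model could use the aggregate as one input feature alongside price and volume data.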

3. Creditworthiness and Risk Modeling

Traditional risk assessments rely heavily on internal data: loan histories, payment records, and credit scores. Web data aggregation can reveal trends that internal data might miss. In AML-adjacent settings, it is often external intelligence about a company’s or individual’s behavior that triggers a reassessment of credit or counterparty risk.

4. Automation at Scale

Manual data collection breaks down quickly when we talk about hundreds of thousands of data points that need to be refreshed hourly or daily. Web scraping and data extraction solutions are designed to automate this process, ensuring that autonomous ML models receive fresh data without major lags.
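Refreshing sources on different cadences (for example, hourly for news and daily for filings) is largely a scheduling problem. The sketch below assumes a hypothetical `Source` record with a per-source refresh interval and selects the sources that are due for another scrape; the URLs are placeholders, and this is an illustration rather than any particular provider’s scheduler.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Source:
    url: str                                 # placeholder URL for a scraped source
    refresh_every: timedelta                 # desired refresh cadence
    last_fetched: Optional[datetime] = None  # None means never fetched


def due_sources(sources: List[Source], now: datetime) -> List[Source]:
    """Return sources never fetched, or whose refresh interval has elapsed."""
    return [
        s for s in sources
        if s.last_fetched is None or now - s.last_fetched >= s.refresh_every
    ]


now = datetime(2024, 1, 2, 12, 0)
sources = [
    Source("https://example.com/news", timedelta(hours=1), datetime(2024, 1, 2, 11, 30)),
    Source("https://example.com/filings", timedelta(days=1), datetime(2024, 1, 1, 11, 0)),
    Source("https://example.com/new-feed", timedelta(hours=6)),
]
print([s.url for s in due_sources(sources, now)])
# ['https://example.com/filings', 'https://example.com/new-feed']
```

In practice, a scheduler such as cron or a task queue would call a function like this periodically and enqueue the returned URLs for scraping.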

From Data Collection to Intelligent Decision-Making

Simply gathering data doesn’t inherently create intelligence. Each piece of content extracted from the web must be cleaned, normalized, and integrated into an appropriate data pipeline for further analysis. The real value surfaces once you feed this data into machine learning systems that can:

- Identify Anomalies: Spot outliers in asset prices or transaction volumes, or suspicious patterns of the kind AML frameworks are designed to flag.

- Perform Sentiment Analysis: Gauge public or market sentiment around a certain stock, currency, or sector.

- Predict Outcomes: Forecast financial metrics like revenue streams, default probabilities, or even macroeconomic indicators.

- Optimize Strategies: Fuel algorithmic trading, dynamic pricing of financial products, or real-time risk scoring.
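As one illustration of anomaly detection, a rolling z-score flags a value that sits far outside the recent history of the same series. This is a simple statistical baseline assumed here for illustration, not a method prescribed by any AML framework; the window size of 5 and the threshold of 3 standard deviations are arbitrary choices.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def rolling_zscore_anomalies(
    values: Sequence[float], window: int = 5, threshold: float = 3.0
) -> List[int]:
    """Return indices whose value deviates from the preceding `window`
    values by more than `threshold` population standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        # A flat history has no spread, so a z-score is undefined; skip it.
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


volumes = [100, 102, 98, 101, 99, 100, 103, 500, 101]
print(rolling_zscore_anomalies(volumes))  # [7]
```

Comparing each point against the preceding window, rather than the whole series, keeps a single large spike from inflating the baseline it is measured against.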

While an internal data warehouse can provide a strong historical reference point, the dynamic nature of markets – especially in global finance – necessitates external inputs. This is where large-scale web scraping becomes a competitive differentiator.

What Core Pillars Make Data Aggregation Successful?

Building an autonomous ML model on top of aggregated data sounds straightforward, but in practice, success depends on several pillars:

1. Diverse Data Sources

Variety matters. You need a broad range of data spanning news outlets, social media, regulatory websites, industry reports, and corporate disclosures. For AML screening, for instance, official sanctions lists and watchlists of designated entities can be pivotal.
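Matching scraped names against a watchlist usually needs some normalization, because the same entity can appear with different casing, punctuation, or accents. The sketch below normalizes names and uses Python’s standard `difflib.SequenceMatcher` for fuzzy matching. The watchlist entries are hypothetical, the 0.9 cutoff is an assumption, and real screening would rely on official lists and dedicated matching tools.

```python
import unicodedata
from difflib import SequenceMatcher
from typing import List, Tuple


def normalize_name(name: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    cleaned = "".join(
        c if c.isalnum() or c.isspace() else " " for c in no_accents.lower()
    )
    return " ".join(cleaned.split())


def screen(
    name: str, watchlist: List[str], min_ratio: float = 0.9
) -> List[Tuple[str, float]]:
    """Return watchlist entries whose normalized similarity meets min_ratio."""
    target = normalize_name(name)
    hits = []
    for entry in watchlist:
        ratio = SequenceMatcher(None, target, normalize_name(entry)).ratio()
        if ratio >= min_ratio:
            hits.append((entry, round(ratio, 3)))
    return hits


# Hypothetical entries, not taken from any real sanctions list.
watchlist = ["Acme Trading Ltd.", "Société Exemple SA"]
print(screen("ACME  trading, LTD", watchlist))  # [('Acme Trading Ltd.', 1.0)]
print(screen("Societe Exemple S.A.", watchlist))
```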

2. Data Quality and Accuracy

Garbage in, garbage out. Even the most advanced autonomous system falters if fed outdated or contradictory information. Quality checks, such as verifying source credibility and deduplicating overlapping datasets, help maintain model reliability.
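Deduplicating overlapping feeds can start with a content fingerprint. This sketch assumes hypothetical records with `source`, `title`, and `published` fields, and treats two records as duplicates when their case- and whitespace-normalized titles and publication dates match, regardless of which feed they came from.

```python
import hashlib
from typing import Dict, List


def content_key(record: Dict[str, str]) -> str:
    """Fingerprint a record by normalized title plus publication date,
    ignoring which feed it came from."""
    title = " ".join(record["title"].lower().split())
    basis = f"{title}|{record['published']}"
    return hashlib.sha256(basis.encode("utf-8")).hexdigest()


def deduplicate(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first occurrence of each fingerprint, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = content_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


records = [
    {"source": "feed_a", "title": "Bank X reports Q3 loss", "published": "2024-10-15"},
    {"source": "feed_b", "title": "Bank X  Reports Q3 Loss", "published": "2024-10-15"},
    {"source": "feed_b", "title": "Bank Y raises guidance", "published": "2024-10-15"},
]
print([r["source"] for r in deduplicate(records)])  # ['feed_a', 'feed_b']
```

Exact fingerprints only catch near-identical copies; reworded versions of the same story would need fuzzier techniques such as shingling or MinHash.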

3. Timeliness

Markets move fast. A single piece of news can shift investor sentiment or reveal new risk factors. Ensuring your pipeline captures and processes data in real time (or close to it) is vital to building reactive machine learning systems.

4. Ethical and Legal Compliance

Collecting public data is often permissible, but website terms of service, copyright, and data protection laws vary by jurisdiction, so always check the local and international rules that apply. The last thing any institution wants is a compliance setback, particularly when a regulator is already focused on AML. Working with reputable partners and adopting responsible scraping practices helps organizations mitigate legal risk.
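One concrete responsible-scraping habit is checking a site’s robots.txt before fetching. Python’s standard library module `urllib.robotparser` can evaluate those rules. The sketch below parses an inline, hypothetical robots.txt so it runs without network access, and `ExampleBot` is a placeholder user agent. Robots.txt is a convention, not a substitute for legal review of terms of service and data protection rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed inline so no network call is made.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""


def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that answers can_fetch queries."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = build_robots_parser(ROBOTS_TXT)
agent = "ExampleBot"  # placeholder user agent
print(rules.can_fetch(agent, "https://example.com/news/today"))      # True
print(rules.can_fetch(agent, "https://example.com/private/report"))  # False
print(rules.crawl_delay(agent))                                      # 10
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` would fetch the real file instead of the inline text.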

5. Integrative Analytics

Data integration and advanced analytics are critical next steps. Think of aggregated data as the raw feed. It needs to be integrated into an environment, such as a data lake or specialized analytics platform, where ML algorithms run continuously and refine themselves.
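Before aggregated data reaches an analytics platform, fields from different sources usually need a common schema. The sketch below assumes hypothetical raw records with `source`, `date`, and `amount` strings and a fixed list of accepted date formats. Note that `%d/%m/%Y` assumes day-first dates and would misread US-style inputs.

```python
from datetime import datetime
from decimal import Decimal
from typing import Dict

# Assumed source formats; "%d/%m/%Y" treats dates as day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def parse_date(raw: str) -> str:
    """Convert a date string in any accepted format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def parse_amount(raw: str) -> Decimal:
    """Remove '$' signs and thousands separators, e.g. '$1,250.50'."""
    return Decimal(raw.replace("$", "").replace(",", "").strip())


def normalize(record: Dict[str, str]) -> Dict[str, object]:
    """Map one raw scraped record onto a common schema."""
    return {
        "source": record["source"],
        "date": parse_date(record["date"]),
        "amount": parse_amount(record["amount"]),
    }


raw = [
    {"source": "site_a", "date": "2024-03-05", "amount": "1250.50"},
    {"source": "site_b", "date": "05/03/2024", "amount": "$1,250.50"},
    {"source": "site_c", "date": "Mar 5, 2024", "amount": "1,250.50"},
]
for row in raw:
    print(normalize(row))
```

Using `Decimal` rather than `float` avoids binary rounding surprises for monetary amounts.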

How Do Prompt Data Feeds Boost Autonomous ML?

Imagine your AI-driven trading platform or risk-scoring engine can seamlessly ingest global news headlines, parse social media chatter around emerging fintech solutions, and glean updated consumer sentiment from thousands of online forums. With each new data point, the model adjusts its weights and predictions, enabling near-autonomous operation.
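A model that “adjusts its weights with each new data point” is doing online learning. As a minimal sketch, assuming a binary target (for example, whether a hypothetical risk event followed) and numeric features such as a sentiment score and a volume z-score, the logistic regression below takes one stochastic gradient descent step on log-loss per labeled example. A real system would add feature scaling, regularization, and drift monitoring.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineLogisticRegression:
    """Logistic regression updated one example at a time with SGD."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x: List[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return sigmoid(z)

    def update(self, x: List[float], y: int) -> None:
        """One gradient step on log-loss; y is 0 or 1."""
        error = self.predict_proba(x) - y  # gradient of log-loss w.r.t. z
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


# Hypothetical features: [sentiment score, volume z-score]
model = OnlineLogisticRegression(n_features=2)
stream = [([-0.8, 4.0], 1), ([0.5, 0.1], 0), ([-0.6, 3.5], 1), ([0.7, -0.2], 0)]
for features, label in stream:
    model.update(features, label)
print(model.predict_proba([-0.7, 3.8]))
```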

These gains matter most when your institution faces heavy compliance demands, such as those in AML. An autonomous system can flag anomalies or suspicious transactions faster by correlating seemingly unrelated data points from across the web. It’s not just about combing through local or proprietary databases; it’s about using web data to see the bigger picture.

Common Challenges and Practical Solutions in Data Aggregation

Despite its game-changing potential, aggregating massive volumes of external data also presents challenges:

- Scalability: Large-scale data extraction requires robust infrastructure. For institutions needing updates around the clock, advanced data extraction setups with load balancing, proxy management, and error handling are essential.

- Data Cleansing: Web content can be messy, with leftover HTML tags, incomplete records, or inconsistent formats. Automated pipelines need to normalize this data for seamless ML integration.

- Security: Handling external data can expose systems to vulnerabilities if not properly sandboxed or scanned. Institutions must deploy strict security protocols to protect internal systems.

- Cost-Effectiveness: Building everything in-house can be expensive. Working with specialized data extraction providers can cut costs, accelerate deployment, and free internal teams to focus on analytics rather than scraping logistics.
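For the data cleansing step, Python’s built-in `html.parser` is enough for a minimal sketch that strips tags, drops `<script>` and `<style>` contents, decodes entities such as `&amp;`, and collapses whitespace. It assumes reasonably well-formed HTML; messier pages generally call for a more forgiving parser.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True decodes entities like &amp;
        self._skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    """Strip markup and collapse whitespace. Text nodes are joined with
    spaces, so words split across inline tags may gain a stray space."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return " ".join(" ".join(extractor.parts).split())


page = (
    "<html><head><style>p {color: red}</style></head><body>"
    "<h1>Q3  results</h1><p>Revenue &amp; margin up</p>"
    "<script>var x = 1;</script></body></html>"
)
print(html_to_text(page))  # Q3 results Revenue & margin up
```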

Future Outlook: Where Web Data Aggregation Meets Self-Learning Algorithms

The rapid emergence of advanced deep learning architectures, combined with powerful compute resources, points toward a future where financial algorithms operate with even more autonomy. Intelligent systems will parse text, images, and possibly even video content from the web, merging it with traditional market data to create predictive models that adapt in real time. For financial institutions hoping to capture these gains, adopting a well-orchestrated data strategy is non-negotiable.

As markets globalize and digital assets gain prominence, the lines between conventional financial instruments and emerging digital products blur. Access to broader datasets, particularly real-time information gleaned from an ever-expanding web, will differentiate winners from laggards. For institutions with heavy compliance obligations, especially in AML, immediate access to relevant signals can be the difference between catching an adverse event early and dealing with costly fallout later.

The Role of Web Scraping Providers in Modern Data Strategies

Building a proprietary scraping operation often demands specialized expertise, such as rotating IP addresses to avoid blocks, parsing complex JavaScript-heavy sites, and scaling up infrastructure. This complexity grows if your institution plans to scrape dozens or hundreds of sources simultaneously, especially if you want near real-time coverage of developments that can sway an autonomous ML model.

Reputable web scraping and data extraction providers reduce these headaches, offering solutions tailored to your data needs. They manage the back-end complexities, ensuring compliance with legal and ethical guidelines while delivering curated datasets in a readily usable format. By partnering with such specialists, financial organizations can stay focused on algorithm design, analytics, and strategic implementation.

Conclusion

In a landscape where every second and every insight can affect the bottom line, investing in a strong data pipeline may be the most critical factor separating top-tier financial innovators from the rest. By integrating comprehensive data scraping capabilities into your autonomous ML frameworks, you position your organization to succeed in an era of heightened digital competition and keep your internal systems agile enough for whatever the future of global finance holds.

For custom web scraping solutions, get in touch with us at sales@promptcloud.com