What is Data Extraction?

Data extraction is the process of retrieving structured or unstructured data from various sources for further processing and analysis. This practice is integral to transforming raw data into insightful information. Data sources can include:

- Databases

- Webpages

- Emails

- Documents

- PDFs

- APIs

Types of Data Extraction

Structured Data Extraction

- Usually involves databases where data resides in tables and is well-organized.

- Relies on SQL queries to fetch specific data points.
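As a rough illustration of structured extraction, the sketch below runs a parameterized SQL query against an in-memory SQLite database. The `orders` table, its columns, and the sample rows are hypothetical and exist only for this example.

```python
import sqlite3


def fetch_orders_over(conn, min_total):
    """Return (id, customer, total) rows whose total is at least min_total."""
    cur = conn.execute(
        "SELECT id, customer, total FROM orders WHERE total >= ? ORDER BY id",
        (min_total,),  # parameter binding avoids building SQL by string concatenation
    )
    return cur.fetchall()


# Build a throwaway in-memory database with a hypothetical `orders` table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (id, customer, total) VALUES (?, ?, ?)",
    [(1, "Acme", 120.0), (2, "Globex", 45.5), (3, "Initech", 300.0)],
)
conn.commit()

rows = fetch_orders_over(conn, 100)
print(rows)
```

The same pattern should carry over to other relational databases, with the connection setup swapped for the relevant driver.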

Unstructured Data Extraction

- Data sources include text files, web pages, and other formats lacking a predefined structure.

- Requires advanced techniques like Natural Language Processing (NLP) or web scraping.
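To make the web scraping side of unstructured extraction concrete, here is a minimal sketch that pulls links out of an HTML string using only Python's standard library. The `LinkExtractor` class and the sample markup are made up for illustration; a production scraper would also need to fetch pages, respect the site's terms, and cope with malformed HTML.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, link text) pairs from anchor tags that have an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the anchor currently being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an anchor with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


SAMPLE_HTML = """
<html><body>
  <h1>Products</h1>
  <a href="/items/1">Blue <b>Widget</b></a>
  <a href="/items/2">Red Widget</a>
  <a>No link here</a>
</body></html>
"""

parser = LinkExtractor()
parser.feed(SAMPLE_HTML)
print(parser.links)
```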

Techniques Used in Data Extraction

- Web Scraping: Collects data from web pages using automated tools or scripts.

- Optical Character Recognition (OCR): Converts different types of documents, such as scanned paper documents or PDFs, into editable and searchable data.

- ETL (Extract, Transform, Load): Involves three phases. Data is first extracted, then transformed (cleansed and formatted), and finally loaded into a data warehouse or database.
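The three ETL phases can be sketched in a few lines. This example assumes a small CSV string as the source and an in-memory SQLite table as the destination; the column names, cleaning rules, and `products` table are illustrative choices, not a prescribed pipeline.

```python
import csv
import io
import sqlite3

RAW_CSV = """name,price,currency
 Blue Widget ,19.99,usd
Red Widget,,usd
Green Widget,5.00,USD
"""


def extract(text):
    """Extract: read raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim names, upper-case currency codes, drop rows without a price."""
    cleaned = []
    for row in rows:
        if not row["price"]:
            continue
        cleaned.append((row["name"].strip(), float(row["price"]), row["currency"].upper()))
    return cleaned


def load(conn, records):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL, currency TEXT)")
    conn.executemany("INSERT INTO products VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute("SELECT name, price, currency FROM products ORDER BY price").fetchall()
print(loaded)
```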

Data Extraction APIs

Data extraction APIs empower businesses to automate and streamline the process of gathering useful information from various sources such as websites, databases, and documents. These tools are pivotal for enhancing data collection methods, improving efficiency, and gaining actionable insights.


Essential Tips for Choosing the Right Data Extraction API

Selecting the right Data Extraction API is critical for robust and efficient data collection. The following criteria should guide the decision-making process:

1. Data Extraction Capabilities

- Versatility: APIs should support multiple data formats like JSON, XML, CSV, and HTML.

- Accuracy: High precision in data extraction ensures reliability.

- Speed: Fast data retrieval keeps operations efficient.
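Format versatility matters because downstream code usually wants one shape regardless of what the API returned. The sketch below normalizes JSON, CSV, and XML payloads into the same list of dictionaries; the `sku` and `price` fields and the sample payloads are hypothetical.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def from_json(text):
    """Parse a JSON array of objects."""
    return [{"sku": r["sku"], "price": float(r["price"])} for r in json.loads(text)]


def from_csv(text):
    """Parse CSV text with a header row."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"sku": r["sku"], "price": float(r["price"])} for r in reader]


def from_xml(text):
    """Parse <item> elements that contain <sku> and <price> children."""
    root = ET.fromstring(text)
    return [
        {"sku": item.findtext("sku"), "price": float(item.findtext("price"))}
        for item in root.iter("item")
    ]


JSON_BODY = '[{"sku": "A1", "price": 9.5}]'
CSV_BODY = "sku,price\nA1,9.5\n"
XML_BODY = "<items><item><sku>A1</sku><price>9.5</price></item></items>"

records = [from_json(JSON_BODY), from_csv(CSV_BODY), from_xml(XML_BODY)]
print(records)
```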

2. Ease of Integration

- Compatibility: The API should work seamlessly with existing systems and languages such as Python, Java, or Ruby.

- Documentation: Comprehensive and clear documentation facilitates quick implementation.

- Sample Code: Availability of sample code can accelerate integration and testing.

3. Scalability

- Load Handling: Ability to manage high volumes of data without performance degradation.

- Cloud Support: APIs offering cloud-based solutions often provide better scalability.

- Rate Limiting: Understand limitations on request rates, especially for large-scale operations.
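One way to stay under a provider's request limit is to throttle on the client side. The following is a minimal sketch of that idea; the `Throttle` class and the limit of five requests per second are assumptions for illustration, and a real integration should follow whatever limits and retry headers the provider documents.

```python
import time


class Throttle:
    """Space out calls so that at most `max_per_second` start each second."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None  # earliest time the next call may start

    def wait(self):
        """Block until the next call is allowed, then reserve the following slot."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.min_interval


throttle = Throttle(max_per_second=5)
started = time.monotonic()
for page in range(3):
    throttle.wait()  # a real client would send its API request here
elapsed = time.monotonic() - started
print(f"3 calls took {elapsed:.2f}s")
```

The clock and sleep functions are injectable so the pacing logic can be checked without actually waiting.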

4. Security and Compliance

- Data Encryption: Ensure that the API encrypts data in transit with TLS (HTTPS).

- Authentication: Strong mechanisms like OAuth 2.0 or API keys are essential.

- Compliance: Check for compliance with regulations like GDPR or HIPAA.

5. Cost-Effectiveness

- Pricing Models: Familiarize yourself with the available subscription plans or pay-as-you-go models.

- Free Tier: Determine if a free tier is available for initial testing.

- Hidden Costs: Be aware of any potential additional costs for data volume or extra features.

6. Customer Support

- Responsiveness: Quick and knowledgeable support is critical for resolving issues.

- Channels: Multiple support channels (email, chat, phone) can be beneficial.

- Community: Active developer communities or forums can be invaluable resources.

7. Reputation and Reviews

- User Reviews: Consider feedback from current users.

- Case Studies: Look for case studies demonstrating successful implementations.

- Industry Endorsement: APIs endorsed by industry leaders often signal reliability and performance.

8. Additional Features

- Data Cleaning: Some APIs offer built-in data cleansing tools.

- Automation: Capabilities for scheduling automatic data extractions.

- Customization: Flexibility to tailor the API to specific needs.

Focusing on these criteria will help in identifying a Data Extraction API that best meets the specific needs and technical requirements for 2025 and beyond.

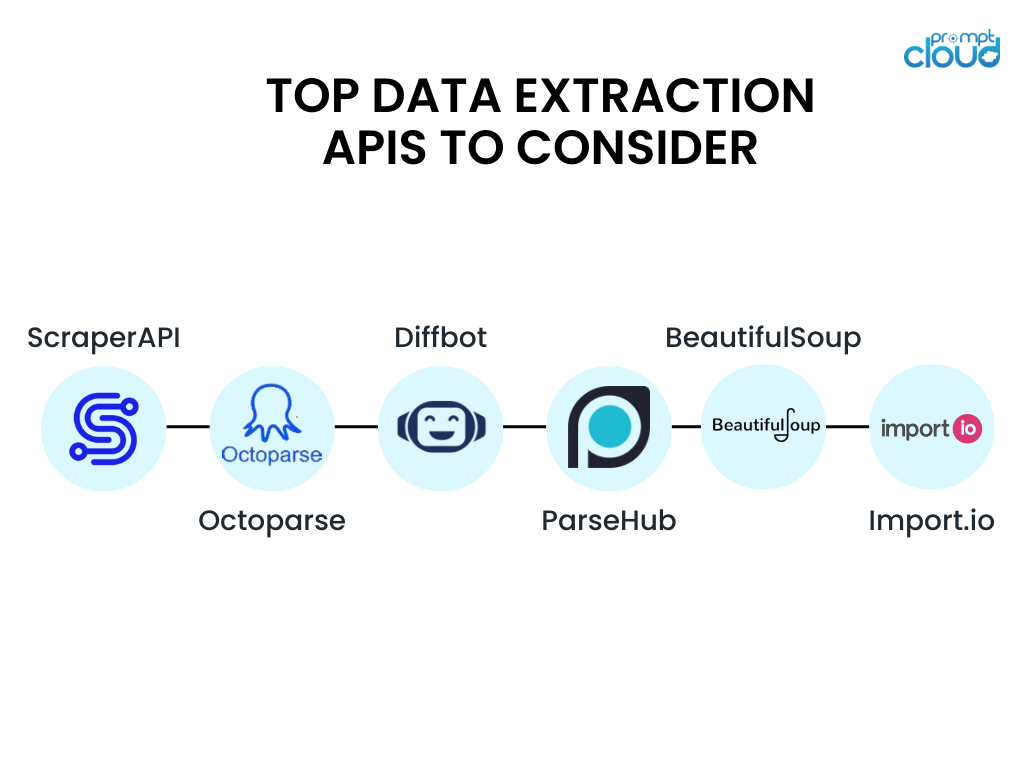

Best Data Extraction APIs in 2025

When it comes to selecting the right data extraction API, several options stand out due to their reliability, performance, and features. Here are the top APIs to consider in 2025:

1. ScraperAPI

ScraperAPI provides a powerful solution for developers looking to extract web data seamlessly. It offers:

- Rotating Proxies: Automatically rotates IP addresses to prevent blocks.

- Headless Browsers: Supports headless Chrome for JavaScript rendering.

- Geotargeting: Targets specific geographic locations.

2. Octoparse

Octoparse is known for its user-friendly interface and robust functionalities. Key features include:

- No Coding Required: Visual workflow for easy data extraction.

- Cloud-Based Extraction: Handles large-scale scraping tasks.

- Scheduled Crawling: Automates data extraction at set intervals.

3. Diffbot

Diffbot utilizes AI-driven technology to extract data with high accuracy. Its standout features are:

- Automatic Extraction: Identifies and extracts data from any web page.

- Enhanced APIs: Offers APIs for articles, products, discussions, and more.

- Custom Rules: Tailors extraction logic to specific needs.

4. ParseHub

ParseHub’s versatility makes it a preferred choice for developers. It provides:

- Interactive Interface: Supports both simple and complex data extraction tasks.

- Dynamic Content Handling: Can extract data from JavaScript-heavy websites.

- Export Options: Supports multiple formats including Excel and JSON.

5. BeautifulSoup

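BeautifulSoup is a Python library rather than a hosted API: it parses HTML and XML that you have already fetched, which makes it a common building block for web scraping projects. It is known for: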

- Flexible Parser: Handles HTML and XML parsing efficiently.

- Integration: Combines well with other Python libraries like Requests.

- Custom Parsing: Allows for precise extraction using custom search patterns.

6. Import.io

Import.io offers a complete platform for data extraction without the need for coding. Highlights include:

- Point and Click Interface: Easy to use for non-developers.

- Real-Time Data: Extracts real-time data through API integration.

- Data Integration: Connects with databases and visualization tools.

For developers and businesses seeking efficient and effective ways to collect vast amounts of data, these data extraction APIs are among the best available in 2025. Their features cater to various needs, from simple data collection to complex, large-scale scraping projects.

Data Extraction Services

Data extraction services are crucial for organizations requiring comprehensive and accurate data collection from various sources. These services utilize advanced technologies and algorithms to gather, process, and structure data efficiently. Among the top players in this domain, PromptCloud has distinguished itself through its innovative solutions and reliable performance.

Must-Have Features to Look for…

- Automated Data Scraping: Automated scripts and software systems are employed to extract data from web pages and documents, significantly reducing manual effort and errors.

- Real-time Data Collection: Ensures that the data is always up to date, making it highly relevant for time-sensitive applications.

- Data Structuring: The extracted information is organized into structured formats such as CSV, JSON, or XML, which facilitate easy analysis and integration.

- Multi-source Integration: Data can be collected from a variety of sources including social media, websites, APIs, and databases, providing a holistic view.

- Compliance and Security: Adheres to legal standards and data protection regulations, ensuring ethical data extraction practices.

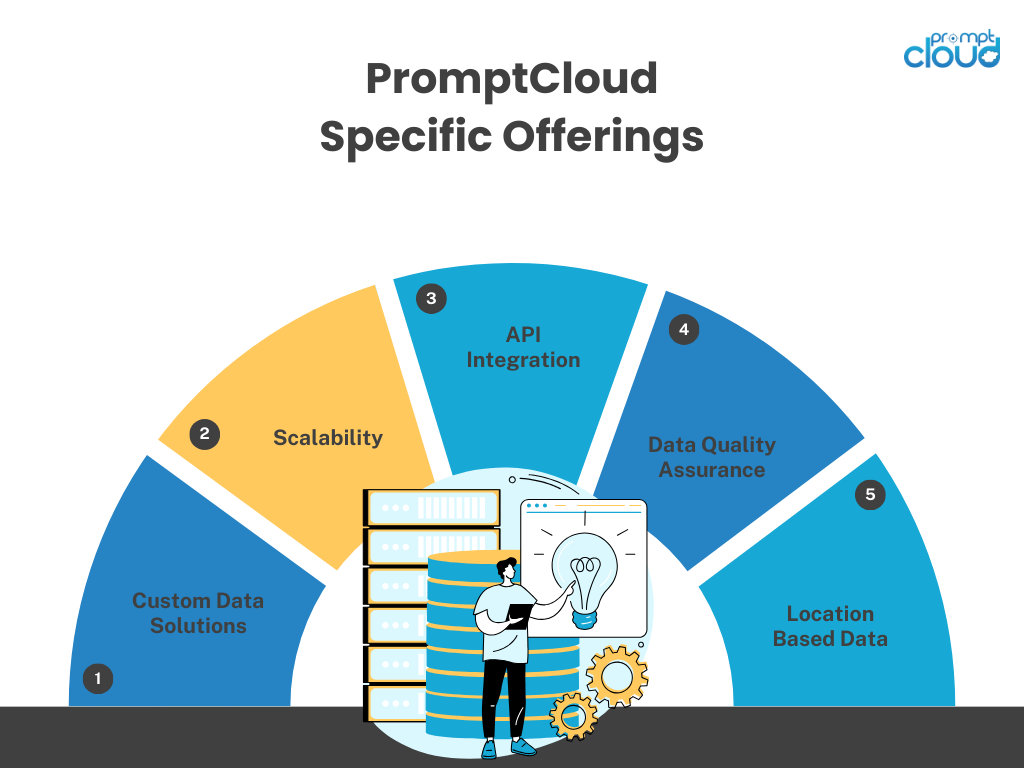

Why Choose PromptCloud’s Data Extraction Services?

- Custom Data Solutions: Tailors data extraction services to meet specific client needs, including targeted data and specific frequency of data extraction.

- Scalability: Offers scalable solutions that can handle data extraction needs from small-scale to enterprise-level operations.

- API Integration: Facilitates seamless integration with client systems through robust API support, allowing for easy data access and manipulation.

- Data Quality Assurance: Ensures that the data extracted is accurate, clean, and ready for analysis, thus minimizing the need for post-processing.

- Geolocation Data: Supports the extraction of location-based data, beneficial for businesses relying on geospatial analytics.

Key Benefits of Choosing Us…

- Efficiency: Automates tedious tasks, freeing up human resources for more strategic activities.

- Accuracy: Minimizes human error, increasing the reliability of the data collected.

- Cost-effectiveness: Reduces costs associated with manual data collection and processing.

- Insights and Analytics: Provides a steady stream of data that can be analyzed to derive actionable insights, driving business growth.

- Competitive Advantage: Offers access to a wealth of information that can be leveraged to stay ahead in the market.

Data extraction services, particularly those provided by enterprises like PromptCloud, play a pivotal role in modern data-driven strategies, offering tools that are both powerful and adaptable to varied organizational needs.

Integrating Data Extraction API into Your Workflow

Integrating a data extraction API into an existing workflow can significantly streamline data collection processes. To ensure a smooth integration, the following steps should be implemented meticulously:

1. Identify Requirements and Objectives

- Determine the specific data extraction needs based on the project scope.

- Outline the key performance indicators (KPIs) to measure the API’s effectiveness.

2. Choose the Right API

- Evaluate various data extraction APIs based on feature compatibility, performance, and cost.

- Ensure that the selected API has comprehensive documentation and active support channels.

3. Set Up API Authentication

- Obtain the required API keys or tokens through the provider’s developer portal.

- Implement secure storage practices for managing authentication credentials.

- Security should always be a top priority when handling API keys and tokens.

4. Design the Data Flow

- Map out how extracted data will be integrated into the existing systems.

- Use flowcharts or diagrams to visualize the data pipeline from extraction to final storage or analysis.

5. Implement the API

- Use the API documentation to write the necessary code for data extraction.

- Test the code thoroughly to identify potential issues during the extraction process.

6. Error Handling and Logging

- Implement robust error handling mechanisms to manage API call failures.

- Set up logging for monitoring API requests and responses, and for troubleshooting.

7. Automate Extraction Processes

- Schedule automated data extraction tasks using cron jobs or task schedulers.

- Ensure that the automation scripts can handle exceptions gracefully to prevent disruptions.

8. Data Storage and Management

- Decide on appropriate storage solutions, such as databases or data warehouses, for the extracted data.

- Implement data validation techniques to maintain data integrity.

9. Monitor and Optimize

- Continuously monitor API performance and data extraction efficiency.

- Optimize the extraction process by analyzing usage metrics and feedback.

10. Maintain API Integration

- Stay updated with API version changes and updates from the provider.

- Regularly review and refactor the integration code to adhere to best practices.

By following these steps, teams can integrate a data extraction API into their workflows efficiently, enhancing their data collection capabilities and ensuring that the extracted data is accurate and readily available for analysis. Regular maintenance and optimization will ensure long-term success and adaptability to evolving data requirements.

Future Trends and Developments in Data Extraction

The landscape of data extraction is constantly evolving, driven by technological advancements and shifts in market demands. In 2025, several key trends and developments are expected to shape this field, enhancing the capabilities and efficiency of data extraction APIs.

Artificial Intelligence and Machine Learning Integration

- Enhanced Accuracy: AI and ML algorithms are continuously improving the precision with which data extraction tools can identify and extract relevant information from unstructured data.

- Predictive Analysis: These technologies enable predictive data extraction, where the system can anticipate the types of data required based on historical patterns.

- Automation: Automated learning processes can refine data extraction methods over time, reducing the need for manual intervention.

Natural Language Processing (NLP)

- Contextual Understanding: NLP advancements will enable tools to better understand context, allowing for more accurate interpretation of complex documents.

- Multilingual Support: Enhanced NLP capabilities will support a wider range of languages, making data extraction more accessible globally.

- Sentiment Analysis: Integration of sentiment analysis can help in extracting data that reflects public opinion or emotional tone, providing deeper insights.

Real-time Data Processing

- Instant Updates: With real-time data processing, users can receive and act on data as it is generated, significantly reducing latency.

- Scalability: Advanced computing power allows data extraction tools to handle large-scale, high-frequency data streams efficiently.

- Event-driven Models: These models run extraction in response to specific triggers, which keeps the collected data timely and relevant.

Blockchain Technology

- Data Integrity: Blockchain can record extracted data in an append-only, traceable ledger, which makes later changes evident and improves reliability and accountability.

- Secure Data Sharing: It facilitates secure and transparent data sharing across multiple stakeholders, helping them verify that shared data has not been tampered with.

- Smart Contracts: These self-executing contracts streamline data extraction tasks by automatically triggering actions based on predefined conditions.

Customizable APIs

- User-Centric Designs: APIs are becoming increasingly customizable to meet the specific needs of users, offering flexible integration and personalized functionalities.

- Modular Components: The trend towards modular API components allows organizations to choose and implement only the features they need, optimizing efficiency and cost.

- Third-Party Integrations: Seamless integration with other enterprise tools and systems ensures that data extraction APIs can be part of a larger, cohesive data strategy.

Open Source and Collaboration

- Community-Driven Improvements: Open-source data extraction tools benefit from continuous enhancements driven by a collaborative global community.

- Transparency: Open source solutions offer greater transparency, allowing users to understand and modify the code to better meet their needs.

- Cost-Effectiveness: Reduced costs associated with open-source tools make advanced data extraction capabilities more accessible to smaller organizations.

Conclusion

Selecting the right data extraction API is crucial for enhancing data collection processes, offering significant advantages in terms of efficiency, accuracy, and scalability. The APIs reviewed in this article stand out for their robust features, compatibility, and performance, making them top choices for businesses aiming to leverage data effectively.

Now that you have explored the world of data extraction and discovered the top data extraction APIs of 2025, it’s time to take your data strategy to the next level. Schedule a demo with PromptCloud today and unlock the full potential of our cutting-edge data extraction services!

Frequently Asked Questions

What is API data extraction?

API data extraction refers to the process of retrieving structured data from a web service or application using an Application Programming Interface (API). APIs provide a standardized way for different software systems to communicate and exchange data, enabling seamless interaction between applications. In the context of data extraction, APIs allow businesses to access specific sets of data directly from websites or services, without the need for web scraping. This can include anything from product information on e-commerce platforms to social media insights, depending on the API’s functionality.

By leveraging API data extraction, companies can automate the process of collecting large amounts of real-time data in a consistent and scalable manner. Since APIs typically return data in a well-structured format like JSON or XML, the process is efficient, less error-prone, and easier to integrate with existing data workflows. This method is particularly valuable for businesses looking for reliable, structured data feeds from providers who offer public or private APIs.

How to extract data from a database using API?

To extract data from a database using an API, follow these general steps:

- Set Up the API: First, ensure the database you’re working with has an API available for data access. APIs provide endpoints (URLs) that allow you to interact with the database. You’ll need to obtain the API documentation from the provider, which outlines the available endpoints, the structure of requests, and the expected responses. Most APIs use REST or GraphQL architecture, and they often require authentication, such as API keys or OAuth tokens.

- Make API Requests: After configuring access, you can start making requests to the API’s endpoints. For example, if you want to retrieve data, you’ll send a GET request to the API. The API will then interact with the database, fetching the requested data in a structured format such as JSON or XML. You can apply filters, query parameters, or specific fields to refine the data extraction process. Once received, the data can be parsed, processed, and integrated into your application or workflow for further analysis.

By automating this process with API calls, you can regularly pull data from a database, ensuring that your systems are always working with the latest information available.

Does API pull data?

Yes, an API can pull data from a server or database. When you make a request to an API—typically through a GET request—you are “pulling” data from a specific endpoint. This request asks the server to retrieve and return specific information in a structured format, usually JSON or XML.

In simple terms, pulling data via an API allows you to extract real-time or on-demand information, such as product listings, user profiles, or transaction records, depending on the API’s purpose. This process is essential for applications that need up-to-date data without directly interacting with the underlying database.

What is API data scraping?

API data scraping refers to the process of extracting data from web-based APIs (Application Programming Interfaces) for specific use cases, often involving large-scale, automated data collection. Unlike traditional web scraping, which involves extracting data from HTML pages, API data scraping interacts directly with an API to access structured data, typically in formats like JSON or XML.

By using API data scraping, businesses can efficiently gather real-time data such as product listings, pricing information, or user reviews from various platforms, depending on the API’s purpose and available endpoints. Since APIs are designed to provide specific data on request, API scraping is often more reliable, faster, and less prone to errors compared to traditional web scraping, and it reduces the risk of being blocked as long as the API usage respects the provider’s terms of service.

What is API data retrieval?

API data retrieval refers to the process of accessing and fetching data from a server or database using an Application Programming Interface (API). In this context, an API acts as a communication bridge between different software systems, allowing a user or application to request specific data from a database or service. When a request is made, such as a GET request, the API retrieves the requested data and delivers it in a structured format, typically JSON or XML.

This method of data retrieval is commonly used for accessing real-time or on-demand data, such as weather updates, product information, user data, or financial records, depending on the API’s purpose. API data retrieval ensures a more streamlined and organized way of collecting data, without needing direct access to the underlying database, making it an efficient solution for integrating data into various applications and systems.

How to extract data from a database using API?

To extract data from a database using an API, follow these steps:

1. Access the API Documentation

Start by reviewing the API documentation provided by the service or database you’re working with. This documentation will outline the available endpoints (specific URLs you can request data from), the types of data you can access, and any required parameters (such as filters or queries).

2. Set Up Authentication

Most APIs require authentication to ensure secure data access. Obtain the necessary credentials, such as an API key, OAuth token, or login credentials, and include them in your API requests. This might involve adding them to the request headers or as query parameters.

3. Send API Requests

Using the API’s endpoints, you can send a request (typically a GET request) to retrieve data. The request is usually sent with tools like Postman or cURL, or programmatically in languages such as Python, Java, or JavaScript. The request will look something like:

GET https://api.example.com/data?parameter=value

The API will then fetch the relevant data from the database.
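In Python, the same request could be assembled roughly as follows. This sketch only builds the request object and does not send it; the bearer-token header is an assumption, since some APIs expect an API key header or a query parameter instead, and `YOUR_TOKEN` is a placeholder.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_get_request(base_url, params, token):
    """Build (but do not send) an authenticated GET request."""
    url = f"{base_url}?{urlencode(params)}"
    return Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


req = build_get_request("https://api.example.com/data", {"parameter": "value"}, "YOUR_TOKEN")
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))
# Sending it would look like: urllib.request.urlopen(req, timeout=10)
```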

4. Receive and Parse the Data

Once the request is successful, the API will return the data in a structured format, usually JSON or XML. You will then need to parse this data to extract the specific information you need. If you’re programming, built-in tools like JSON.parse() (JavaScript) or the json module (Python) can help with this; Python’s requests library also exposes a parsed response through its .json() method.
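As a small sketch of the parsing step, the example below decodes a JSON response body and flattens it into tuples. The response shape (`status`, `data`, nested `price`) is invented for illustration; a real API's documentation defines the actual fields.

```python
import json

RESPONSE_BODY = """
{
  "status": "ok",
  "data": [
    {"id": 101, "name": "Blue Widget", "price": {"amount": 19.99, "currency": "USD"}},
    {"id": 102, "name": "Red Widget", "price": null}
  ]
}
"""


def parse_products(body):
    """Turn the raw JSON text into flat (id, name, amount) tuples."""
    payload = json.loads(body)
    products = []
    for item in payload.get("data", []):
        price = item.get("price") or {}  # tolerate a missing or null price
        products.append((item["id"], item["name"], price.get("amount")))
    return products


products = parse_products(RESPONSE_BODY)
print(products)
```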

5. Integrate and Use the Data

After retrieving and parsing the data, you can integrate it into your system, application, or use it for further analysis. Since APIs can often return large amounts of data, you can also set parameters or filters to limit the data to only what is needed.

By following these steps, you can efficiently extract data from a database using an API for real-time insights or data-driven decision-making.