Modern websites increasingly rely on JavaScript to deliver dynamic content. While this improves the user experience, it creates significant challenges for traditional web crawling techniques, and businesses that depend on data extraction need to adapt to keep their data collection complete. This article explores current techniques for crawling JavaScript-heavy websites and offers practical solutions that site crawlers can implement.

Common Crawling Challenges of JavaScript-Heavy Websites

Traditional web crawlers are designed to extract data from static HTML content, where the information is readily available in the initial HTML response. However, JavaScript-heavy websites load content dynamically, often requiring user interactions or additional HTTP requests to display all relevant data. As a result, traditional crawling techniques may miss significant portions of the content, leading to incomplete datasets and missed opportunities.
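
As a rough illustration of the problem, the sketch below guesses whether an initial HTML response is mostly an empty JavaScript shell. The function name `looks_client_rendered` and the 200-character threshold are assumptions made for this example, not an established rule, and a real crawler would likely tune or replace the heuristic.

```python
from html.parser import HTMLParser


class TextVsScriptCounter(HTMLParser):
    """Counts visible text characters and <script> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.text_chars = 0
        self.script_tags = 0
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_tags += 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text that is outside <script> and <style> blocks.
        if self._skip_depth == 0:
            self.text_chars += len(data.strip())


def looks_client_rendered(html, min_text_chars=200):
    """Heuristic (assumed threshold): scripts present but very little text."""
    counter = TextVsScriptCounter()
    counter.feed(html)
    return counter.script_tags > 0 and counter.text_chars < min_text_chars
```

A page that returns little more than `<div id="root"></div>` plus a script tag would be flagged, which suggests that a static fetch alone will not see its content.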

To effectively crawl these websites, businesses must employ advanced techniques that can handle JavaScript execution and dynamic content loading. Below, we outline the most effective strategies and tools for successfully crawling JavaScript-heavy websites.

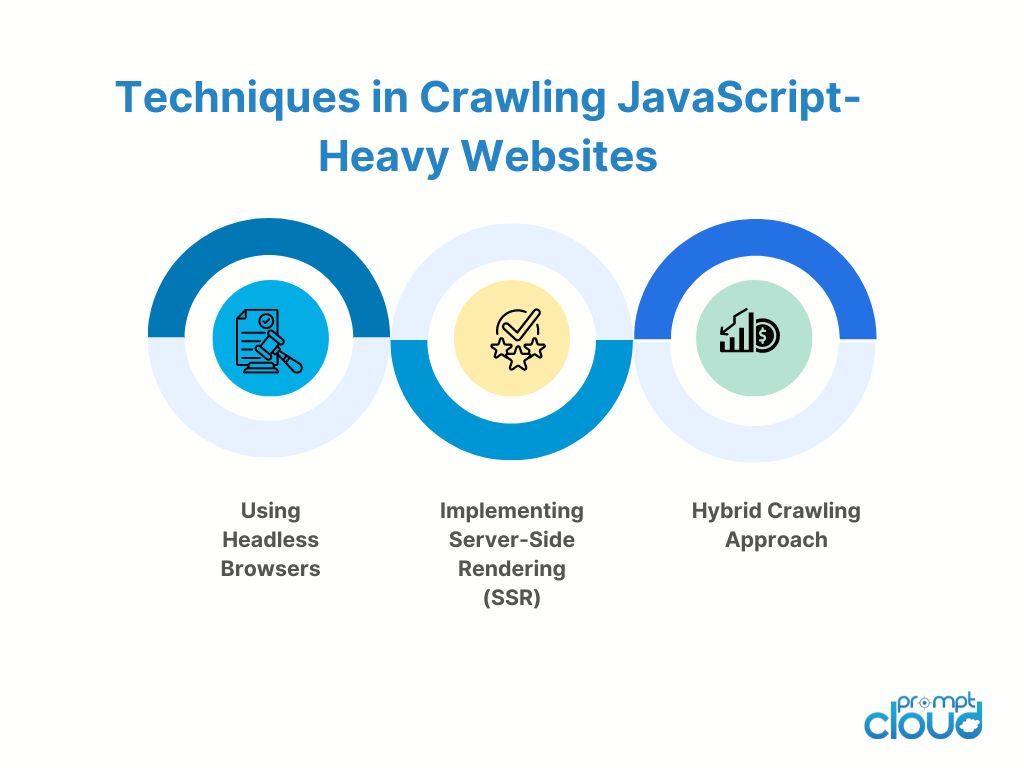

Techniques for Crawling Websites That Are JavaScript Heavy

Using Headless Browsers

One of the most powerful techniques for crawling JavaScript-heavy websites is to use a headless browser. A headless browser operates without a graphical user interface (GUI), allowing it to load and interact with web pages programmatically. Automation libraries such as Puppeteer and Playwright drive headless browsers like Chromium, executing JavaScript, fully rendering pages, and accessing dynamically loaded content much as a human user’s browser would.

How It Works:

- A headless browser simulates a real user’s browsing experience, executing JavaScript, loading dynamic content, and interacting with elements on the page.

- Site crawlers can automate the headless browser to visit URLs, wait for specific content to load, and extract the rendered HTML or target data points.
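
The steps above can be sketched with Playwright’s Python API. This is a minimal sketch, assuming Playwright and its Chromium build are installed; the function name `fetch_rendered_html` and the 15-second default timeout are choices made for this example.

```python
def fetch_rendered_html(url, wait_selector, timeout_ms=15000):
    """Load `url` in headless Chromium and return the HTML after JavaScript has run."""
    # Imported lazily so the module can be loaded without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="domcontentloaded")
            # Wait until the element we care about exists, rather than sleeping.
            page.wait_for_selector(wait_selector, timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

A call such as `fetch_rendered_html("https://example.com/products", "div.product")` would return the rendered markup; both the URL and the selector here are placeholders.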

Benefits:

- Comprehensive Data Extraction: Ensures that all dynamic content is loaded and captured, resulting in more accurate and complete datasets.

- Flexibility: Capable of handling complex web interactions, such as clicking buttons or filling out forms, to trigger additional content loading.

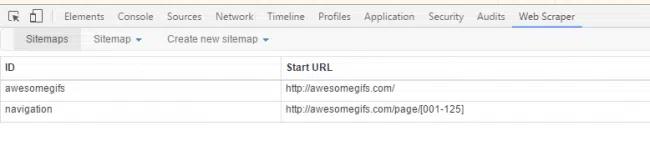

Leveraging JavaScript-Aware Crawlers

JavaScript-aware crawlers are specialized site crawlers designed to execute JavaScript and handle dynamic content natively. These crawlers come equipped with JavaScript engines that can interpret and execute JavaScript code during the crawl, making them particularly effective for JavaScript-heavy websites.

How It Works:

- JavaScript-aware crawlers parse the initial HTML and then execute the JavaScript on the page, similar to a headless browser.

- These crawlers can intercept and replicate the browser’s behavior, making additional requests as needed to load all content.
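
One way to picture the second point is a crawler that calls the same JSON endpoint the page’s own script would call. The sketch below assumes a hypothetical endpoint that accepts a `page` query parameter and returns `{"items": [...], "next_page": ...}`; real sites will differ, so the response shape and the name `collect_items` are illustrative only.

```python
import json
import urllib.parse
import urllib.request


def http_get_json(url):
    """Fetch `url` and decode the JSON body using only the standard library."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def collect_items(base_url, fetch_json=http_get_json, max_pages=50):
    """Follow a paginated JSON endpoint (assumed shape) until it runs out."""
    items = []
    page = 1
    for _ in range(max_pages):  # hard cap so a bad response cannot loop forever
        url = base_url + "?" + urllib.parse.urlencode({"page": page})
        payload = fetch_json(url)
        items.extend(payload.get("items", []))
        page = payload.get("next_page")
        if not page:
            break
    return items
```

Passing `fetch_json` as a parameter keeps the pagination logic testable without network access.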

Benefits:

- Efficiency: Often lighter than driving a full headless browser, since these crawlers are optimized specifically for crawling tasks.

- Scalability: Suitable for large-scale crawling projects where managing vast amounts of data from JavaScript-heavy sites is essential.

Implementing Server-Side Rendering (SSR)

Server-side rendering (SSR) is another approach that simplifies crawling JavaScript-heavy websites. SSR is implemented by the website’s operator rather than by the crawler: the content is rendered on the server before being sent to the client or crawler, so the crawler receives a fully rendered HTML page with all content included.

How It Works:

- Websites using SSR pre-render their pages on the server, sending fully constructed HTML pages to the client.

- Site crawlers can access this HTML directly, without needing to execute JavaScript.
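
When a page is server-rendered, a plain HTML parser is enough. The following sketch uses Python’s standard `html.parser` to pull the text of elements with a given class; the class name `product-title` in the usage note is a made-up example, and the extractor deliberately ignores nesting of the same tag to stay short.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collects the text of every `tag` element carrying a given CSS class."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.results = []
        self._buffer = None  # list of text chunks while inside a match

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes and self._buffer is None:
            self._buffer = []

    def handle_endtag(self, tag):
        # Simplification: the first closing `tag` ends the match.
        if tag == self.tag and self._buffer is not None:
            self.results.append(" ".join("".join(self._buffer).split()))
            self._buffer = None

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)


def extract_texts(html, tag, css_class):
    """Return the whitespace-normalized text of each matching element."""
    parser = ClassTextExtractor(tag, css_class)
    parser.feed(html)
    parser.close()
    return parser.results
```

For instance, `extract_texts(html, "h2", "product-title")` would return the product names from a server-rendered listing page without any JavaScript execution.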

Benefits:

- Simplicity: Eliminates the need for the crawler to execute JavaScript, making the crawling process more straightforward.

- Performance: Reduces computational overhead on the crawler, potentially speeding up the crawl.

Hybrid Crawling Approach

A hybrid approach combines traditional crawling methods with JavaScript execution capabilities, using both static and dynamic content extraction techniques. This method is particularly useful for websites that contain a mix of static and dynamically loaded content.

How It Works:

- The crawler first attempts to extract as much information as possible using traditional methods.

- For elements that require JavaScript execution, the crawler switches to using a headless browser or JavaScript-aware module to load and extract the remaining data.
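
The two steps above can be sketched as a small fallback function. All five parameters are hypothetical callables supplied by the caller: `fetch_static` and `fetch_rendered` return HTML for a URL, `extract` turns HTML into data, and `is_complete` decides whether the static result is good enough.

```python
def crawl_hybrid(url, fetch_static, fetch_rendered, extract, is_complete):
    """Try the cheap static path first; render with a browser only when needed.

    Returns a (data, method) tuple where method is "static" or "rendered".
    """
    data = extract(fetch_static(url))
    if is_complete(data):
        return data, "static"
    # The static HTML was missing something, so pay for JavaScript execution.
    return extract(fetch_rendered(url)), "rendered"
```

Returning the method alongside the data makes it easy to measure how often the expensive rendered path is actually needed.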

Benefits:

- Versatility: Capable of handling a wide range of websites, from fully static to heavily dynamic ones.

- Cost-Effective: Optimizes crawling efficiency by reducing the need for resource-intensive JavaScript execution for every page.

Best Practices for Crawling JavaScript-Heavy Websites

When implementing these techniques, it’s essential to follow best practices to ensure successful and efficient crawling:

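- Implement Proper Delays: Wait for all dynamic content to load before extracting data. Where possible, wait for a specific condition, such as a target element appearing or network activity settling, rather than a fixed delay, since a fixed delay tends to be too short on slow pages and wasteful on fast ones. This practice helps avoid missing critical information that loads after the initial page render.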

- Handle AJAX Requests: For websites that use AJAX to load content, ensure your crawler can detect and handle these requests effectively, either by waiting for them to complete or by monitoring network activity.

- Monitor and Adjust for Website Changes: JavaScript-heavy websites frequently update their structure and loading behavior. Regularly monitor the websites you crawl and adjust your strategy as needed to accommodate these changes.

- Respect Website Policies: Always respect the website’s robots.txt file and terms of service. Implement rate limiting to avoid overwhelming the site’s server, and ensure your crawling practices are compliant with legal regulations.
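
The last practice can be sketched with Python’s standard `urllib.robotparser` plus a simple per-host delay. The class `PoliteScheduler`, the helper names, and the `example-crawler` user agent are assumptions for this example; it also assumes the robots.txt text has already been downloaded.

```python
import time
import urllib.robotparser


def build_robots(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteScheduler:
    """Enforces a minimum gap, in seconds, between requests to one host."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait_turn(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def polite_urls(urls, robots, scheduler, user_agent="example-crawler"):
    """Yield only URLs that robots.txt allows, pausing between them."""
    for url in urls:
        if robots.can_fetch(user_agent, url):
            scheduler.wait_turn()
            yield url
```

The clock and sleep functions are injectable so the pacing logic can be checked without real waiting. A production crawler would typically keep one scheduler per host.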

Why Choose PromptCloud for Crawling Websites?

Crawling JavaScript-heavy websites requires specialized knowledge and tools. At PromptCloud, we offer advanced web crawling and data extraction services tailored to meet your specific needs. Our team of experts utilizes the latest techniques, such as headless browsers and JavaScript-aware crawlers, to ensure comprehensive and accurate data collection from even the most complex websites.

Whether you need to scrape e-commerce data, monitor competitor websites, or collect large-scale web data for research, PromptCloud has the expertise and technology to deliver high-quality results. Let us help you navigate the complexities of modern web crawling and unlock the full potential of your data-driven initiatives.

Conclusion

Crawling JavaScript-heavy websites becomes manageable with the right techniques and tools in place. By leveraging advanced methods like headless browsers, JavaScript-aware crawlers, and hybrid approaches, businesses can capture the data they need, even from the most complex websites. Implementing these strategies will not only enhance your data collection capabilities but also give you a competitive edge in today’s fast-paced digital environment.

If you’re ready to take your web crawling to the next level, PromptCloud is here to help. Contact us today to learn more about our tailored crawling solutions and how we can support your business in achieving its data goals.