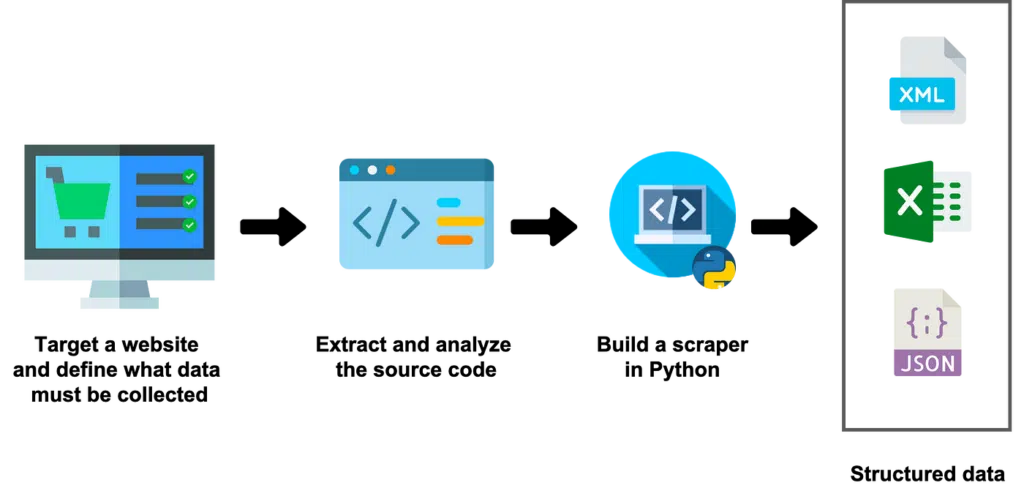

For those looking to harness the power of web data, BeautifulSoup stands out as a powerful and intuitive tool. This Python library is designed for web scraping purposes, allowing you to extract specific information from web pages easily. This guide will walk you through creating a web scraper using BeautifulSoup, process to web scrape with beautifulsoup, from setting up your environment to parsing and extracting the data you need.

Introduction to Web Scraping with BeautifulSoup

Source: https://medium.datadriveninvestor.com/introduction-to-scraping-in-python-with-beautifulsoup-and-requests-ab7b1c9bc113?gi=49f3217037d7

Web scraping is the process of programmatically collecting information from the World Wide Web. It’s a valuable technique used in data mining, information gathering, and automation tasks. BeautifulSoup, paired with Python’s requests library, provides a straightforward approach to web scraping, making it accessible to programmers of all levels. Web Scrape with BeautifulSoup involves various prerequisites.

Prerequisites

Before diving into BeautifulSoup, ensure you have the following prerequisites covered:

- Basic knowledge of Python programming.

- Python installed on your system.

- Familiarity with HTML and the structure of web pages.

Setting Up Your Environment

- Install Python: Make sure Python is installed on your system. Python 3 is recommended for its improved features and support.

- Install BeautifulSoup and Requests: Open your command line or terminal and install the necessary libraries using pip, Python’s package installer. Run the following commands:

pip install beautifulsoup4

pip install requests

Creating Your First Web Scraper

Creating your first web scraper with BeautifulSoup is an exciting step into the world of data extraction from the web. This guide will walk you through the basics of setting up a simple scraper using Python and BeautifulSoup to fetch and parse web content efficiently. We’ll scrape quotes from “http://quotes.toscrape.com”, a website designed for practicing web scraping skills.

Step 1: Setting Up Your Python Environment

Ensure Python is installed on your computer. You’ll also need two key libraries: requests for making HTTP requests to obtain web pages, and beautifulsoup4 for parsing HTML content.

If you haven’t installed these libraries yet, you can do so using pip, Python’s package installer. Open your terminal or command prompt and execute the following commands:

pip install beautifulsoup4

pip install requests

Step 2: Fetch the Web Page

To web scrape with BeautifulSoup, begin by writing a Python script to fetch the HTML content of the page you intend to scrape. In this case, we’ll fetch quotes from “http://quotes.toscrape.com”.

import requests

# URL of the website you want to scrape

url = ‘http://quotes.toscrape.com’

# Use the requests library to get the content of the website

response = requests.get(url)

# Ensure the request was successful

if response.status_code == 200:

print(“Web page fetched successfully!”)

else:

print(“Failed to fetch web page.”)

Step 3: Parse the HTML Content with BeautifulSoup

Once you’ve fetched the web page, the next step is to parse its HTML content. BeautifulSoup makes this task straightforward. Create a BeautifulSoup object and use it to parse the response text.

from bs4 import BeautifulSoup

# Create a BeautifulSoup object and specify the parser

soup = BeautifulSoup(response.text, ‘html.parser’)

# Print out the prettified HTML to see the structure

print(soup.prettify())

Step 4: Extracting Data from the HTML

Now that you have the HTML parsed, you can start extracting the data you’re interested in. Let’s extract all the quotes from the page.

# Find all the <span> elements with class ‘text’ and iterate over them

quotes = soup.find_all(‘span’, class_=’text’)

for quote in quotes:

# Print the text content of each <span>

print(quote.text)

This snippet finds all <span> elements with the class text—which contain the quotes on the page—and prints their text content.

Step 5: Going Further

You can also extract other information, like the authors of the quotes:

# Find all the <small> elements with class ‘author’

authors = soup.find_all(‘small’, class_=’author’)

for author in authors:

# Print the text content of each <small>, which contains the author’s name

print(author.text)

This will print out the name of each author corresponding to the quotes you’ve extracted.

Best Practices and Considerations

- Respect Robots.txt: Always check the robots.txt file of a website (e.g., http://quotes.toscrape.com/robots.txt) before scraping. It tells you the website’s scraping policy.

- Handle Exceptions: Ensure your code gracefully handles network errors or invalid responses.

- Rate Limiting: Be mindful of the number of requests you send to a website to avoid being blocked.

- Legal Considerations: Be aware of the legal implications of web scraping and ensure your activities comply with relevant laws and website terms of service.

Conclusion

BeautifulSoup, with its simplicity and power, opens up a world of possibilities for data extraction from the web. Whether you’re gathering data for analysis, monitoring websites for changes, or automating tasks, web scraping with BeautifulSoup is an invaluable skill in your programming toolkit. As you embark on your web scraping journey, remember to scrape responsibly and ethically, respecting the websites you interact with. Happy scraping!

Check out the other articles that will be helpful:

Web Scraping Guide With Python Using Beautiful Soup

The Ultimate Guide to Scrape the Web: Techniques, Tools, and Best Practices

Web Scraping with Python