As advanced as your model might be, it can only be as good as the data it is trained on. A messy, unstructured, or inconsistent dataset means that the algorithm will generate inaccurate, biased, or unreliable outputs. This is why data parsing is indispensable.

“Garbage in, garbage out” is a principle that fits AI workflows rather well. Offering unprocessed data to machine learning models is similar to asking someone to write a book in a completely alien tongue. Structuring and preparing the information so that machines can interpret, learn, and take actions based on it is crucial. Data parsing is the step that helps transform raw data into a format that can be worked with.

As highlighted in a 2023 MIT Sloan report, data scientists spend approximately 80% of their working hours in the preliminary phases of data checking and cleaning before model construction. That is an astonishingly large portion of time, which indicates the critical resource that data preparation—especially parsing—is.

In this article, we will explore data parsing in detail, its importance to machine learning, the challenges you are likely to encounter, and effective methods of parsing data from various sources.

What is Data Parsing?

Image Source: Nanonets

Data parsing refers to the transformation of raw data into a usable format that can be comprehended by machines—a structured one. Many data sources, such as web pages, APIs, and spreadsheets, are untidy. They contain characters or tags that are either invalid or formatted inconsistently. Parsing identifies patterns to split the data into useful components and clean it up.

For example, if you’re working with an HTML page, a parser can extract just the product names or prices you need. If you’re dealing with a JSON file, parsing can turn nested values into a flat structure suitable for model training.

The goal is to parse data in a way that makes it ready for analysis or input into machine learning models. Without parsing, raw data stays chaotic and difficult to process. Parsing acts like a translator, turning human-created formats into machine-ready inputs.

Why Data Parsing is Essential for AI and Machine Learning

The efficiency of AI and machine learning models heavily relies on previously acquired information known as data. If the data is not organized or is inconsistent, models will prove incapable of recognizing perceptible patterns. For that reason, data parsing is critical: it transforms disorganized inputs into clean, structured datasets.

When set in poorly formatted data, models will never perform well. Poor-quality data will produce skewed outputs, unreliable results, and even lead to biased marketing decisions. Even the slightest inconsistency in formatting, such as dates and fields, which in other scenarios could be categorized as optional, may halt the progress of an entire training framework.

When you parse data properly, you reduce noise and remove unnecessary clutter. With parsed data, standardization of inputs, feature labeling, and consistency of datasets is achieved. With these measures in place, the machine learning pipeline’s accuracy and speed will increase exponentially.

In short, without effective parsing, you risk wasting time training models on flawed data—and the results will reflect that.

Common Challenges in Data Parsing for AI and Machine Learning

Image Source: DocSumo

When it comes to AI, data parsing can be particularly challenging if the data is raw, unstructured, or branched out into different data sources. Here are some of the most common challenges teams face while trying to parse data in machine learning:

1. Unstructured and Noisy Data

Many useful forms of data, such as scraped web content, social media posts, and customer reviews, are unstructured. This makes it extremely difficult to ascertain useful patterns or fields without a lot of heavy pre-processing. Noise like extra punctuation, HTML tags, or irrelevant text can confuse the parser and reduce model accuracy.

2. Inconsistent Formats

Data that comes from structured files such as CSV or JSON contain some fundamental gaps. For example, in one row, a date might be formatted as “01-04-2024” and as “April 1, 2024” in another. There are oftentimes gaps where fields are absent, misaligned or displaced, or utilize differing units of measurement. These small issues often break parsing logic or lead to incorrect data mappings.

3. Encoding and Language Issues

Parsing data that involves delicate languages with special symbols or emojis is complex. With a lack of proper encoding like UTF-8, your parser may completely break or generate random gibberish. This is particularly problematic with global datasets or multilingual sources.

4. Schema Variations Across Sources

Merging data from APIs, webpages, and databases poses a challenge since the schema or data structure of the information tends to differ from one source to another. The names of the fields and the type of data contained within them can differ, and/or the nesting structure could be different, so specific aligning protocols have to be made with preset transformations to properly align everything before training the model.

5. Frequent Data Structure Changes

Websites and APIs often update their formats. A parser that works today might break tomorrow if a layout or endpoint changes. This makes it hard to maintain reliable data flows without ongoing monitoring and flexible parsing logic.

6. Scalability and Performance Bottlenecks

The volume of data greatly impacts the efficiency and speed of the parsing process. Handling millions of pages or rows and rows of data requires a certain standard of code and the use of parallel processing, memory management, and other forms of optimization. If not handled properly, parsing large datasets can become time intensive, which could shut down the pipeline.

These challenges explain why data parsing is not a solvable problem, it is a continuous process to fortify the structure and scale of an AI system.

Methods and Tools to Parse Data for AI Models

To build high-performing AI models, having the right tools to parse data efficiently is essential. Whether you’re handling text, tables, or API responses, these tools help convert raw information into structured, machine-readable formats.

Here are some widely used methods and tools for data parsing in AI and machine learning:

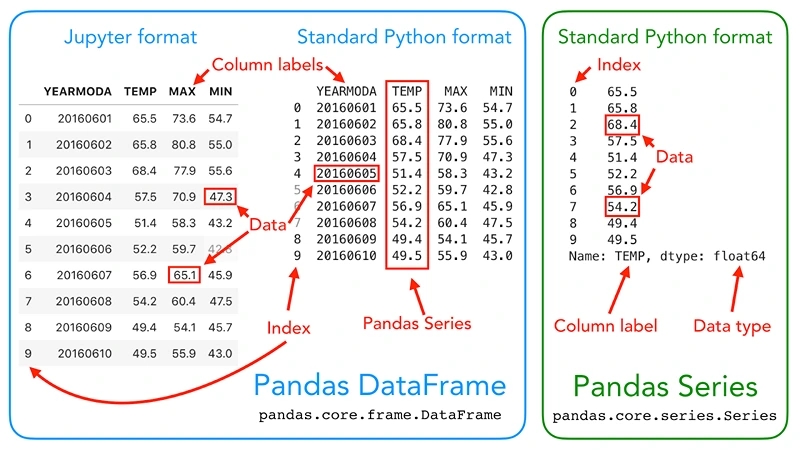

1. Pandas

Image Source: GitHub

Handling structured data is made easy, thanks to the Python library Pandas. Items such as CVS, Excel, and JSON can all be read, parsed and manipulated by Pandas. It becomes ready to be used by models after it has been cleaned, values dealt with, and reshaped. Mounds of raw files can be made into various forms with the help of numerous functions. With the countless options, parsing and transforming files becomes fast and intuitive; an example of such functions is todataframe() and readcsv().

2. BeautifulSoup

HTML and XML files can be parsed by this tool, BeautifulSoup, which is why it is used so widely. When gathering information from a web page, banners, product names, reviews, and many other sentences can be collected easily from tags or CSS selectors. This works great when you want to perform web scraping alongside AI pipelines and need real-time data to be processed.

3. Regular Expressions (RegEx)

Finding and extracting unstructured text becomes simple with the help of RegEx. Be it simply email addresses, phone numbers, or keywords, with regular expressions, extraction is very simple. It is commonly used for extracting information from logs or any documents provided by the user.

4. OpenRefine

OpenRefine is one of the most powerful open-source applications specially designed for cleaning dirty datasets. It identifies duplicates, fixes inconsistent records, and alters values spread throughout multiple files. Though it doesn’t fall under a coding tool, it’s extremely useful for data cleansing in non-automated workflows.

5. Automated Parsing Tools & APIs

PromptCloud is one of many that offers parsing data to be automated for users who need it. Their products are tailored to the retrieval of large amounts of data from web pages or APIs, delivering clean, structured data in JSON, XML, or CSV. With constantly changing sources, this allows set-up time to be improved drastically while also enhancing precision.

6. NLTK and SpaCy (for text data)

If your dataset consists of elements like reviews or support tickets, which involve free-form text, then NLP libraries such as NLTK and SpaCy are very useful to have on hand. These libraries assist with tokenization, entity recognition, and other forms of meaning extraction from text. The resulting structured data can subsequently be utilized for tasks like sentiment analysis, classification, or the training of chatbots.

Each of these tools has a specific use case, and often, they’re used together in a pipeline. Choosing the right method depends on your data source, volume, and the kind of structure you want to achieve.

How to Parse Data from Different Sources: APIs, Web, Files, & More

Image Source: Nimble

In AI and machine learning workflows, data doesn’t always come from one neat place. It often arrives in chunks—from APIs, web scraping tools, databases, or file uploads. Knowing how to parse data from these different sources is key to building a reliable data pipeline.

Let’s walk through some of the most common data sources and how parsing works for each.

1. Parsing Data from APIs

APIs are one of the cleanest sources of machine-readable data, usually delivered in structured formats like JSON or XML. However, even API data needs parsing.

For example, if you’re collecting product data from an e-commerce API, each product might include nested fields—like category, price, ratings, and descriptions. Using Python, you can parse this JSON structure with the json module or directly into a DataFrame using pandas.json_normalize().

The challenge here is handling authentication, rate limits, and structural changes over time. Parsers must also normalize fields if APIs from different sources are being combined.

2. Parsing Web Data (Scraped HTML)

Web scraping pulls unstructured HTML data from websites. To parse data from web pages, tools like BeautifulSoup or lxml are used. These libraries help navigate the HTML tree and extract specific elements based on tags, classes, or CSS selectors.

Web data is often messy—filled with ads, layout changes, and inconsistent formatting. So, the parsed output must be cleaned and standardized before it can be used for training ML models.

PromptCloud, for instance, provides web data that’s already pre-parsed and cleaned, making this process faster and more reliable for AI teams.

3. Parsing Data from CSV and Excel Files

CSV and Excel files are popular in business settings, but they’re not always clean. Columns may be misaligned, contain mixed data types, or lack headers.

Libraries like Pandas make it easy to parse data in Excel or CSV using read_csv() or read_excel(). From there, rows and columns can be filtered, renamed, or reshaped for analysis. You’ll also need to check for empty cells, string encoding, and duplicate values.

Many users ask how to parse data in Excel when integrating human-generated datasets into AI pipelines. Tools like OpenPyXL or Pandas work well for this.

4. Parsing Data from Databases

SQL and NoSQL databases often serve as long-term storage for transactional or user data. Parsing from these sources involves querying and transforming the results into flat tables that your model can digest.

Python’s sqlite3, sqlalchemy, or pymongo for MongoDB are common tools. After fetching the data, it’s usually parsed into a DataFrame and cleaned for outliers, nulls, or inconsistent formats.

5. Parsing JSON, XML, and Other Structured Files

Many enterprise data flows rely on files in structured formats like JSON or XML. These formats are readable by machines but often need flattening or transformation for AI use.

To parse JSON, Python’s built-in JSON module or Pandas can convert deeply nested objects into structured tables. XML requires libraries like XML. etree.ElementTree to traverse nodes and extract values.

The key is ensuring consistency, especially when parsing large batches of files where structures can subtly vary.

Data Parsing for Feature Engineering in Machine Learning

Once data is parsed and cleaned, the next crucial step is turning it into features that machine learning models can learn from. This process, called feature engineering, is where parsed data becomes truly valuable.

Parsed data lays the groundwork for feature extraction. For example, if you’ve parsed review data from an e-commerce site, you might turn the text into sentiment scores, extract keywords, or calculate review lengths. Each of these becomes a potential input feature for a recommendation model or sentiment classifier.

Let’s say you’ve parsed a column of dates from a CSV file. On its own, a date isn’t useful to a model—but once parsed, it can be split into features like day of the week, month, or whether it falls on a holiday. Similarly, parsing product prices lets you create features like discount percentage, price tier, or price change over time.

When your data is parsed well, this transformation stage becomes easier and more accurate. Poorly parsed or inconsistent data, on the other hand, leads to flawed features and unreliable model outcomes.

Text data, in particular, benefits from proper parsing. NLP pipelines depend on tokenized, cleaned, and structured inputs. Without that, you risk feeding garbage into your model—what many in the field refer to as “garbage in, garbage out.”

In short, parsing data isn’t just about formatting. It’s about preparing the raw material your models will learn from. And the cleaner your parsed data, the smarter your models will be.

Streamlining AI Data Parsing with PromptCloud

Clean, well-parsed data is the unsung hero behind every powerful AI model. Whether you’re training a natural language processor, a recommendation engine, or a predictive analytics system, the quality of your input data makes or breaks performance. That’s why data parsing is so important—not just as a technical step but as a strategic part of your AI pipeline.

In this article, we explored how parsing transforms raw, messy data into structured inputs that machine learning models can understand. We covered common challenges like inconsistent formats, schema mismatches, and unstructured sources. We also looked at how parsed data feeds directly into feature engineering, making your models smarter and more reliable.

But here’s the thing—managing all this parsing at scale isn’t easy. With sources changing constantly, file formats varying wildly, and data volume growing fast, manual efforts quickly fall short.

That’s where PromptCloud comes in.

At PromptCloud, we specialize in large-scale data extraction and parsing from a wide range of sources—websites, APIs, documents, and more. Our solutions are built for AI teams that need reliable, ready-to-use data without spending hours on cleaning and reformatting. Whether you’re building your next LLM or training a domain-specific model, we can help you start with the cleanest version of your data.If you want to spend less time wrangling messy datasets and more time training models, let’s talk. With PromptCloud’s data parsing and delivery infrastructure, your AI models will always have the right data to learn from. Schedule a demo today!