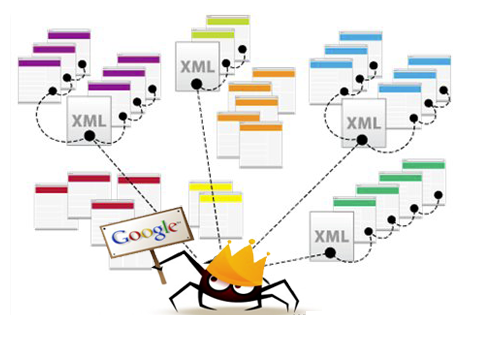

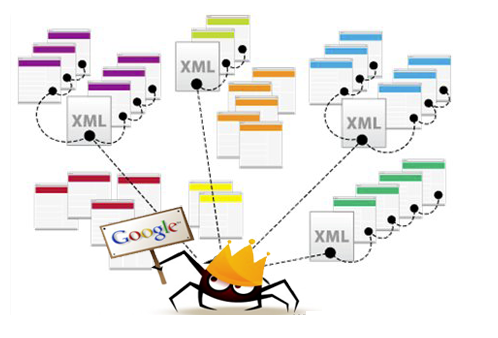

The Internet today is a vast, complex and interconnected infrastructure of data, with new information being uploaded every minute. As more data becomes available, it becomes equally important to find and index the data that is relevant to our needs, and that is the job of web crawlers. From Archie to AltaVista to Google’s bots, crawlers have been around since the inception of the World Wide Web and have helped store and index useful data spread across layers of text, pages and URLs.

As the internet has evolved, along with the protocols that run between users and web pages, the platforms involved and the rules of data encryption, demand has grown for fast, continually evolving web crawlers that can cover all forms of data frequently and with good quality. Early web crawlers focused on simply traversing URLs outward from a set of seed links and downloading the relevant information. Over the last decade, however, crawlers have evolved from simple spiders or bots into multitasking, multipurpose systems that cover a much wider set of databases and information platforms. This second generation of web crawlers has changed the game of data analysis and shows strong promise of further growth. Below are some of the game-changing developments in web crawlers from that period.

1. Distributed Crawlers

By 2003, the World Wide Web saw a series of distributed, multi-threaded crawlers that could crawl millions of pages in a short time. These crawlers ran on many computers, each contributing its own spare resources and bandwidth to crawl the pages assigned to it, thereby saving the cost and trouble of maintaining large, centralized servers.

LookSmart was one such search engine: it used the resources of volunteers’ computers to crawl pages and deliver results faster.
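One common way to split crawl work across many machines is to assign each URL to a worker based on a hash of its host name, so that all pages from one site land on the same machine. The sketch below is only an illustration of that idea, not a description of how LookSmart or any particular crawler worked; the function names `assign_worker` and `partition` and the example URLs are assumptions made for this example.

```python
import hashlib
from collections import defaultdict
from urllib.parse import urlsplit


def assign_worker(url: str, num_workers: int) -> int:
    """Map a URL to a worker index by hashing its host name.

    Hypothetical helper: hashing the host (not the full URL) keeps
    every page of one site on the same worker.
    """
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers


def partition(urls, num_workers):
    """Group URLs into per-worker lists, keyed by worker index."""
    buckets = defaultdict(list)
    for url in urls:
        buckets[assign_worker(url, num_workers)].append(url)
    return dict(buckets)


# Example URLs are placeholders chosen for illustration.
urls = [
    "http://example.com/a",
    "http://example.com/b",
    "http://example.org/index.html",
    "http://example.net/news",
]
for worker, assigned in sorted(partition(urls, 4).items()):
    print(worker, assigned)
```

Hashing by host rather than by full URL is a design choice: it makes per-site politeness limits easy to enforce on a single worker, at the cost of uneven load when one site is much larger than the others.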

2. Heritrix Crawler

In 2004, the Internet Archive released Heritrix, a crawler intended specifically for archiving websites and designed to support multiple use cases, including both focused and broad crawls. The Internet Archive had recognized the need to preserve the digital artifacts of our culture for future use and knowledge. Because Java was among the most widely used high-level, object-oriented languages at the time, it was chosen as the core programming language for Heritrix version 1.0.0. Project information and documentation for Heritrix can be found at https://crawler.archive.org.

3. Ajax Crawler or Crawljax

With the advent of client-side technologies by 2007, there was a large shift towards client-side computing: using AJAX, the same page could show different content on different clients, depending on the computations run in each browser. It therefore became difficult for web crawlers to crawl and index such Rich Internet Applications, whose data is deep or hidden. In 2008, an AJAX crawler called Crawljax was launched to crawl Rich Internet Applications and index them using a breadth-first search algorithm.
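To make the breadth-first idea concrete, the sketch below explores a small application as a graph of UI states, visiting every state reachable in one step before moving on to states two steps away. It is a minimal illustration and not Crawljax's actual implementation; the `crawl_states` function, the `max_states` limit and the state names are all assumptions made for this example, and a real AJAX crawler would discover states by driving a browser rather than reading a dictionary.

```python
from collections import deque


def crawl_states(start, transitions, max_states=100):
    """Visit UI states breadth-first and return them in visit order.

    `transitions` maps a state to the states reachable from it by one
    user action (a hypothetical stand-in for clicking in a browser).
    """
    seen = {start}
    order = []
    queue = deque([start])
    while queue and len(order) < max_states:
        state = queue.popleft()
        order.append(state)
        for nxt in transitions.get(state, []):
            if nxt not in seen:  # skip states that are already queued
                seen.add(nxt)
                queue.append(nxt)
    return order


# Hypothetical state graph of a small single-page application.
app = {
    "home": ["menu_open", "search_results"],
    "menu_open": ["settings", "home"],
    "search_results": ["item_detail"],
    "item_detail": [],
    "settings": [],
}

print(crawl_states("home", app))
# ['home', 'menu_open', 'search_results', 'settings', 'item_detail']
```

The `seen` set is what keeps the crawl from looping when an action leads back to an earlier state, as `menu_open` does with `home` here.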

4. Web Crawler in Mobile Systems

With recent advances in internet technology and easy access via mobile phones, internet usage has shifted massively towards the mobile space. Mobile devices use a variety of browsers to access the internet, such as Opera, Polaris, Kindle Basic Web and Safari, so there is considerable scope for mobile web crawlers in the industry today. Googlebot-Mobile and Bingbot Mobile, launched in 2014, are the two major mobile web crawlers catering to the web crawling demands of smartphone users. With heavy traffic being generated by mobile commerce and mobile e-learning solutions, there is still strong demand for more developed and advanced mobile-compatible crawlers.

More adaptive and capable mobile crawlers, once developed, are therefore likely to be in high demand.