Python is one of the easiest and most user-friendly programming languages to write. Factor analysis is a popular method for discovering the underlying factors, or latent variables, in data, and Python has good tooling for it. What do I mean by latent variables? Let's find out. Say we have a dataset with 6 variables:

- Age

- Weight

- Height

- Salary

- Bank Balance

- Number of Credit Cards

And we have collected these 6 variables for 1000 users. Now, after thorough analysis, we find that there are two latent, or hidden, variables, each of which is linked to some of the variables listed here.

The figure above gives a good understanding of how factor analysis helps find hidden variables. In this specific example, I chose variables that one can easily separate and analyze, but when you have a dataset with headings like x1, x2, y1, y2, z1, z2, you will need the proper tools to get this job done. When it comes to exploratory factor analysis, which is by far the most popular factor analysis approach among researchers, the basic assumption made at the very beginning is that every variable at hand is directly associated with a factor.
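To make the idea of a latent variable concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the two hidden variables (`physical` and `financial`), the way the six variables are split between them, the loading of 0.8, and the use of standardized scores instead of real units. None of it comes from a real dataset.

```python
import numpy as np

rng = np.random.default_rng(0)
n_users = 1000

# Two hypothetical latent variables (assumed for this illustration only).
physical = rng.standard_normal(n_users)
financial = rng.standard_normal(n_users)


def observed(latent, loading=0.8):
    """Standardized observed variable: loading * latent + independent noise."""
    noise = rng.standard_normal(n_users)
    return loading * latent + np.sqrt(1 - loading**2) * noise


names = ["Age", "Weight", "Height", "Salary", "Bank Balance", "Number of Credit Cards"]
# Assumed split: the first three variables follow `physical`, the last three `financial`.
data = np.column_stack(
    [observed(physical) for _ in range(3)] + [observed(financial) for _ in range(3)]
)

# Variables that share a latent variable correlate with each other,
# while variables from different groups are close to uncorrelated.
corr = np.corrcoef(data, rowvar=False)
print(names)
print(np.round(corr, 2))
```

The block structure of the printed correlation matrix is the footprint that factor analysis looks for: it only ever sees the six columns, never the two hidden ones.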

What Is The Aim Of Factor Analysis?

The main aim of factor analysis is to reduce the number of variables by extracting a smaller set of unobservable variables. The new variables make it easier for a market researcher or a statistician to complete their study, and also make the data more consumable. This conversion of

Observable variables → Unobservable variables

needs to be done through Factor Extraction followed by Factor Rotation.

In Factor Extraction, we decide the number of factors that we want and how we want to extract them. This is done using variance partitioning methods, of which common factor analysis and principal components analysis are the most widely used. Next comes Factor Rotation, which is done to reduce the complexity of the solution and make it easier to interpret.
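The two stages can be sketched on a toy example. This is not how any particular library implements them: the correlation matrix is made up, the extraction uses the principal components method, and the rotation is a brute-force varimax search that only works for two factors (real implementations use an iterative algorithm). All function names are my own.

```python
import numpy as np

# Hypothetical correlation matrix for four variables that form two groups.
corr = np.array([
    [1.0, 0.7, 0.3, 0.3],
    [0.7, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.5],
    [0.3, 0.3, 0.5, 1.0],
])


def extract_loadings(corr, n_factors):
    """Principal components extraction: eigenvectors scaled by sqrt(eigenvalue)."""
    eigenvalues, eigenvectors = np.linalg.eigh(corr)   # eigenvalues in ascending order
    top = np.argsort(eigenvalues)[::-1][:n_factors]    # indices of the largest ones
    return eigenvectors[:, top] * np.sqrt(eigenvalues[top])


def varimax_criterion(loadings):
    """Sum over factors of the variance of the squared loadings."""
    return np.var(loadings**2, axis=0).sum()


def rotate_two_factors(loadings, n_angles=901):
    """Brute-force varimax for two factors: try rotation angles from 0 to 90 degrees."""
    best_loadings, best_score = loadings, varimax_criterion(loadings)
    for theta in np.linspace(0.0, np.pi / 2, n_angles):
        rotation = np.array([[np.cos(theta), -np.sin(theta)],
                             [np.sin(theta), np.cos(theta)]])
        candidate = loadings @ rotation
        score = varimax_criterion(candidate)
        if score > best_score:
            best_loadings, best_score = candidate, score
    return best_loadings


unrotated = extract_loadings(corr, n_factors=2)
rotated = rotate_two_factors(unrotated)
print("Unrotated loadings:\n", np.round(unrotated, 2))
print("Rotated loadings:\n", np.round(rotated, 2))
```

Before rotation, every variable loads noticeably on both factors. After rotation, each variable loads mainly on one factor, which is the sense in which rotation makes the solution simpler. The rotation does not change how much of each variable the two factors explain together.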

How to find these latent variables using Python?

Python has many third-party libraries that can help with statistical analysis. Today we will be using the factor_analyzer library. We will also use pandas to load our data into a data frame, which makes it easier to work with, and matplotlib to create graphs. One point to note is that certain commands in this code may not print their result directly if you run them as a script in the terminal. I would recommend using Jupyter notebooks, since they help visualize data well.

In the first step, we import the libraries that are required for the task and load our dataset. Once that is done, we will view the columns.
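A minimal sketch of the loading step could look like this. The file name `dataset.csv` and the helper `load_dataset` are placeholders of mine, so point the path at wherever your copy of the data lives.

```python
from pathlib import Path

import pandas as pd

# Hypothetical file name; replace it with the path to your own copy of the dataset.
DATA_PATH = Path("dataset.csv")


def load_dataset(path):
    """Read a CSV file into a pandas DataFrame."""
    return pd.read_csv(path)


# Only load and inspect the data if the file is actually there.
if DATA_PATH.exists():
    df = load_dataset(DATA_PATH)
    print(df.columns)  # view the column names
```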

Once you see the columns, you will get a fair idea of the number of features. You can also use df.head() to get a snapshot of the data.

    Index(['Unnamed: 0', 'A1', 'A2', 'A3', 'A4', 'A5', 'C1', 'C2', 'C3', 'C4',
           'C5', 'E1', 'E2', 'E3', 'E4', 'E5', 'N1', 'N2', 'N3', 'N4', 'N5',
           'O1', 'O2', 'O3', 'O4', 'O5', 'gender', 'education', 'age'],
          dtype='object')

Before we try out an exploratory analysis of the dataset, we need to prepare the data. For this, we will drop the first and the last three columns of the dataset and also remove all rows that contain NaN values. Once the data preparation is complete, we shall view the schema of the data.

    # Steps required for data preparation
    df.drop(["gender", "education", "age"], axis=1, inplace=True)  # drop the last three columns
    df = df.iloc[:, 1:26]  # drop the first column ('Unnamed: 0')
    df = df.dropna()       # remove rows that contain NaN values
    df.info()

Once you have viewed the schema of the data, you can also see the data itself – a few rows of it.

    # View the data
    df.head()

| A1 | A2 | A3 | A4 | A5 | C1 | C2 | C3 | C4 | C5 | E1 | E2 | E3 | E4 | E5 | N1 | N2 | N3 | N4 | N5 | O1 | O2 | O3 | O4 | O5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | 4 | 3 | 4 | 4 | 2 | 3 | 3 | 4 | 4 | 3 | 3 | 3 | 4 | 4 | 3 | 4 | 2 | 2 | 3 | 3 | 6 | 3 | 4 | 3 |
| 2 | 4 | 5 | 2 | 5 | 5 | 4 | 4 | 3 | 4 | 1 | 1 | 6 | 4 | 3 | 3 | 3 | 3 | 5 | 5 | 4 | 2 | 4 | 3 | 3 |
| 5 | 4 | 5 | 4 | 4 | 4 | 5 | 4 | 2 | 5 | 2 | 4 | 4 | 4 | 5 | 4 | 5 | 4 | 2 | 3 | 4 | 2 | 5 | 5 | 2 |
| 4 | 4 | 6 | 5 | 5 | 4 | 4 | 3 | 5 | 5 | 5 | 3 | 4 | 4 | 4 | 2 | 5 | 2 | 4 | 1 | 3 | 3 | 4 | 3 | 5 |
| 2 | 3 | 3 | 4 | 5 | 4 | 4 | 5 | 3 | 2 | 2 | 2 | 5 | 4 | 5 | 2 | 3 | 4 | 4 | 3 | 3 | 3 | 4 | 3 | 3 |

Fig: Output of df.head()

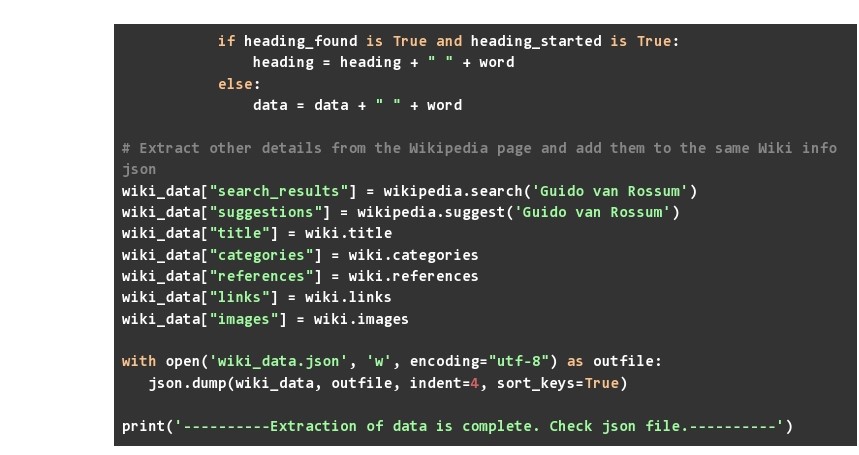

After this, we shall create a FactorAnalyzer object and extract the eigenvalues. These are used to create a scree plot.

    # Imports needed for this step
    import matplotlib.pyplot as plt
    from factor_analyzer import FactorAnalyzer

    # Create a FactorAnalyzer object with 6 factors and varimax rotation
    fa = FactorAnalyzer(n_factors=6, rotation='varimax')
    fa.fit(df)

    # Extract the eigenvalues and create the scree plot
    # (get_eigenvalues returns the original and the common-factor eigenvalues)
    eigen_values, common_factor_eigen_values = fa.get_eigenvalues()
    plt.scatter(range(1, df.shape[1] + 1), eigen_values)
    plt.plot(range(1, df.shape[1] + 1), eigen_values)
    plt.title('Scree Plot')
    plt.xlabel('Factors')
    plt.ylabel('Eigenvalue')
    plt.grid()
    plt.show()

From the scree plot, we can see that the number of eigenvalues greater than one is 6. Hence our guess of setting n_factors to 6 looks reasonable. In case of a mismatch, we could have reinitialized the FactorAnalyzer object with a different value before continuing to the next step. It is still possible that a different value of n_factors performs better, but we shall know that only after generating the loading values.
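The "eigenvalues greater than one" rule can also be checked without a plot. The sketch below assumes that the eigenvalues in question are those of the correlation matrix, and it runs on synthetic data of my own (six variables driven by two hidden factors), not on the dataset used in this article.

```python
import numpy as np

rng = np.random.default_rng(42)
n_rows = 1000

# Synthetic stand-in for real data: six variables driven by two hidden factors.
factors = rng.standard_normal((n_rows, 2))
pattern = np.array([[0.8, 0.0], [0.8, 0.0], [0.8, 0.0],
                    [0.0, 0.8], [0.0, 0.8], [0.0, 0.8]])
noise = rng.standard_normal((n_rows, 6))
data = factors @ pattern.T + 0.6 * noise

# Eigenvalues of the correlation matrix, sorted from largest to smallest.
corr = np.corrcoef(data, rowvar=False)
eigenvalues = np.sort(np.linalg.eigvalsh(corr))[::-1]

# Count how many eigenvalues are greater than one.
n_factors = int((eigenvalues > 1).sum())
print(np.round(eigenvalues, 2))
print("Eigenvalues greater than one:", n_factors)
```

The eigenvalues of a correlation matrix always add up to the number of variables, so an eigenvalue above one marks a factor that accounts for more variance than a single original variable does.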

    import pandas as pd

    # Extract the factor loadings for each variable and convert the 2-D array
    # to a data frame, using the variable names as row labels
    loadings = fa.loadings_
    df_new = pd.DataFrame(loadings, index=df.columns)
    df_new

In this final step, we extracted the factor loadings of each variable on each factor. We converted the resulting 2-D array to a pandas data frame so that it is easier to read.

| | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| A1 | 0.09521974225 | 0.04078315808 | 0.04873388544 | -0.5309873495 | -0.1130573296 | 0.1612163531 |
| A2 | 0.03313127607 | 0.2355380394 | 0.1337143946 | 0.6611409759 | 0.063733787 | -0.006243536373 |
| A3 | -0.009620884158 | 0.3430081731 | 0.1213533673 | 0.6059326946 | 0.03399026531 | 0.1601064273 |
| A4 | -0.08151755875 | 0.2197167204 | 0.2351395315 | 0.4045940388 | -0.125338019 | 0.08635570252 |
| A5 | -0.1496158854 | 0.4144576737 | 0.1063821654 | 0.4696982914 | 0.03097657247 | 0.2365193425 |
| C1 | -0.004358402322 | 0.07724775244 | 0.5545822542 | 0.007510696144 | 0.1901237294 | 0.0950350462 |
| C2 | 0.06833008359 | 0.03837038378 | 0.6745454503 | 0.05705498763 | 0.08759259138 | 0.1527750794 |
| C3 | -0.03999367337 | 0.03186730037 | 0.5511644394 | 0.1012822407 | -0.0113380875 | 0.008996283589 |
| C4 | 0.2162833656 | -0.06624077375 | -0.6384754897 | -0.1026169404 | -0.1438464754 | 0.3183589004 |
| C5 | 0.2841872452 | -0.1808116969 | -0.5448376774 | -0.05995482185 | 0.02583709444 | 0.1324234458 |
| E1 | 0.02227979411 | -0.5904508905 | 0.05391490631 | -0.1308505313 | -0.07120457953 | 0.1565826561 |
| E2 | 0.2336235667 | -0.6845776318 | -0.08849707106 | -0.1167156651 | -0.0455610415 | 0.1150654014 |
| E3 | -0.0008950062665 | 0.5567741796 | 0.1033903473 | 0.1793964806 | 0.2411799037 | 0.2672913156 |
| E4 | -0.1367880762 | 0.6583949072 | 0.113798005 | 0.2411429611 | -0.1078082035 | 0.1585128513 |
| E5 | 0.03448958849 | 0.5075350823 | 0.309812528 | 0.07880428634 | 0.2008213507 | 0.00874730272 |
| N1 | 0.8058059388 | 0.06801130302 | -0.05126378745 | -0.1748493582 | -0.07497711895 | -0.09626617104 |
| N2 | 0.7898316869 | 0.02295829406 | -0.03747686989 | -0.1411344819 | 0.006726461165 | -0.1398226126 |
| N3 | 0.7250812171 | -0.06568693383 | -0.05903943749 | -0.01918381747 | -0.01066355481 | 0.06249533658 |
| N4 | 0.5783188498 | -0.3450723247 | -0.162173861 | 0.0004031249446 | 0.06291648314 | 0.147551243 |
| N5 | 0.523097071 | -0.161675117 | -0.02530497777 | 0.09012479018 | -0.1618919778 | 0.1200494769 |
| O1 | -0.02000401796 | 0.225338562 | 0.1332007995 | 0.005177937857 | 0.4794772167 | 0.2186898349 |
| O2 | 0.1562301084 | -0.001981519764 | -0.08604684937 | 0.04398910669 | -0.4966396744 | 0.1346929729 |
| O3 | 0.01185101513 | 0.3259544824 | 0.09387961097 | 0.07664164686 | 0.5661280477 | 0.2107772204 |
| O4 | 0.2072805716 | -0.1777457135 | -0.005671465969 | 0.1336555723 | 0.3492271365 | 0.178068367 |
| O5 | 0.06323436646 | -0.01422106258 | -0.04705922852 | -0.05756077769 | -0.576742635 | 0.1359358722 |

Fig: Output of fa.loadings_

What do the results tell us?

So you got a 2-D array of 25 rows and 6 columns as the final output, one row per variable and one column per factor. What does that mean? If you take a closer look at the data, you will spot that specific factors have high loading values for specific variables.

| Factor | Variables with high loadings |
|---|---|
| Factor 0 | N1, N2, N3, N4, N5 |
| Factor 1 | E1, E2, E3, E4, E5 |
| Factor 2 | C1, C2, C3, C4, C5 |
| Factor 3 | A1, A2, A3, A4, A5 |
| Factor 4 | O1, O2, O3, O4, O5 |
| Factor 5 | No high loading value for any variable. |
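This kind of grouping can also be derived in code instead of by eye. The sketch below uses one row per group from the loadings table above, rounded to three decimals, and assigns each variable to the factor on which its absolute loading is largest. The cut-off of 0.4 for calling a loading "high" is my assumption, not a fixed rule.

```python
import numpy as np

variables = ["A1", "C1", "E1", "N1", "O1"]
# Loadings copied from the table above, rounded to three decimals.
loadings = np.array([
    [0.095, 0.041, 0.049, -0.531, -0.113, 0.161],    # A1
    [-0.004, 0.077, 0.555, 0.008, 0.190, 0.095],     # C1
    [0.022, -0.590, 0.054, -0.131, -0.071, 0.157],   # E1
    [0.806, 0.068, -0.051, -0.175, -0.075, -0.096],  # N1
    [-0.020, 0.225, 0.133, 0.005, 0.479, 0.219],     # O1
])
THRESHOLD = 0.4  # assumed cut-off for a "high" loading

# Start with an empty list of variables for every factor.
groups = {factor: [] for factor in range(loadings.shape[1])}
for name, row in zip(variables, loadings):
    factor = int(np.abs(row).argmax())   # factor with the largest absolute loading
    if abs(row[factor]) >= THRESHOLD:
        groups[factor].append(name)

for factor, names in groups.items():
    print(f"Factor {factor}: {names or 'no high loading'}")
```

The sign of a loading only tells you the direction of the relationship, which is why the comparison uses absolute values.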

So in a way, our estimate missed the mark, and our results will be better if redone with just 5 factors instead of 6. You can try that out on your system and share the results!