URL Crawlers: The Unsung Heroes of Big Data

Image Source: Investopedia

Imagine trying to gather information from the entire internet by manually searching and copying data from websites. It’s a daunting task, to say the least. That’s where URL crawlers come in. These tools automate the process of extracting data from the web and can scan millions of web pages for relevant information far faster than any manual effort.

But what exactly do URL crawlers do? Essentially, they follow links from one webpage to another, indexing and storing data along the way.

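Following links in this way is called web crawling, and extracting specific data from the retrieved pages is called web scraping or data scraping. Together, they allow companies and organizations to collect large amounts of structured data from the unstructured chaos of the internet.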

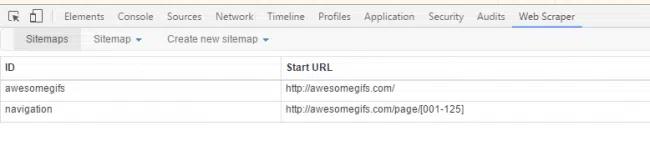

The Anatomy of a URL Crawler

Image Source: ResearchGate

So, how does a URL crawler work its magic? Here’s a simplified breakdown of the steps involved:

- Seeding: The crawler starts by identifying a set of seed URLs – typically a list of known websites or pages related to the desired data.

- Crawling: The crawler follows links from the seed URLs, retrieving the contents of each page and storing them in a database or file system.

- Parsing: The crawler then analyzes the HTML structure of each page, extracting relevant data elements such as text, images, videos, and other media.

- Filtering: To avoid irrelevant or duplicate data, the crawler applies filters to remove unnecessary information and focus on the specific data points needed for analysis.

- Transforming: Finally, the crawler transforms the raw data into a usable format, such as JSON or CSV, making it ready for further analysis and processing.
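The five steps above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not a production crawler: the `PageParser` class, the `crawl` function, the `allowed_prefix` parameter, and the in-memory `PAGES` site are all hypothetical names invented for this example. The `fetch` argument stands in for a real HTTP client so that the sketch runs without network access.

```python
import json
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class PageParser(HTMLParser):
    """Parsing step: collect the page title and outgoing links."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl(seeds, fetch, allowed_prefix, max_pages=100):
    """Breadth-first crawl starting from `seeds`; `fetch(url)` returns HTML or None."""
    frontier = deque(seeds)  # Seeding: the queue starts with the seed URLs
    seen = set(seeds)
    records = []
    while frontier and len(records) < max_pages:
        url = frontier.popleft()
        html = fetch(url)  # Crawling: retrieve the page contents
        if html is None:
            continue
        parser = PageParser()
        parser.feed(html)  # Parsing: extract the title and links
        records.append({"url": url, "title": parser.title.strip()})
        for href in parser.links:
            # Resolve relative links and drop "#fragment" suffixes
            link, _fragment = urldefrag(urljoin(url, href))
            # Filtering: skip duplicates and URLs outside the target site
            if link.startswith(allowed_prefix) and link not in seen:
                seen.add(link)
                frontier.append(link)
    return records


# Hypothetical in-memory "website" used in place of real HTTP requests
PAGES = {
    "https://example.com/": '<title>Home</title><a href="/a">A</a>'
                            '<a href="https://other.test/">Elsewhere</a>',
    "https://example.com/a": '<title>Page A</title><a href="/">Home</a>'
                             '<a href="/b#top">B</a>',
    "https://example.com/b": "<title>Page B</title>",
}

records = crawl(["https://example.com/"], PAGES.get, "https://example.com/")
print(json.dumps(records, indent=2))  # Transforming: emit the records as JSON
```

To crawl live pages, `PAGES.get` could be swapped for a function that downloads each URL. A real crawler would also typically need rate limiting and error handling, which this sketch leaves out.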

Big Data Applications Powered by URL Crawlers

Image Source: PromptCloud | What is web crawling

Now that we understand how URL crawlers work, let’s look at some examples of big data applications that rely on their capabilities:

Business Intelligence

Businesses use URL crawlers to gather market research, competitor analysis, customer feedback, and more. By monitoring social media platforms, review sites, and blogs, companies can gain valuable insights into consumer behavior and preferences, helping them make informed decisions about product development, marketing strategies, and pricing.

Artificial Intelligence (AI) and Machine Learning (ML)

URL crawlers provide the foundation for many AI and ML applications by supplying them with vast amounts of training data. For instance, natural language processing algorithms can be trained on massive datasets of text extracted from web pages, enabling chatbots, voice assistants, and language translation systems to understand human language better.

Sentiment Analysis

By analyzing online opinions and sentiment, companies can identify trends, track brand reputation, and assess customer satisfaction. URL crawlers help gather data from various sources, including social media, news outlets, and discussion forums, which is then fed into sentiment analysis models.

Price Comparison and Market Research

E-commerce businesses utilize URL crawlers to monitor prices, product offerings, and availability across different online retailers. This helps them stay competitive, optimize inventory management, and identify new opportunities in the market.
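As a rough illustration of what happens once crawled price data is available, the sketch below picks the cheapest in-stock offer for each product. The `listings` records, their field names, and the `cheapest_in_stock` function are hypothetical; real crawled data would need cleaning and product matching first.

```python
from collections import defaultdict

# Hypothetical records, as a crawler might emit after the transforming step.
# Prices are stored in integer cents to avoid floating-point rounding issues.
listings = [
    {"retailer": "shop-a", "product": "USB-C cable", "price_cents": 999, "in_stock": True},
    {"retailer": "shop-b", "product": "USB-C cable", "price_cents": 849, "in_stock": True},
    {"retailer": "shop-c", "product": "USB-C cable", "price_cents": 799, "in_stock": False},
    {"retailer": "shop-a", "product": "Wireless mouse", "price_cents": 2400, "in_stock": True},
    {"retailer": "shop-b", "product": "Wireless mouse", "price_cents": 2650, "in_stock": True},
]


def cheapest_in_stock(listings):
    """Return the lowest-priced available offer for each product."""
    by_product = defaultdict(list)
    for item in listings:
        if item["in_stock"]:
            by_product[item["product"]].append(item)
    return {
        product: min(offers, key=lambda offer: offer["price_cents"])
        for product, offers in by_product.items()
    }


best = cheapest_in_stock(listings)
for product, offer in best.items():
    print(f"{product}: {offer['retailer']} at {offer['price_cents'] / 100:.2f}")
```

Note that the out-of-stock offer from `shop-c` is ignored even though it has the lowest listed price, which is why availability is worth crawling alongside price.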

Conclusion: Harnessing the Power of URL Crawlers

With the ability to efficiently harvest and organize vast amounts of data from the web, URL crawlers pave the way for businesses to gain valuable insights, improve operations, and drive innovation.

Whether the goal is to analyze customer sentiment, track market trends, or train AI models, URL crawlers are a versatile tool when used well.

PromptCloud has provided web crawling services for a decade, helping businesses of all sizes across different geographies meet their goals. For a free demo, contact us today at sales@promptcloud.com.