Every single day, billions of queries are typed into Google’s search bar. Everything from trends, behaviors, and businesses is revealed by these searches. It is no wonder why Google scraping is so popular in today’s world as companies seek market insight. These data points can be useful for tracking search engine rankings, monitoring competitors, tracking consumers, and forming real-time-based decisions.

To set the stage, here’s a mind-blowing statistic: Google handles more than 99,000 searches every second, according to Internet Live Stats. This translates to more than 8.5 billion daily queries. That’s an astounding amount of data that could ethically be useful for formulating marketing, sales, and product development strategies.

However, it is important to note that scraping Google is not as easy as it sounds. With every technical challenge come legal boundaries and ethical considerations. Businesses able to read the gray in the law can easily gain incentive-free information. In this article, we will discuss all things Google scraping, including its meaning, functions, and responsible ethical use.

What is Google (SERP) Scraping and How Is It Used in Business?

Image Source: APIFY

To start, what is Google scraping? In simple terms, it is the process of retrieving data from Google’s search engine results pages (also known as SERPs). This data can be URLs, page titles, descriptions, ads, featured snippets, and even answer boxes.

Companies use scraping Google search results for a wide variety of purposes. In SEO, for example, professionals track keyword rankings and monitor how their content stacks up against competitors. In digital marketing, brands analyze trends and consumer intent. In business intelligence, teams might monitor competitors’ online presence or gather information about products and services in a particular domain.

Across industries, search engine scraping is becoming a key tool for:

- Competitive research: Understand how rival brands are performing in search results.

- SEO performance tracking: Monitor keyword positions, visibility, and search trends.

- Market research: Identify what people are searching for and how demand shifts over time.

- Content strategy: Analyze top-performing pages to understand what kind of content ranks well.

In essence, Google scraping turns publicly visible search data into structured, usable information that can inform high-impact business strategies.

Techniques for Scraping Google Search Results

There isn’t a uniform strategy applicable to all when it comes to scraping Google search results. Based on objectives, level of technical proficiency, and resource accessibility, different groups tend to employ varied strategies. That being said, most approaches can be classified into three broad categories: manual scraping, coded automated scraping, and google scraping through purpose-built APIs.

Let’s take a closer look at each.

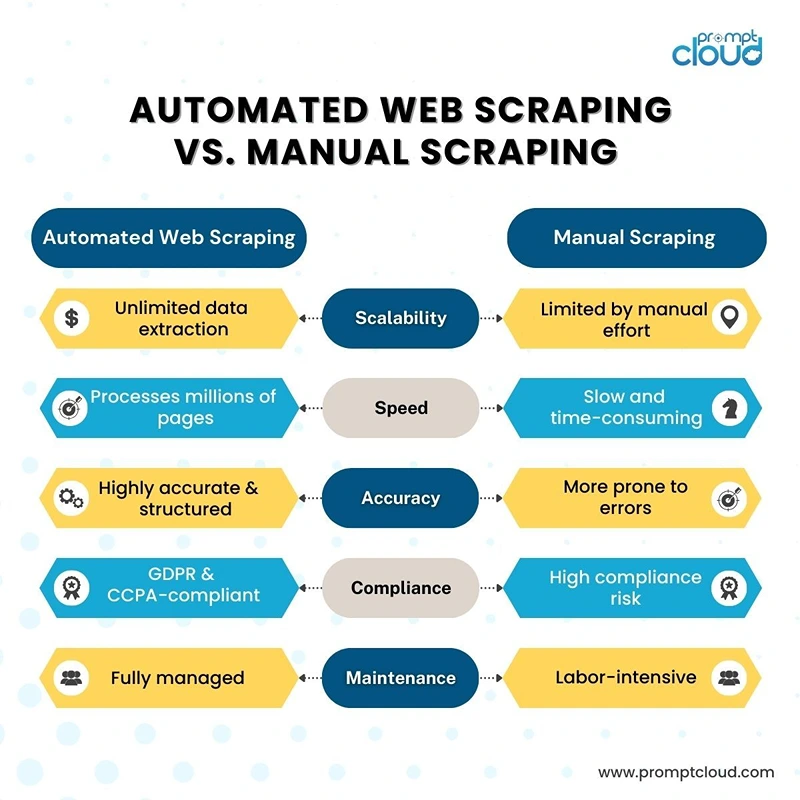

Manual vs. Automated Scraping

Manual scraping is the most rudimentary method, where users retrieve information from Google search pages and paste it into spreadsheets. While this method may aid in addressing one-off requests quickly, it is far too inefficient to sustain recurring requests and bulk datasets. As an example, if you are tracking hundreds of keywords or monitoring global search trends across various categories, manual collection simply would not work.

This is exactly where automation helps. Users are able to retrieve SERP data at scaled volumes around the clock due to automated tools and scripts. The time-saving and consistency offered in data collection through automation is accompanied by the benefits of accuracy. However, along with automation comes the new age hurdles of overcoming Google’s anti-scraping technologies, which will be further discussed in later sections.

Using Python Libraries Like BeautifulSoup and Scrapy

People with some coding experience can make use of powerful libraries such as Google Scrapy, BeautifulSoup, and Selenium to automate scraping Google search results.

- BeautifulSoup is a Python library used to parse HTML and XML documents. It is lightweight, making it ideal for simpler tasks such as extracting titles and URLs from a Google results page.

- Scrapy is more robust and built specifically for large-scale web scraping projects. It is a top choice for teams building custom search engine scraping solutions because it can handle data crawling, processing, and exporting efficiently.

- While Selenium is slower, it does allow for browser automation, which can be useful when dealing with dynamic content or CAPTCHAs. It simulates user activity, making it more difficult for Google to identify scraping.

All these tools have various advantages and disadvantages. However, all of them offer control and flexibility that off-the-shelf solutions do not.

Google SERP APIs and Their Advantages

If you want to skip the technical headache and still get reliable SERP data, APIs are your best friend. Google SERP APIs are built by third-party providers to deliver structured, real-time search result data through a simple API call.

These APIs take care of the heavy lifting for users, like rotating proxies, resolving CAPTCHAS, and maintaining uptime, so users can focus on data analysis rather than collection. APIs offer immense usefulness in the case of accuracy, dependability, timeliness of response, and uniformity of data attained.

At PromptCloud, we’ve worked with clients who chose APIs over custom scraping scripts specifically because of the reliability and scalability they offer. Especially when tracking thousands of keywords across regions, APIs provide an efficient, low-maintenance way to keep a pulse on the SERP.

Challenges in Scraping Google Search Results

While scraping Google may reveal hidden gems of information, it is not without its constraints. In fact, scraping search engine results—especially from Google—is one of the more technically complex forms of web scraping. Preventative measures against automated access are continuously refined by Google, making scrapers have to keep up equally sophisticated, which leads to complications like CAPTCHAs or ensuring data freshness. These are just a few of the many issues that businesses and developers face when tackling this problem.

Google’s Anti-Scraping Mechanisms

Google employs one of the most sophisticated anti-scraping infrastructures out there. Their systems work around trying to identify and eliminate unreasonably provocative or monotonous traffic, particularly from non-human participants. One of the primary barriers is CAPTCHA: the test is meant to distinguish between a machine and a human. If you are scaling and scraping Google data, Unusual Activity Detection will trigger, and CAPTCHAs will show up more frequently.

IP blocking is yet another mechanism in Google’s arsenal. They will assign a temporary blockade to the said IP if query levels are particularly high and sustained over a period of time. Having your requests too frequently or coming from the same IP address may lead Google to block that IP permanently or temporarily. This halts the collection of data and may require changing proxies or VPNs to continue scraping.

Handling these protections requires technical know-how, infrastructure, and sometimes external tools or services. These are not impossible barriers, but they certainly raise the bar for anyone looking to scrape Google effectively.

Data Accuracy and Freshness Issues

Search engine results can change by the minute. Factors like location, device type, search history, and even the language setting can influence what appears in a given SERP. This means the same keyword might yield slightly different results across users or timeframes.

For anyone doing search engine scraping for competitive intelligence or SEO, this variability presents a challenge. Maintaining accuracy and freshness in your data collection requires frequent scraping, ideally with regional and contextual customization. That’s where scalable scraping solutions or APIs become critical—they allow you to pull updated data consistently across different environments.

However, this also puts pressure on infrastructure. More frequent scraping increases the risk of detection, adds strain to systems, and demands more resource allocation, both in terms of time and cost.

Technical and Resource Limitations

Not every organization has a team of developers ready to build and maintain custom scrapers. Writing a scraper is one thing; maintaining it against Google’s constant updates is another. Websites change their layout often, and when Google tweaks its HTML structure—even slightly—it can break a scraper.

There’s also the question of resources. Running a scraper 24/7 to monitor keywords globally involves rotating proxies, managing errors, storing large volumes of data, and scaling infrastructure. All this requires dedicated servers, bandwidth, and consistent maintenance, which may not be feasible for small businesses or teams with limited budgets.

For many, these challenges become a strong reason to consider alternatives like PromptCloud’s custom data solutions or ready-to-use Google SERP APIs, which eliminate the need to build everything from scratch.

Is Scraping Google Legal?

One of the most frequently asked questions we hear at PromptCloud is, “Is scraping Google legal?” And the answer isn’t as straightforward as you might hope—it depends on what data you’re accessing, how you’re accessing it, and what you do with that data afterward. While scraping itself isn’t inherently illegal, the context and execution make all the difference.

Google’s Terms of Service and Restrictions

To start, let’s look at Google’s own rules. According to Google’s Terms of Service, automated access to its services without explicit permission is prohibited. This includes sending bots or scripts to scrape Google Search results.

That said, Terms of Service violations are not automatically criminal offenses. However, they can lead to civil lawsuits or platform bans. For instance, if you’re scraping Google aggressively and causing high traffic loads or accessing restricted areas, Google could block your IP, serve legal notices, or suspend associated accounts.

It’s also important to remember that while search results are publicly visible, they are still considered part of Google’s proprietary content. This means scraping without permission can fall into a legal gray area—especially if the scraped data is used for commercial purposes.

Legal Risks and Ethical Considerations

From a legal standpoint, the biggest concern is often whether your scraping activity violates any specific laws, like the Computer Fraud and Abuse Act (CFAA) in the U.S. or similar data protection regulations in other regions.

The risks become more serious when scraping involves:

- Bypassing access restrictions (e.g., scraping data behind logins or paywalls).

- Harvesting personally identifiable information (PII).

- Causing service disruptions to the target website.

That’s why many companies tread carefully. Even if scraping Google search results helps with SEO tracking or competitive analysis, the legal landscape must be considered. It’s not just about what’s possible—it’s about what’s permissible.

There’s also an ethical dimension. Search engines like Google invest heavily in maintaining their infrastructure and user experience. Respecting their guidelines and usage boundaries is part of being a responsible web user. If scraping activity causes server strain or negatively affects how results are displayed to others, that’s not just bad practice—it can harm reputations.

Workarounds: Public Data vs. Restricted Content

The most important aspect in this case is distinguishing between data that is truly publicly accessible without restrictions and those behind walls and fences. For example, general listings on SERPs such as URLs, snippets, and featured snippets are believed to be public, so anyone without an account can access them. However, attempting to scrape Google Ads or user-generated reviews associated with profile images dives deeper into sensitive territory.

To maintain a balance within ethically and legally suitable limits, many businesses resort to data partners like PromptCloud as well as Google’s SERP APIs. These businesses have set policies to capture legally sensitive data without outlining strict laws, all the while providing clean, structured, and compliant data for purchase.

Best Practices for Ethical Google Scraping

Even though scraping Google search results can offer immense value for SEO, market research, and competitive analysis, it’s important to approach it the right way. Ethical scraping not only helps you stay within legal boundaries but also ensures you’re not hurting the performance or integrity of the websites you’re pulling data from—including Google.

At PromptCloud, we always advocate for ethical and compliant data practices. Here are some of the best ways to keep your Google scraping efforts on the right side of both policy and performance.

Use Google SERP APIs Where Possible

One of the easiest ways to scrape ethically is to use a reliable SERP API instead of building your own scraper. These APIs are designed specifically for retrieving search engine data in a structured, stable, and scalable format. They typically handle all the behind-the-scenes complexity—like rotating proxies, solving CAPTCHAs, and managing region-specific queries—so you don’t have to.

Most importantly, reputable API providers design their services with legal compliance in mind. So when you opt for a Google SERP API, you’re not just getting convenience—you’re also reducing your legal risk.

At PromptCloud, many of our clients choose APIs for this exact reason. They need reliable, real-time data without having to worry about bans, blocks, or legal gray areas.

Respect Robots.txt Guidelines

Another important ethical guideline in search engine scraping is respecting the robots.txt file. This file exists on almost every website and tells bots which pages can or cannot be crawled. While robots.txt isn’t legally binding, it reflects the publisher’s preferences about automated access.

For Google, this file can be viewed at google.com/robots.txt. If your scraper is set up to respect these rules, you’re showing good faith and reducing your chances of facing unwanted blocks or challenges.

Remember: just because a page is publicly accessible doesn’t mean it’s open for unrestricted scraping. When in doubt, check robots.txt and limit scraping to what’s allowed.

Avoid Excessive Server Load

Google’s systems are built to handle massive traffic, but that doesn’t mean scraping should be aggressive. One of the biggest red flags is sending too many requests in a short amount of time. This can trigger Google’s anti-bot systems, cause your IP to get blocked, or even result in broader access restrictions.

To avoid this, follow rate-limiting practices:

- Space out your requests over time

- Use randomized intervals between queries

- Implement proxy rotation to spread traffic across multiple IPs

This not only helps avoid detection but also ensures you’re not overburdening the servers. Ethical scraping is about being efficient, not disruptive.

Stay Transparent Internally

Finally, make sure everyone involved in your scraping process—developers, analysts, legal teams, and decision-makers—is aware of the methods and policies being used. Transparency helps ensure that legal, technical, and ethical guidelines are followed throughout the project.

If you’re working with a vendor like PromptCloud, ask questions about how data is collected. The right partner will always be open about compliance, infrastructure, and risk management.

Responsible Google Scraping and Smarter Alternatives

Google scraping can deliver powerful insights for SEO, digital marketing, and competitive research—but it’s not without risks. From IP blocks to legal gray areas, scraping Google search results requires a careful, ethical approach.

The smartest way to stay compliant and efficient is to use Google SERP APIs or partner with data experts who understand the legal and technical nuances. These tools provide structured, up-to-date data without the hassle of maintaining your own scrapers.At PromptCloud, we help businesses access reliable SERP data at scale—ethically and efficiently. If you’re looking to skip the complexity and focus on insights, we’ve got you covered. Schedule a demo today!