Introduction

In the rapidly evolving landscape of artificial intelligence, generative AI has emerged as a groundbreaking technology. These AI models can create content that is often hard to distinguish from human-made work, ranging from text and images to music and code. Training these models depends on acquiring vast and varied datasets, a task in which web data scraping plays a crucial role.

What is Web Data Scraping?

Web data scraping is the process of extracting data from websites. This technique uses software to access web pages much as a human user would, but automatically and at a much larger scale. The scraped data can then be used for various purposes, including analysis, research, and training AI models.
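To make the extraction step concrete, here is a minimal sketch that pulls the visible text out of an HTML page using only Python's standard library. The `TextExtractor` class, the `extract_text` helper, and the sample page are illustrative assumptions rather than part of any particular scraping product, and a real scraper would first download the page and would usually need more robust parsing.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside a skipped tag
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return the page's visible text as one space-separated string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical page content; a real scraper would download this first.
SAMPLE_HTML = """
<html><head><title>Sample</title><style>p { color: red; }</style></head>
<body><h1>Hello</h1><p>Scraped <b>text</b> here.</p>
<script>console.log("ignored");</script></body></html>
"""

print(extract_text(SAMPLE_HTML))
```

Keeping the download step separate from the parsing step makes the extraction logic easy to test on saved pages without touching the network.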

Generative AI and its Need for Data

Generative AI, a subset of artificial intelligence, focuses on creating new content, whether text, images, videos, or music. Unlike traditional AI models, which are designed to analyze and interpret data, generative AI models produce new data that mimics human creativity. This capability is powered by complex algorithms and, most importantly, by extensive and diverse datasets. Here’s a deeper dive into the data needs of generative AI:

Volume of Data:

- Scale and Depth: Generative AI models, like GPT (Generative Pre-trained Transformer) and image generators such as DALL-E, require an enormous volume of data to learn diverse patterns effectively. This data is often measured in terabytes or more rather than gigabytes.

- Variety in Data: To capture the nuances of human language, art, or other forms of expression, the dataset must encompass a wide range of topics, languages, and formats.

Quality and Diversity of Data:

- Richness in Content: The quality of the data is as important as its quantity. Data must be rich in information, providing a broad spectrum of knowledge and cultural context.

- Diversity and Representation: Reducing bias in the data and representing a balanced range of views is essential. This includes diversity in terms of geography, culture, language, and perspectives.

Real-World and Contextual Relevance:

- Keeping Up with Evolving Contexts: AI models need to understand current events, slang, new terminologies, and evolving cultural norms. This requires regular updates with recent data.

- Contextual Understanding: For AI to generate relevant and sensible content, it needs data that provides context, which can be intricate and multi-layered.

Legal and Ethical Aspects of Data:

- Consent and Copyright: When scraping web data, it’s crucial to consider legal aspects such as copyright laws and user consent, especially when dealing with user-generated content.

- Data Privacy: With regulations like GDPR, ensuring data privacy and ethical use of scraped data is paramount.
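As one small illustration of handling personal data with care, the sketch below masks email addresses in scraped text before it is stored. The `redact_emails` function and its pattern are assumptions made for this example. The pattern is deliberately simple, and masking emails alone does not make a dataset compliant with GDPR or any other regulation.

```python
import re

# Deliberately simple pattern: it catches common address shapes,
# not every address that is technically valid.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str, placeholder: str = "[EMAIL]") -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


# Hypothetical scraped comment containing personal contact details.
sample = "Contact jane.doe@example.com or bob@example.org today"
print(redact_emails(sample))
```

Other identifiers such as names, phone numbers, and postal addresses would need their own handling, typically with dedicated tooling and legal review.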

Challenges in Data Processing:

- Data Cleaning and Preparation: Raw data from the web is often unstructured and requires significant cleaning and processing to be usable for AI training.

- Handling Ambiguity and Errors: Data from the web can be inconsistent, incomplete, or contain errors, posing challenges in training effective AI models.
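A minimal sketch of the cleaning step might look like the following. The function names and the `min_chars` threshold are hypothetical choices for illustration, and real pipelines usually add language detection, boilerplate removal, and other steps on top.

```python
import html
import re
import unicodedata


def clean_text(raw: str) -> str:
    """Decode HTML entities, normalize Unicode, and collapse whitespace."""
    text = html.unescape(raw)                   # e.g. "&amp;" becomes "&"
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space becomes a plain space
    text = re.sub(r"\s+", " ", text)            # collapse runs of whitespace
    return text.strip()


def clean_records(records, min_chars=20):
    """Clean each record and drop entries that are missing or too short."""
    cleaned = []
    for raw in records:
        if not isinstance(raw, str):
            continue  # skip missing or malformed entries
        text = clean_text(raw)
        if len(text) >= min_chars:  # min_chars is an arbitrary illustrative cutoff
            cleaned.append(text)
    return cleaned


# Hypothetical raw snippets as they might come out of a scraper.
raw_records = ["short", None, "This sentence is long   enough to keep."]
print(clean_records(raw_records))
```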

Future Directions:

- Synthetic Data Generation: To overcome limitations in data availability, there is growing interest in using AI to generate synthetic data that can augment real-world datasets.

- Cross-Domain Learning: Leveraging data from diverse domains to train more robust and versatile AI models is an area of active research.

The need for data in generative AI is not just about quantity but also about the richness, diversity, and relevance of the data. As AI technology continues to evolve, so too will the methods and strategies for collecting and utilizing data, always balancing the tremendous potential against ethical and legal considerations.

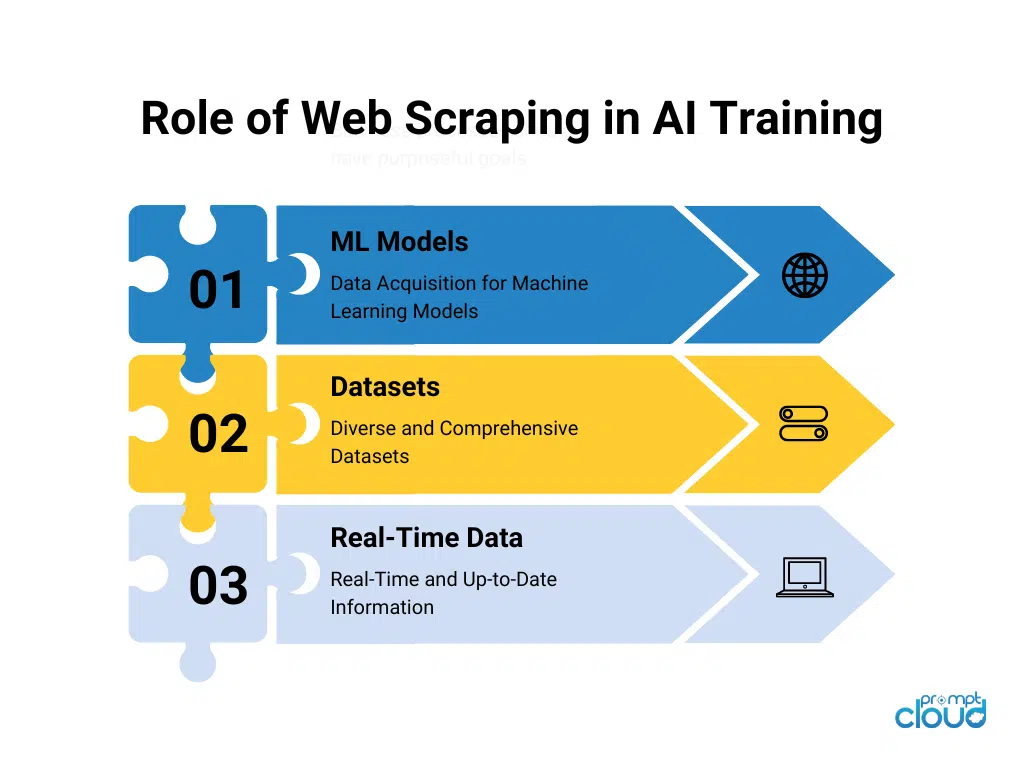

Role of Web Scraping in AI Training

Web scraping plays a pivotal role in the training and development of generative AI models. When executed correctly and ethically, it can provide the vast and varied datasets these AI systems need to learn and evolve. Let’s delve into the specifics of how web scraping contributes to AI training:

Data Acquisition for Machine Learning Models:

- Foundation for Learning: Generative AI models learn by example. Web scraping provides these examples in large quantities, offering a diverse range of data, from text and images to complex web structures.

- Automated Collection: Web scraping automates the data collection process, enabling the gathering of vast amounts of data more efficiently than manual methods.

Diverse and Comprehensive Datasets:

- Wide Range of Sources: Scraping data from various websites ensures a richness in the dataset, encompassing different styles, topics, and formats, which is crucial for training versatile AI models.

- Global and Cultural Variance: It allows for the inclusion of global and cultural nuances by accessing content from different regions and languages, leading to more culturally aware AI.

Real-Time and Up-to-Date Information:

- Current Trends and Developments: Scraping on a regular schedule captures recent data, so AI models can be trained on current, up-to-date information.

- Adaptability to Changing Environments: This is particularly important for AI models that need to understand or generate content relevant to current events or trends.

Challenges and Solutions in Data Quality:

- Ensuring Relevance and Accuracy: Web scraping must be paired with robust filtering and processing mechanisms to ensure that the data collected is relevant and of high quality.

- Dealing with Noisy Data: Techniques like data cleaning, normalization, and validation are crucial to refine the scraped data for training purposes.
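The filtering and validation described above could be sketched roughly as follows. The record layout (`url` and `text` keys), the keyword-based relevance check, and the function names are assumptions made for this example. Hash-based deduplication only catches exact duplicates after normalization, not paraphrased or near-duplicate pages.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def filter_records(records, keywords):
    """Keep records that are valid, on-topic, and not already seen."""
    seen = set()
    kept = []
    for record in records:
        text = record.get("text") or ""
        if not record.get("url") or not text.strip():
            continue  # validation: both fields are required
        lowered = text.lower()
        if not any(keyword.lower() in lowered for keyword in keywords):
            continue  # relevance: at least one keyword must appear
        digest = fingerprint(text)
        if digest in seen:
            continue  # deduplication: same text after normalization
        seen.add(digest)
        kept.append(record)
    return kept


# Hypothetical scraped records.
records = [
    {"url": "https://example.com/a", "text": "Solar panels cut energy costs."},
    {"url": "https://example.com/b", "text": "solar  panels cut ENERGY costs."},
    {"url": "", "text": "Solar news without a source."},
    {"url": "https://example.com/c", "text": "A recipe for banana bread."},
]
print([r["url"] for r in filter_records(records, ["solar"])])
```

Substring matching is a crude stand-in for relevance. Production systems often use classifiers or quality scoring instead.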

Ethical and Legal Considerations:

- Respecting Copyright and Privacy Laws: It’s important to navigate legal constraints, such as copyright laws and data privacy regulations, while scraping data.

- Consent and Transparency: Ethical scraping involves respecting website terms of use and being transparent about data collection practices.
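One machine-readable signal many sites publish is a robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to check whether a URL may be fetched. The rules, the user agent name, and the URLs are made up for illustration, and honoring robots.txt complements, rather than replaces, reading a site's terms of use and the applicable law.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it
# from the target site before requesting any other page.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-research-bot"  # hypothetical crawler name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules that are already held in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


robots = build_parser(ROBOTS_TXT)
print(robots.can_fetch(USER_AGENT, "https://example.com/articles/1"))
print(robots.can_fetch(USER_AGENT, "https://example.com/private/data"))
print(robots.crawl_delay(USER_AGENT))
```

Identifying the crawler with a descriptive user agent and pausing between requests according to the published crawl delay are common courtesies that also support the transparency goal above.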

Customization and Specificity:

- Tailored Data Collection: Web scraping can be customized to target specific types of data, which is particularly useful for training specialized AI models in fields like healthcare, finance, or law.

Cost-Effective and Scalable:

- Reducing Resource Expenditure: Scraping provides a cost-effective way to gather large datasets, reducing the need for expensive data acquisition methods.

- Scalability for Large-Scale Projects: As AI models grow more complex, the scalability of web scraping becomes a significant advantage.

Web scraping is a vital tool in the arsenal of AI development. It provides the data that fuels the learning and sophistication of generative AI models. As AI technology continues to advance, the role of web scraping in acquiring diverse, comprehensive, and up-to-date datasets becomes increasingly significant, highlighting the need for responsible and ethical scraping practices.

PromptCloud – Your Right Web Scraping Partner

PromptCloud offers state-of-the-art web scraping solutions that empower businesses and researchers to harness the full potential of data-driven strategies. Our advanced web scraping tools are designed to efficiently and ethically gather data from a wide array of online sources. With PromptCloud’s solutions, users can access real-time, high-quality data, ensuring that they stay ahead in today’s fast-paced digital landscape.

Our services cater to a range of needs, from market research and competitive analysis to training sophisticated generative AI models. We prioritize ethical scraping practices, ensuring compliance with legal and privacy standards, thus safeguarding our clients’ interests and reputations. Our scalable solutions are suitable for businesses of all sizes, offering a cost-effective and powerful way to drive innovation and informed decision-making.

Are you ready to unlock the power of data for your business? With PromptCloud’s web scraping solutions, you can tap into the wealth of information available online, transforming it into actionable insights. Whether you’re developing cutting-edge AI technologies or seeking to understand market trends, our tools are here to help you succeed.

Join the ranks of our satisfied clients who have seen tangible results by leveraging our web scraping services. Contact us today to learn more and take the first step towards harnessing the power of web data. Reach out to our sales team at sales@promptcloud.com.

Frequently Asked Questions (FAQs)

Where can I get AI training data?

AI training data can be sourced from a variety of platforms, including Kaggle, Google Dataset Search, and the UCI Machine Learning Repository. For tailored and specific needs, PromptCloud offers custom data solutions, providing high-quality, relevant datasets that are crucial for effective AI training. We specialize in web scraping and data extraction, delivering structured data as per your requirements. Additionally, crowdsourcing platforms like Amazon Mechanical Turk can also be utilized for custom dataset generation.

How big is the AI training dataset?

The size of an AI training dataset can vary greatly depending on the complexity of the task, the algorithm being used, and the desired accuracy of the model. Here are some general guidelines:

- Simple Tasks: For basic machine learning models, such as linear regression or small-scale classification problems, a few hundred to a few thousand data points might be sufficient.

- Complex Tasks: For more complex tasks, like deep learning applications (including image and speech recognition), datasets can be significantly larger, often ranging from tens of thousands to millions of data points.

- Natural Language Processing (NLP): NLP tasks, especially those involving deep learning, typically require large datasets, sometimes comprising millions of text samples.

- Image and Video Recognition: These tasks also require large datasets, often on the order of millions of images or frames, particularly for high-accuracy deep learning models.

The key is not just the quantity of data but also its quality and diversity. A large dataset with poor quality or low variability might be less effective than a smaller, well-curated dataset. For specific projects, it’s important to balance the size of the dataset with the computational resources available and the specific goals of the AI application.

Where can I find data for AI?

Finding data for AI projects can be done through a variety of sources, depending on the nature and requirements of your project:

- Public Datasets: Websites like Kaggle, Google Dataset Search, UCI Machine Learning Repository, and government databases often provide a wide range of datasets for different domains.

- Web Scraping: Tools like PromptCloud can help you extract large amounts of custom data from the web. This is particularly useful for creating datasets tailored to your specific AI project.

- Crowdsourcing Platforms: Amazon Mechanical Turk and Figure Eight (now part of Appen) allow you to gather and label data, which is especially useful for tasks requiring human judgment.

- Data Sharing Platforms: Platforms like AWS Data Exchange and Data.gov provide access to a variety of datasets, including those for commercial use.

- Academic Databases: For research-oriented projects, academic databases like JSTOR or PubMed offer valuable data, especially in fields like social sciences and healthcare.

- APIs: Many organizations provide APIs for accessing their data. For example, X (formerly Twitter) and Facebook offer APIs for social media data, subject to their access tiers and terms, and there are numerous APIs for weather, financial data, and more.

Remember, the key to effective AI training is not only the size but also the quality and relevance of the data to your specific problem.