Web scraping has become an essential tool for businesses seeking valuable data to inform their strategies. Whether it’s for tracking competitor pricing, monitoring market trends, or extracting customer sentiment, web scraping empowers businesses to make data-driven decisions. However, one major challenge that frequently arises is IP bans – a common roadblock in the scraping process.

This guide explains why IP bans occur and walks through strategies for avoiding them. By the end, you’ll know how to keep your data extraction uninterrupted, scalable, and productive.

Why Do IP Bans Happen?

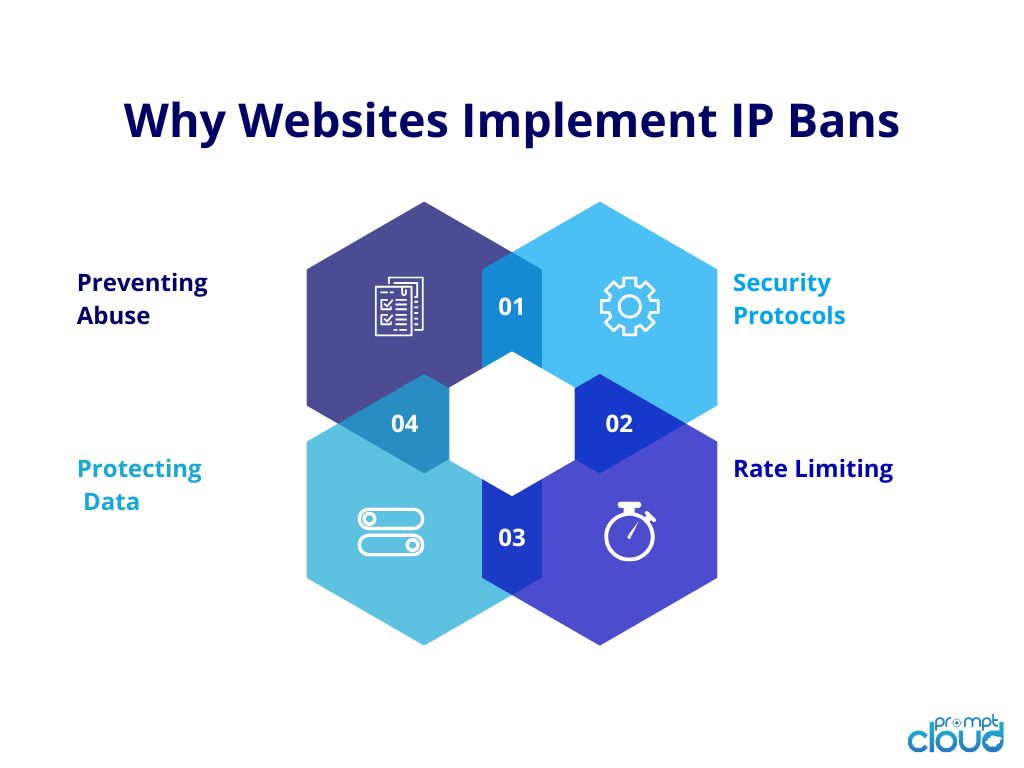

Before diving into how to bypass IP bans, it’s important to understand why websites implement them. IP bans typically occur due to:

- Preventing Abuse:

Websites use IP bans to prevent excessive data requests, ensuring their servers are not overloaded by automated bots.

- Security Protocols:

IP bans are often part of security measures aimed at detecting suspicious behavior and preventing unauthorized access.

- Protecting Data:

Some websites restrict access to safeguard proprietary data or to limit competitors from extracting valuable information.

- Rate Limiting:

Websites enforce rate limits to manage traffic, limiting the number of requests from the same IP address within a given timeframe.

Understanding these reasons is the first step toward developing effective strategies to bypass IP bans and continue data extraction smoothly.
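
To make the rate-limiting point concrete, here is a minimal sketch of how a site might count requests per IP address. It assumes a simple sliding-window rule; the class name, the limit of 3 requests per 10 seconds, and the example address are all illustrative, and real sites may use very different logic.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per IP within `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def allow(self, ip, now):
        recent = self.hits[ip]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # over the limit: a site might throttle or ban here
        recent.append(now)
        return True


# Illustrative values: 3 requests per 10 seconds from one address.
limiter = SlidingWindowLimiter(limit=3, window=10.0)
results = [limiter.allow("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0, 12.5)]
print(results)  # [True, True, True, False, True]
```

The fourth request is rejected because three requests already sit inside the window. The fifth is accepted because the earlier ones have expired, which is why spacing requests out matters.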

How to Effectively Bypass IP Bans for Web Scraping

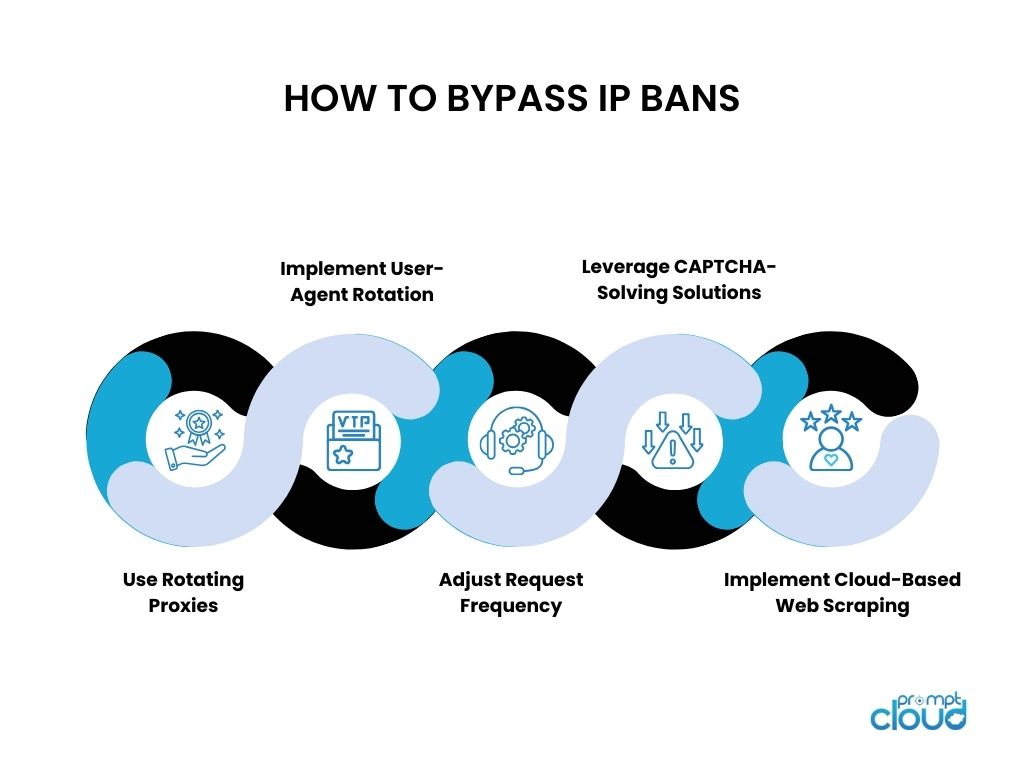

1. Use Rotating Proxies

One of the most effective ways to bypass IP bans is by using rotating proxies. Proxies act as intermediaries between your scraper and the target website, allowing you to send requests from different IP addresses.

Here’s why they work:

- Prevent Detection:

When each request comes from a different IP, websites find it harder to detect patterns, which reduces the risk of getting banned.

- Scalability:

Rotating proxies enable scraping at scale, because you can send many requests in parallel without any single IP address exceeding the site’s per-IP rate limit.

How to Use Rotating Proxies to Avoid IP Bans

- Choose High-Quality Proxies:

Invest in residential or data center proxies from a reliable provider to ensure stable and consistent performance.

- Randomize IP Rotation Intervals:

Set your proxies to rotate randomly to make your requests appear natural and unpredictable.

- Integrate Proxies with User-Agent Rotation:

Combining proxies with user-agent rotation increases your chances of bypassing IP bans successfully.
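
As a rough illustration of randomized rotation, the sketch below keeps one proxy for a random number of requests and then switches to a different one. The `ProxyRotator` class, the pool addresses (taken from a documentation-only IP range), and the 1 to 5 request interval are assumptions made for the example. It uses only Python's standard `urllib` and sends no traffic as written.

```python
import random
import urllib.request

# Placeholder addresses; replace with the endpoints your provider gives you.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Reuse a proxy for a random number of requests, then switch."""

    def __init__(self, pool, min_uses=1, max_uses=5, rng=None):
        self.pool = list(pool)
        self.min_uses = min_uses
        self.max_uses = max_uses
        self.rng = rng or random.Random()
        self.current = None
        self.remaining = 0

    def next_proxy(self):
        if self.remaining <= 0:
            # Avoid picking the same proxy twice in a row when possible.
            candidates = [p for p in self.pool if p != self.current] or self.pool
            self.current = self.rng.choice(candidates)
            self.remaining = self.rng.randint(self.min_uses, self.max_uses)
        self.remaining -= 1
        return self.current


def opener_for(proxy):
    """Build a urllib opener that routes HTTP and HTTPS traffic via `proxy`."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXY_POOL)
for _ in range(5):
    proxy = rotator.next_proxy()
    opener = opener_for(proxy)
    # opener.open(url, timeout=10) would send the request through `proxy`.
    print("next request via", proxy)
```

Many commercial proxy services rotate addresses on their side, in which case a single gateway endpoint replaces the pool and this client-side logic is unnecessary.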

2. Implement User-Agent Rotation

Another effective way to bypass IP bans is by rotating user-agent strings, which help simulate requests from different browsers, devices, or operating systems. By making each request look like it’s coming from a different device, you can evade detection.

- Why It Works:

Websites often inspect user-agent strings to identify bots. Rotating them makes your scraper look like many different clients, which makes it harder to flag as a bot.

How to Implement User-Agent Rotation for Seamless Scraping

- Create a Diverse Pool of User-Agents:

Use user-agents from popular browsers and devices to make your requests appear more authentic.

- Randomize Headers:

Alongside user-agent rotation, rotate other headers like Accept-Language and Referer to mimic human behavior.

- Use in Combination with Proxies:

Combining user-agent rotation with proxies increases the chances of successfully bypassing IP bans.
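
The points above could be sketched as a small helper that builds a fresh set of headers for each request. The user-agent strings and language values are illustrative samples rather than a vetted list, and `build_headers` is a hypothetical name introduced for this example.

```python
import random

# Illustrative samples only; keep a real pool current with actual browser releases.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]

ACCEPT_LANGUAGES = [
    "en-US,en;q=0.9",
    "en-GB,en;q=0.8",
    "de-DE,de;q=0.9,en;q=0.7",
]


def build_headers(referer=None, rng=random):
    """Return request headers with a randomly chosen User-Agent and language."""
    headers = {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": rng.choice(ACCEPT_LANGUAGES),
    }
    if referer:
        headers["Referer"] = referer
    return headers


headers = build_headers(referer="https://example.com/")
print(headers["User-Agent"])
```

The resulting dictionary can be passed to most HTTP clients, for instance as the `headers` argument of `urllib.request.Request`.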

3. Adjust Request Frequency

Managing request frequency is a critical aspect of learning how to bypass IP bans effectively. Websites often set rate limits to detect unusual traffic spikes.

- Why It Works:

Randomized delays between requests mimic human browsing and help keep your traffic below the thresholds that trigger rate limits and, eventually, IP bans.

How to Manage Request Frequency for Undetectable Scraping

- Use Random Delays:

Implement randomized delays between requests to make scraping behavior appear natural.

- Throttling Mechanism:

Use a dynamic throttling system that adjusts request speeds based on server response times.

- Monitor Server Response:

Pay attention to response times. If the server slows down, you may be approaching its rate limits, so reduce your request rate accordingly.
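
One possible shape for such a throttle is shown below: the delay doubles when responses become slow and shrinks gradually when they are fast again, with random jitter applied to every wait. The class name and the thresholds are assumptions chosen for illustration. Treating HTTP 429 and 503 responses as a slowdown signal is also an assumption that goes beyond the response-time monitoring described above.

```python
import random


class AdaptiveThrottle:
    """Pick a randomized delay that grows when the server slows down."""

    def __init__(self, base_delay=1.0, max_delay=30.0, slow_threshold=2.0):
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.slow_threshold = slow_threshold  # seconds; slower than this backs off
        self.delay = base_delay

    def record(self, response_seconds, status=200):
        """Update the delay from the latest response time and status code."""
        if status in (429, 503) or response_seconds > self.slow_threshold:
            self.delay = min(self.delay * 2, self.max_delay)  # back off
        else:
            self.delay = max(self.delay * 0.9, self.base_delay)  # recover slowly

    def next_delay(self, rng=random):
        """Return the current delay with +/-50% jitter so gaps are not uniform."""
        return self.delay * rng.uniform(0.5, 1.5)


throttle = AdaptiveThrottle()
throttle.record(response_seconds=0.4)  # fast response: delay stays at the base
throttle.record(response_seconds=3.1)  # slow response: delay doubles
print(throttle.delay)
```

In a scraping loop, you would call `time.sleep(throttle.next_delay())` before each request and `throttle.record(...)` after each response.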

4. Leverage CAPTCHA-Solving Solutions

Websites use CAPTCHAs to block bots from accessing their content. To maintain seamless access, your scraper needs a way to handle CAPTCHA challenges when they appear.

- Use CAPTCHA-Solving Services:

Integrate third-party CAPTCHA solvers that use AI to solve challenges in real time.

- Optical Character Recognition (OCR):

Use OCR tools to read text-based CAPTCHA images and convert them into text your scraper can submit.

- Automated Retry Mechanism:

Design your scraper to retry a few times if CAPTCHA solving fails, ensuring continuous data extraction.
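
The retry idea can be sketched independently of any particular solver. In the example below, `fetch` and `solve` are hypothetical callables that you would supply; they stand in for your HTTP client and whichever solving service or OCR step you use, and the stub versions exist only so the example runs.

```python
import time


class CaptchaUnsolved(Exception):
    """Raised when every attempt to get past a CAPTCHA has failed."""


def fetch_with_captcha_retry(fetch, solve, max_attempts=3, pause=0.0):
    """Call `fetch`; if it reports a CAPTCHA, ask `solve` and try again.

    `fetch(token)` returns (body, challenge); challenge is None on success.
    `solve(challenge)` returns a token, or None if solving failed.
    Both are hypothetical callables supplied by the caller.
    """
    token = None
    for attempt in range(1, max_attempts + 1):
        body, challenge = fetch(token)
        if challenge is None:
            return body
        token = solve(challenge)
        if pause:
            time.sleep(pause * attempt)  # wait a little longer each time
    raise CaptchaUnsolved(f"gave up after {max_attempts} attempts")


# Stub implementations so the sketch runs without a network or a solver.
def fake_fetch(token):
    return ("<html>page</html>", None) if token == "ok" else (None, "challenge-1")


def fake_solve(challenge):
    return "ok"


print(fetch_with_captcha_retry(fake_fetch, fake_solve))
```

Capping the number of attempts keeps a failing solver from looping indefinitely and from generating extra traffic that could itself trigger a ban.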

5. Implement Cloud-Based Web Scraping

Cloud-based web scraping solutions offer a robust and scalable way to bypass IP bans. Here’s how cloud-hosted web scraping makes data extraction more efficient:

- High Availability & Scalability:

The cloud provides access to a large pool of IPs, making it easier to rotate addresses and avoid IP bans.

- Automated Scheduling:

With cloud-based scheduling, you can automate scraping at intervals that align with rate limits, helping you avoid detection.

- Adaptive IP Pools:

Cloud web scraping solutions have access to a dynamic IP pool, making IP rotation seamless and reducing the likelihood of IP bans.
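
As a small worked example of aligning a schedule with a rate limit, suppose a site allows 60 requests per 60 seconds from one IP (an assumed figure, not one taken from any particular site). Using only part of that allowance leaves headroom for retries. The function names and the 80% headroom value below are illustrative.

```python
def request_interval(limit, window_seconds, headroom=0.8):
    """Seconds between requests from one IP, using only `headroom` of the limit."""
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    return window_seconds / (limit * headroom)


def run_times(start, count, interval):
    """Offsets (in seconds from `start`) at which to fire `count` requests."""
    return [start + i * interval for i in range(count)]


# Assumed limit: 60 requests per 60 seconds per IP.
interval = request_interval(limit=60, window_seconds=60)
print(round(interval, 2))           # seconds between requests per IP
print(run_times(0.0, 4, interval))  # first four scheduled offsets
```

With several IPs in the pool, each address can follow its own schedule of this kind, so total throughput grows with the pool size while every individual address stays under the limit.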

6. Set Up Adaptive Scraping Mechanisms

Websites often change their structures, blocking patterns, or anti-scraping measures. Adaptive mechanisms ensure your scraping strategies evolve to handle these changes and effectively bypass IP bans.

- Use AI for Adaptation:

AI algorithms can detect website changes and adjust scraping patterns accordingly.

- Set Up Monitoring Systems:

Regularly monitor target websites for layout changes and update your scraping scripts promptly.
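
A simple, non-AI way to monitor for layout changes is to fingerprint a page's tag structure and compare it between runs. The sketch below is one such approach under stated assumptions: it hashes the sequence of tags and their CSS classes and ignores text content. The function name and the sample HTML are made up for the example.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Record each opening tag together with its sorted class names."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{'.'.join(sorted(classes.split()))}")


def layout_fingerprint(html):
    """Hash the page structure (tags and classes), ignoring text content."""
    collector = _TagCollector()
    collector.feed(html)
    collector.close()
    joined = "|".join(collector.tags)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


old_page = '<div class="price"><span>10</span></div>'
same_layout = '<div class="price"><span>12</span></div>'
new_layout = '<div class="cost"><span>12</span></div>'

print(layout_fingerprint(old_page) == layout_fingerprint(same_layout))  # True
print(layout_fingerprint(old_page) == layout_fingerprint(new_layout))   # False
```

Storing the fingerprint after each run and alerting when it changes gives an early warning that selectors may need updating before bad data reaches downstream systems.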

Best Practices You Need for Successful Web Scraping

While understanding how to bypass IP bans is crucial, there are a few other best practices to ensure efficient and compliant data extraction:

1. Prioritize Compliance

Ensure that your scraping activities comply with legal and ethical standards. Scraping publicly available data is generally legal, but respecting website terms and privacy policies is essential.
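
One machine-readable signal of a site's preferences is its robots.txt file. Checking it is an assumption of this sketch rather than a complete compliance process, and it does not replace reading the site's terms. The example parses an inline sample file with Python's standard `urllib.robotparser`; the rules and the `my-scraper` agent name are made up.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration; a real file lives at https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

allowed = parser.can_fetch("my-scraper", "https://example.com/products")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/report")
delay = parser.crawl_delay("my-scraper")

print(allowed, blocked, delay)
```

Against a live site, `set_url(...)` followed by `read()` would load the file instead of the inline string.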

2. Invest in Robust Web Scraping Tools

Using reliable web scraping tools with built-in features like proxy management, user-agent rotation, and CAPTCHA-solving capabilities significantly improves the chances of bypassing IP bans.

3. Optimize Scraping Strategies Regularly

Continuously test, optimize, and refine your scraping strategies to improve efficiency, enhance data quality, and maintain consistent access.

4. Ensure Data Quality

Implement data validation processes to ensure the data collected is accurate, clean, and usable. This will help improve data analysis, decision-making, and overall business outcomes.
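
A validation step can be as small as a function that lists the problems found in each scraped record. The field names (`name`, `price`, `url`) and the rules below are hypothetical; adapt them to whatever your scraper collects.

```python
def validate_record(record):
    """Return a list of problems found in one scraped record (empty if clean)."""
    problems = []

    name = (record.get("name") or "").strip()
    if not name:
        problems.append("missing name")

    try:
        # Accept values like "$1,299.00" by stripping the symbol and separators.
        price = float(str(record.get("price", "")).replace(",", "").lstrip("$"))
        if price < 0:
            problems.append("negative price")
    except ValueError:
        problems.append("unparseable price")

    url = record.get("url") or ""
    if not url.startswith(("http://", "https://")):
        problems.append("bad url")

    return problems


good = {"name": "Widget", "price": "$1,299.00", "url": "https://example.com/w"}
bad = {"name": " ", "price": "N/A", "url": "ftp://example.com/w"}

print(validate_record(good))  # []
print(validate_record(bad))   # ['missing name', 'unparseable price', 'bad url']
```

Records with a non-empty problem list can be logged and set aside for review rather than silently dropped, which also helps reveal when a site layout has changed.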

Conclusion:

Mastering how to bypass IP bans is key to unlocking the full potential of web scraping. By using rotating proxies, user-agent rotation, adaptive cloud-based scraping, and managing request rates, you can maintain seamless access to valuable web data.

Implementing these strategies will enable you to extract the data you need without disruptions, keeping your business competitive and data-driven. For custom web scraping requirements, schedule a quick demo with our team.