The stock market is a huge database with millions of entries that get updated every single second. While many companies provide financial data, they usually do so through an API (application programming interface), and as you may have guessed, these APIs are rarely free. Yahoo Finance is a trusted source of stock market data and has a paid API. In this guide, we walk you step by step through scraping Yahoo Finance data using Python.

Why Crawl Data from Yahoo Finance?

If you need free, clean financial data from a trusted source, Yahoo Finance is your best bet. The company profile pages are built with a uniform structure, which makes scraping them with Python easy. If you write a script to crawl data from the financial page of Microsoft, the same script can also be used to crawl data from the financial page of Apple.

How to Web Scrape Finance Data using Python?

For installation and getting started, refer to the basic steps in this article on data scraping with Python, where we discussed how to crawl data from a leading hotel booking portal. Once you have installed all the dependencies along with the code editor Atom, let’s start scraping Yahoo Finance with Python.

1. Start with the Code for Scraping with Python

Once the installation and setup are complete, we can go straight to the code and start scraping Yahoo Finance. The code is given further below and can be run with just the python command.

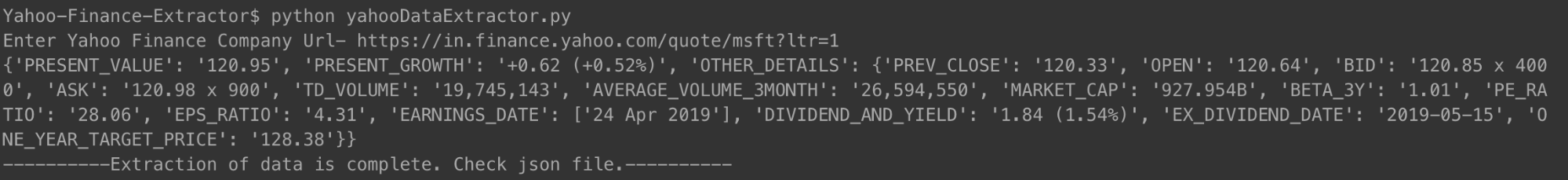

You can run the code as shown above. When prompted, enter the URL of the Yahoo Finance page of the company whose financial summary you want to check. We used the link for Microsoft.

[code language="python"]
#!/usr/bin/env python3
# -*- coding: utf-8 -*-

import json
import ssl
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

# For ignoring SSL certificate errors (this turns certificate verification off)
ctx = ssl.create_default_context()
ctx.check_hostname = False
ctx.verify_mode = ssl.CERT_NONE

# Input from the user
url = input('Enter Yahoo Finance Company Url- ')

# Making the website believe that you are accessing it using a Mozilla browser
req = Request(url, headers={'User-Agent': 'Mozilla/5.0'})
webpage = urlopen(req, context=ctx).read()

# Creating a BeautifulSoup object of the HTML page for easy extraction of data.
soup = BeautifulSoup(webpage, 'html.parser')
html = soup.prettify('utf-8')

company_json = {}
other_details = {}

# Current price
for span in soup.find_all('span',
                          attrs={'class': 'Trsdu(0.3s) Trsdu(0.3s) Fw(b) Fz(36px) Mb(-4px) D(b)'}):
    company_json['PRESENT_VALUE'] = span.text.strip()

# Change in price (absolute and percentage)
for div in soup.find_all('div', attrs={'class': 'D(ib) Va(t)'}):
    for span in div.find_all('span', recursive=False):
        company_json['PRESENT_GROWTH'] = span.text.strip()

# Summary table: each value sits in a <td> identified by its data-test attribute
for td in soup.find_all('td', attrs={'data-test': 'PREV_CLOSE-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['PREV_CLOSE'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'OPEN-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['OPEN'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'BID-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['BID'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'ASK-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['ASK'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'DAYS_RANGE-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['DAYS_RANGE'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'FIFTY_TWO_WK_RANGE-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['FIFTY_TWO_WK_RANGE'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'TD_VOLUME-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['TD_VOLUME'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'AVERAGE_VOLUME_3MONTH-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['AVERAGE_VOLUME_3MONTH'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'MARKET_CAP-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['MARKET_CAP'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'BETA_3Y-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['BETA_3Y'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'PE_RATIO-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['PE_RATIO'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'EPS_RATIO-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['EPS_RATIO'] = span.text.strip()

# There can be more than one earnings date, so they are collected in a list
for td in soup.find_all('td', attrs={'data-test': 'EARNINGS_DATE-value'}):
    other_details['EARNINGS_DATE'] = []
    for span in td.find_all('span', recursive=False):
        other_details['EARNINGS_DATE'].append(span.text.strip())

# This value is read from the <td> itself rather than from a child <span>
for td in soup.find_all('td', attrs={'data-test': 'DIVIDEND_AND_YIELD-value'}):
    other_details['DIVIDEND_AND_YIELD'] = td.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'EX_DIVIDEND_DATE-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['EX_DIVIDEND_DATE'] = span.text.strip()

for td in soup.find_all('td', attrs={'data-test': 'ONE_YEAR_TARGET_PRICE-value'}):
    for span in td.find_all('span', recursive=False):
        other_details['ONE_YEAR_TARGET_PRICE'] = span.text.strip()

company_json['OTHER_DETAILS'] = other_details

# Save the extracted data as JSON
with open('data.json', 'w') as outfile:
    json.dump(company_json, outfile, indent=4)

print(company_json)

# Save the prettified HTML so the page can be inspected later
with open('output_file.html', 'wb') as file:
    file.write(html)

print('----------Extraction of data is complete. Check json file.----------')
[/code]

Once you run the code and enter a company URL, you will see the scraped data printed on your terminal. The same data is also saved as JSON to a file named “data.json” in your folder. For Microsoft, we obtained the following JSON.

[code language="python"]
{
    "PRESENT_GROWTH": "+1.31 (+0.67%)",
    "PRESENT_VALUE": "197.00",
    "PREV_CLOSE": "195.69",
    "OPEN": "196.45",
    "BID": "196.89 x 900",
    "ASK": "197.00 x 1400",
    "TD_VOLUME": "18,526,644",
    "AVERAGE_VOLUME_3MONTH": "29,962,082",
    "MARKET_CAP": "928.91B",
    "BETA_3Y": "0.91",
    "PE_RATIO": "16.25",
    "EPS_RATIO": "12.12",
    "EARNINGS_DATE": [
        "Apr 30, 2019"
    ],
    "DIVIDEND_AND_YIELD": "2.92 (1.50%)",
    "EX_DIVIDEND_DATE": "2019-02-08",
    "ONE_YEAR_TARGET_PRICE": "190.94"
}
[/code]

For Apple, whose stock was trading slightly lower when we ran the script, the JSON looked like this.

[code language="python"]
{
    "PRESENT_VALUE": "198.87",
    "PRESENT_GROWTH": "-0.08 (-0.04%)",
    "OTHER_DETAILS": {
        "PREV_CLOSE": "198.95",
        "OPEN": "199.20",
        "BID": "198.91 x 800",
        "ASK": "198.99 x 1000",
        "TD_VOLUME": "27,760,668",
        "AVERAGE_VOLUME_3MONTH": "28,641,896",
        "MARKET_CAP": "937.728B",
        "BETA_3Y": "0.91",
        "PE_RATIO": "16.41",
        "EPS_RATIO": "12.12",
        "EARNINGS_DATE": [
            "30 Apr 2019"
        ],
        "DIVIDEND_AND_YIELD": "2.92 (1.50%)",
        "EX_DIVIDEND_DATE": "2019-02-08",
        "ONE_YEAR_TARGET_PRICE": "193.12"
    }
}
[/code]

You can do the same with any number of companies that you want and as frequently as you want to stay updated.
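
Running the scraper for several companies in one go could look roughly like the sketch below. It is only an illustration: the URL pattern https://finance.yahoo.com/quote/SYMBOL is an assumption, and the names build_url, scrape_many and scrape_fn are hypothetical. scrape_fn stands in for the scraping code above wrapped in a function that takes a URL and returns a dictionary.

```python
import time

# Assumed URL pattern for a company's summary page (not taken from the article).
BASE_URL = "https://finance.yahoo.com/quote/{symbol}"


def build_url(symbol):
    """Return the assumed summary-page URL for a ticker symbol."""
    return BASE_URL.format(symbol=symbol.strip().upper())


def scrape_many(symbols, scrape_fn, delay_seconds=5.0):
    """Call scrape_fn(url) for each symbol, pausing between requests.

    scrape_fn is a hypothetical wrapper around the scraper: it takes a URL
    and returns a dict. A failure for one company does not stop the others.
    """
    results = {}
    for index, symbol in enumerate(symbols):
        if index > 0:
            time.sleep(delay_seconds)  # space out the requests
        try:
            results[symbol] = scrape_fn(build_url(symbol))
        except Exception as error:  # e.g. a network error or a changed page layout
            results[symbol] = {"ERROR": str(error)}
    return results
```

Calling scrape_many(["MSFT", "AAPL"], your_scrape_function) would then return one dictionary per company, keyed by ticker symbol. The pause between requests is a design choice to avoid hammering the site.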

2. The Crawler Code Explained

As in our previous web scraping code, we first obtained the entire HTML page. From it, we identified the specific tags (with specific classes or attributes) that held the data we needed. This step was done manually for a single company’s details page. Once the tags and their respective classes were identified, we used BeautifulSoup to pull those tags out in our code.

Then, from each of those tags, we copied the necessary data into a dictionary called company_json. This is what we eventually wrote to a JSON file called data.json. You can see that we also saved the scraped HTML to a file called output_file.html on your local disk. This is done so that you can analyse the HTML page yourself and come up with other Python scraping techniques to crawl more data.
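
One way to analyse the saved HTML is to list every tag that carries a data-test attribute, together with the text inside it. The sketch below does this with the standard library’s html.parser instead of BeautifulSoup, purely so that it has no dependencies; the names DataTestCollector and list_data_test_fields are hypothetical, and it assumes the page still marks its values with data-test attributes as in the code above.

```python
from html.parser import HTMLParser


class DataTestCollector(HTMLParser):
    """Collect the text inside every tag that carries a data-test attribute."""

    def __init__(self):
        super().__init__()
        self.fields = {}   # data-test name -> text found inside that tag
        self._open = []    # data-test tags that have not been closed yet

    def handle_starttag(self, tag, attrs):
        # Count nested tags of the same name so the right end tag closes an entry.
        for entry in self._open:
            if entry["tag"] == tag:
                entry["depth"] += 1
        name = dict(attrs).get("data-test")
        if name:
            self._open.append({"tag": tag, "name": name, "depth": 1, "chunks": []})

    def handle_endtag(self, tag):
        still_open = []
        for entry in self._open:
            if entry["tag"] == tag:
                entry["depth"] -= 1
            if entry["depth"] == 0:
                # Tag closed: store its text with whitespace collapsed.
                text = " ".join("".join(entry["chunks"]).split())
                self.fields[entry["name"]] = text
            else:
                still_open.append(entry)
        self._open = still_open

    def handle_data(self, data):
        # Text belongs to every data-test tag that is currently open.
        for entry in self._open:
            entry["chunks"].append(data)


def list_data_test_fields(html_text):
    """Return a dict mapping each data-test name to the text inside its tag."""
    collector = DataTestCollector()
    collector.feed(html_text)
    return collector.fields
```

To try it on the file written by the scraper, read output_file.html with open('output_file.html', encoding='utf-8') and pass its contents to list_data_test_fields. Any name that shows up in the result but is not yet in the scraper is a candidate for a new field.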

3. Some Important Data Points that We Captured

- PREV_CLOSE: The closing price of a stock on the previous day of trading. The previous day here means the last day on which the stock market was open, so holidays do not count.

- OPEN: The opening price, also called Open for short, is the price of a share at the start of a trading day. For example, the opening price of any stock on the New York Stock Exchange (NYSE) is its price at 9:30 a.m. Eastern time.

- BID & ASK: Both prices are quotes for a single share of the stock. The bid is the price that buyers are willing to pay for it, whereas the ask is the price that sellers are willing to sell it for. The multiplier is the number of shares pending trade at the respective price.

- TD_VOLUME: It is simply the number of shares that changed hands during the day.

- PE_RATIO: Probably the single most important factor that investors look at, it is calculated by dividing the current market price of a company’s stock by the company’s earnings per share. Simply put, it is the amount of money one is ready to pay for each rupee of the company’s earnings.

The reason why we explained some of the important data points is that we wanted you to know how deep you can dive into the financials of a company, just by scraping data from its Yahoo Finance page.
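
Since every value is scraped as text, it has to be converted before you can calculate with it. The sketch below shows one way to do that and checks the PE ratio formula against the Apple figures shown earlier. The function names are hypothetical, and the set of suffixes (K, M, B, T) is an assumption about how large numbers are abbreviated on the page.

```python
def parse_number(text):
    """Convert strings such as '27,760,668' or '937.728B' to a float."""
    # Assumed abbreviations for thousands, millions, billions and trillions.
    suffixes = {"K": 1e3, "M": 1e6, "B": 1e9, "T": 1e12}
    cleaned = text.replace(",", "").strip()
    if cleaned and cleaned[-1].upper() in suffixes:
        return float(cleaned[:-1]) * suffixes[cleaned[-1].upper()]
    return float(cleaned)


def parse_quote(text):
    """Split a bid/ask string such as '198.91 x 800' into (price, shares)."""
    price, shares = text.split("x")
    return parse_number(price), int(parse_number(shares))


def pe_ratio(price, eps):
    """Price-to-earnings ratio: market price divided by earnings per share."""
    return price / eps


if __name__ == "__main__":
    price = parse_number("198.87")   # PRESENT_VALUE from the Apple JSON
    eps = parse_number("12.12")      # EPS_RATIO from the Apple JSON
    print(round(pe_ratio(price, eps), 2))  # 16.41, the scraped PE_RATIO
    print(parse_quote("198.91 x 800"))     # (198.91, 800)
```

Dividing the scraped price by the scraped earnings per share reproduces the scraped PE_RATIO, which is a handy sanity check on the extracted values.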

What Data can you Crawl from Yahoo Finance?

You can crawl several different data points using this Python scraper. The present value and the present growth or fall percentage are of the utmost importance. The other data points, when viewed together, present a better picture and help one decide whether investing in the stock of a company would be a good idea or not. A single snapshot of the data might not prove very useful, though. Scraping the data at regular intervals and using a large dataset to predict future stock prices might prove more useful in the long run.
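
Building such a dataset can be as simple as appending one timestamped row per scrape to a CSV file. The following is a minimal sketch under a few assumptions: the input has the same shape as the company_json dictionary produced by the scraper, and the names flatten, append_snapshot and the TIMESTAMP and SYMBOL columns are hypothetical.

```python
import csv
import os
from datetime import datetime, timezone


def flatten(company_json):
    """Merge the top-level values and OTHER_DETAILS into one flat row."""
    row = {k: v for k, v in company_json.items() if k != "OTHER_DETAILS"}
    for key, value in company_json.get("OTHER_DETAILS", {}).items():
        # Lists such as EARNINGS_DATE are joined so they fit in one CSV cell.
        row[key] = "; ".join(value) if isinstance(value, list) else value
    return row


def append_snapshot(path, symbol, company_json, fieldnames, when=None):
    """Append one timestamped row to a CSV file, writing the header if new."""
    when = when or datetime.now(timezone.utc)
    row = {"TIMESTAMP": when.isoformat(), "SYMBOL": symbol}
    row.update(flatten(company_json))
    columns = ["TIMESTAMP", "SYMBOL"] + list(fieldnames)
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as handle:
        # Missing fields become empty cells; unexpected fields are ignored.
        writer = csv.DictWriter(handle, fieldnames=columns,
                                extrasaction="ignore", restval="")
        if is_new:
            writer.writeheader()
        writer.writerow(row)
```

Passing a fixed list of field names keeps the columns stable even when a value is missing from a particular scrape, which makes the file easier to load for analysis later.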

What Other Finance Data can You Crawl?

The finance data that we scraped comes from the summary page of a company on Yahoo Finance. Each company also has a chart page, where you can see stock data for up to five years. While that data is not very structured, being able to crawl it can give you good insight into the historical performance of a company’s stock.

The statistics page gives you more than thirty different data points, over and above the ones we captured. These are in a structured format and can be scraped using code similar to what we provided.

The historical data page contains the data from the chart page, but in a format similar to a CSV, so you can easily extract it and store it in a CSV file. Other pages like profile, financials, analysis, options, holders and sustainability might give you a good estimate of how the shares of the company will perform compared to its competitors.

Use Cases of Yahoo Finance Data

You can use the historical data to predict prices of stocks, or you could create an application that uses regularly updated data from your scraping engine to provide updates to users. You can recommend when one should sell their stocks, or when they should buy more – the possibilities are endless!

In case you have a small team and cannot decide how to get started with web scraping, you can take the help of a committed and experienced web scraping team like PromptCloud. Our web scraping requirement gathering dashboard makes submitting requirements, getting a quote, and finally getting the data in a plug and play format a simple and straightforward process.

Disclaimer: The code provided in this tutorial is only for learning purposes. We are not responsible for how it is used and assume no liability for any detrimental usage of the source code.