Microsoft Excel is undoubtedly one of the best tools for managing information in a structured form. Excel is like the Swiss army knife of data, with its great features and capabilities. Here is how MS Excel can be used as a basic web scraping tool to extract web data directly into a worksheet. We will be using Excel web queries to make this happen, and this guide walks through how to scrape data from a website into Excel.

The web queries feature of MS Excel fetches data from a website and extracts it into a worksheet. It can automatically find the tables on a webpage and let you pick the particular table you need the data from. Apart from extracting data from web pages, web queries can also be handy in situations where an ODBC connection is impossible to maintain. Let’s see how web queries work and how you can use them to crawl HTML tables and scrape data from a website into Excel.

Excel Web Scraping Explained

To pull data from a website into Excel, there are a few steps involved. We’ll start with a simple web query to crawl data from the Yahoo Finance page. This page is particularly easy to crawl and hence is a good fit for learning the method. The page is also pretty straightforward and doesn’t hold important information in the form of links or images. Here is the tutorial video for using MS Excel as a web scraping tool.

1) Create a New Web Query

1. Select the cell in which you want the data to appear

2. Click on Data > From Web

3. The New Web query box will pop up as shown below

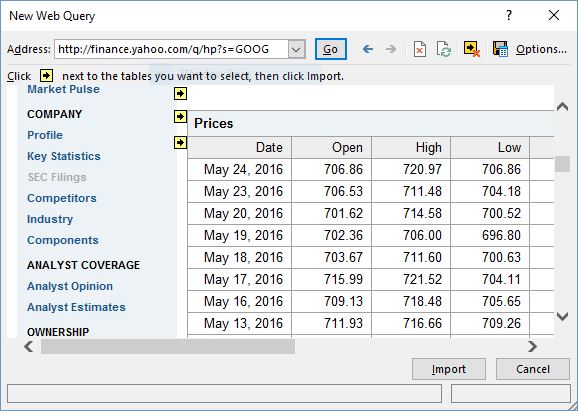

4. Enter the URL of the web page you need to extract data from in the Address bar and hit the Go button.

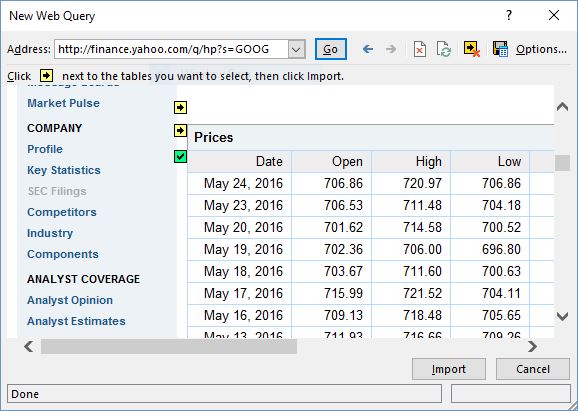

5. Click the yellow-black buttons next to the tables you need to extract data from.

6. After selecting the required tables, click the Import button and you’re done. Excel will now download the contents of the selected tables into your worksheet.

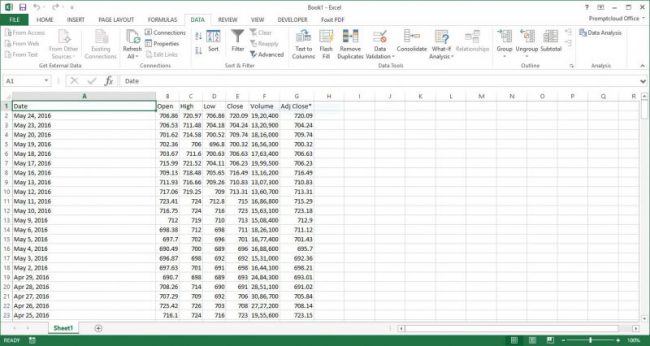
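A web query does roughly what the steps above describe: it finds the `<table>` elements on the page and lets you pick which ones to import. As a rough illustration of that idea (not of how Excel itself is implemented), here is a minimal sketch using Python's standard-library `html.parser`. The names `TableCollector` and `extract_tables` are hypothetical, and the sketch assumes well-formed markup: it does not handle `colspan`/`rowspan`, nested tables, or cells whose closing tags are omitted.

```python
from html.parser import HTMLParser


class TableCollector(HTMLParser):
    """Collect the text of every <table> on a page as rows of cell strings."""

    def __init__(self):
        super().__init__()
        self.tables = []   # one entry per <table>; each is a list of rows
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse runs of whitespace, roughly as a worksheet cell shows text
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:
                self.tables[-1].append(self._row)
            self._row = None
            self._cell = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def extract_tables(html):
    """Return every table found in the HTML, in page order."""
    parser = TableCollector()
    parser.feed(html)
    parser.close()
    return parser.tables
```

Picking `extract_tables(html)[1]` corresponds to clicking the button next to the second table on the page; the resulting rows can then be written to a CSV file and opened in Excel.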

Once you have the data scraped into your Excel worksheet, you can do a host of things like creating charts, sorting, formatting, etc. to better understand or present the data in a simpler way.

2) Customizing the Query

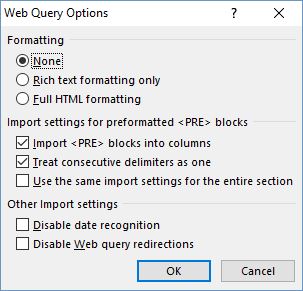

Once you have created a web query, you can customize it to your requirements. To do this, right-click a cell with the extracted data and choose Edit Query. The page you were querying appears again; click the Options button to the right of the address bar. A new pop-up box opens where you can customize how the web query interacts with the target page. The options here let you change basic settings such as formatting and redirections.

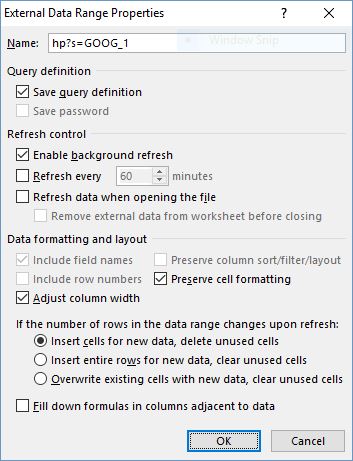

Apart from this, you can also alter the data range options by right-clicking any cell with the query results and selecting Data Range Properties. In the Data Range Properties dialog box that pops up, you can make the required changes. You might want to rename the data range to something you can easily recognize, like ‘Stock Prices’.

3) Auto-Refresh

Auto-refresh is a feature of web queries worth mentioning, and one that makes an Excel web scraper truly powerful. You can make the extracted data refresh automatically, so that your worksheet picks up changes on the source website. You can set how often the data is updated from the source web page in the data range properties: enable auto-refresh by ticking the box beside ‘Refresh every’ and setting your preferred time interval.

Excel Web Scraping at Scale

Although web data extraction with Excel can be a great way to crawl HTML tables from websites into a worksheet, it is nowhere close to an enterprise web scraping solution. It can prove useful if you are collecting data for a college research paper or you are a hobbyist looking for a low-budget way to get your hands on some data. If you need data for business, you will have to depend on a web data extraction service with expertise in web scraping at scale. Outsourcing the complicated web scraping process will also give you more room to deal with other things that need attention.

How to Pull Data from a Website into Excel

Pulling data from a website into Excel can be a game-changer for anyone looking to analyze information quickly and efficiently. Whether you need data for market research, competitor analysis, or tracking trends, this process allows you to transform raw web data into actionable insights. In this guide, we’ll walk you through the steps to extract data from a website and import it directly into Excel, covering both manual and automated methods.

1. Manual Import Using Excel’s Built-In Tools

Excel provides a straightforward way to import data from a website through its “Get & Transform” feature. Here’s how:

- Step 1: Open Excel and go to the “Data” tab.

- Step 2: Select “From Web” in the “Get & Transform Data” group.

- Step 3: Enter the URL of the webpage you want to extract data from.

- Step 4: Excel will load the data into a navigator window. You can select the specific tables or data you need and click “Load” to import it directly into your worksheet.

This method is ideal for small datasets and occasional data pulls, offering a quick way to get started without any additional tools.

2. Automated Data Extraction with Web Scraping Tools

For more complex or large-scale data extraction tasks, web scraping is the way to go. Automated web scraping tools, like the ones offered by PromptCloud, allow you to extract vast amounts of data efficiently and import it into Excel for analysis.

- Step 1: Identify the data you need and the website where it’s located.

- Step 2: Use a web scraping tool or service to automate the data extraction process, capturing everything from product prices to customer reviews.

- Step 3: Export the scraped data in CSV format, which can be easily imported into Excel for further processing.

This approach is highly scalable and ideal for businesses that require frequent updates or need to handle large volumes of data.
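When you export scraped data yourself, a common snag is that Excel may misread non-ASCII characters in a UTF-8 CSV unless the file starts with a byte-order mark. Here is a minimal Python sketch that encodes the file with the `utf-8-sig` codec to add that mark; the function names and sample rows are illustrative only.

```python
import csv
import io
from pathlib import Path


def excel_csv_bytes(header, rows):
    """Render header and rows as CSV bytes that Excel decodes as UTF-8.

    The 'utf-8-sig' codec prepends a byte-order mark (BOM), which Excel uses
    to recognise UTF-8, so characters such as 'é' are not garbled on opening.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # default dialect: comma-separated, \r\n line ends
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue().encode("utf-8-sig")


def save_excel_csv(path, header, rows):
    """Write the CSV bytes to disk, e.g. save_excel_csv("prices.csv", ...)."""
    Path(path).write_bytes(excel_csv_bytes(header, rows))
```

The `csv` module quotes any field containing a comma, so a value like `Tea, green` stays in one cell after import.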

Best Practices for Data Extraction

When pulling data from websites, it’s important to adhere to ethical standards and best practices. Always ensure that your data extraction efforts comply with the website’s terms of service and consider the impact of your activities on the website’s performance.
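One practical way to limit your impact on a site's performance is to space out your requests. The sketch below enforces a minimum gap between successive requests; the class name `PoliteDelay` and the interval you choose are assumptions, since a suitable delay depends on the site and on any crawl delay it publishes.

```python
import time


class PoliteDelay:
    """Enforce a minimum gap between successive requests to one site."""

    def __init__(self, min_interval_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval_seconds
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Call `wait()` right before each request. Passing in `clock` and `sleep` keeps the class easy to test without actually sleeping.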

By following these steps, you can seamlessly pull data from a website into Excel, enabling you to leverage valuable web data for your business decisions. Whether you’re using Excel’s built-in tools for quick data imports or employing advanced web scraping techniques for large-scale projects, PromptCloud is here to support your data extraction needs.

Frequently Asked Questions

#1: How to extract data from a website to Excel?

To extract data from a website to Excel, you can follow these steps:

- Using Excel’s Built-in “Get & Transform” Tool:
  - Open Excel and navigate to the “Data” tab.
  - Click on “From Web” in the “Get & Transform Data” section.
  - Enter the URL of the website you want to extract data from.
  - Excel will display the data in a navigator window, where you can select specific tables or data elements to import.
  - Click “Load” to import the selected data into your Excel spreadsheet.

- Automating the Process with Web Scraping Tools:
  - For more complex or large-scale data extraction, consider using web scraping tools or services.
  - These tools can automate the process, extracting data from multiple web pages and exporting it in CSV or Excel formats.
  - Once you have the scraped data, you can easily import it into Excel for further analysis.

- Considerations:
  - Always ensure that your data extraction efforts comply with the website’s terms of service.
  - For best results, make sure the data you’re extracting is structured, such as tables or lists, which are easier to import into Excel.
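When a tool extracts data from multiple web pages, the rows from each page usually end up in one file. Here is a minimal sketch of that step, assuming paginated URLs built from a hypothetical template such as `https://example.com/list?page={}` and a hypothetical `fetch_rows(url)` callable that returns a page's data rows.

```python
def page_urls(template, first, last):
    """Build URLs for pages first..last (inclusive) from a '{}' template."""
    return [template.format(n) for n in range(first, last + 1)]


def combine_pages(urls, fetch_rows):
    """Merge rows from several pages into one table.

    Each output row starts with its source URL, so the combined sheet
    stays traceable after it is imported into Excel.
    """
    combined = []
    for url in urls:
        for row in fetch_rows(url):
            combined.append([url, *row])
    return combined
```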

By following these methods, you can effectively pull data from a website into Excel for analysis, reporting, or decision-making.

#2: Is web scraping legal?

Web scraping is generally legal, but it depends on how and what you scrape. Here are some key points to consider:

- Terms of Service: Many websites have terms of service that explicitly prohibit scraping. Scraping these websites without permission can lead to legal consequences.

- Public vs. Private Data: Scraping publicly available data is usually legal, but accessing private or restricted data without authorization is illegal.

- Ethical Considerations: Even when scraping is legal, it’s important to do so ethically. This means not overwhelming a website’s server with requests, respecting robots.txt files, and ensuring that your scraping activities don’t harm the website’s performance.

- Jurisdiction: Laws around web scraping can vary by country, so it’s essential to be aware of the legal environment in the region where you’re operating.
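Respecting robots.txt can be automated with Python's standard-library `urllib.robotparser`. In real use you would call `set_url()` with the site's robots.txt address and then `read()`; the minimal sketch below parses text you already have so the logic can be checked offline. The helper name `load_robots` and the user-agent string in the example are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def load_robots(robots_txt):
    """Build a RobotFileParser from robots.txt text that was already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # record that rules are loaded; crawl_delay() checks this
    return parser


# Example: rules = load_robots(text)
#          rules.can_fetch("MyBot", "https://example.com/private/data")
#          rules.crawl_delay("MyBot")  # seconds, or None if not specified
```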

In summary, while web scraping is legal in many cases, it’s crucial to follow legal guidelines, respect website terms of service, and operate within ethical boundaries to avoid potential issues.

#3: How do I copy a data table from a website to Excel?

Copying a data table from a website to Excel can be done in a few simple steps:

- Manual Copy-Paste Method:
  - Open the website with the data table you want to copy.
  - Highlight the table by clicking and dragging your cursor over the data.
  - Right-click the highlighted area and select “Copy” (or use Ctrl+C on your keyboard).
  - Open Excel and select the cell where you want to paste the data.
  - Right-click in the selected cell and choose “Paste” (or use Ctrl+V on your keyboard).
  - The data table should now appear in your Excel worksheet.

- Using Excel’s “Get & Transform” Feature:
  - Open Excel and go to the “Data” tab.
  - Select “From Web” in the “Get & Transform Data” section.
  - Enter the URL of the webpage containing the data table.
  - Excel will display the available tables in a navigator window. Select the table you want and click “Load.”
  - The table will be imported directly into your Excel sheet.

- Cleaning Up the Data:
  - After pasting or importing, you may need to format the data to fit your needs. This can involve adjusting column widths, removing extra rows or columns, and applying any necessary data formatting.
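The cleanup step can also be scripted if you prepare tables before they reach Excel. Here is a minimal sketch, assuming rows arrive as lists of strings, numbers use a comma as the thousands separator, and `$` is the only currency sign (adjust for other locales); `clean_table` and `to_number` are hypothetical helpers.

```python
def clean_table(rows):
    """Tidy rows copied or imported from a web page.

    Strips surrounding whitespace from every cell, drops rows that are
    entirely empty, and drops columns that are empty in every remaining row.
    """
    stripped = [[cell.strip() for cell in row] for row in rows]
    kept_rows = [row for row in stripped if any(row)]
    if not kept_rows:
        return []
    width = max(len(row) for row in kept_rows)
    # Pad short rows so every row has the same number of columns
    padded = [row + [""] * (width - len(row)) for row in kept_rows]
    keep_cols = [c for c in range(width) if any(row[c] for row in padded)]
    return [[row[c] for c in keep_cols] for row in padded]


def to_number(text):
    """Convert text like '1,234.50' or '$12' to a float, else return it unchanged."""
    cleaned = text.replace(",", "").replace("$", "").strip()
    try:
        return float(cleaned)
    except ValueError:
        return text
```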

By following these steps, you can easily copy and transfer a data table from a website to Excel for further analysis or reporting.

#4: How do I extract data from Chrome to Excel?

#5: How do I automatically pull data from a website into Excel?

#6: How do you make Excel automatically update data from a website?

To make Excel automatically update data from a website, you can use Excel’s built-in features like Power Query (also known as “Get & Transform”) or connect it to an external data source like a Google Sheet or an API. Here’s how you can set it up:

1. Using Excel’s Power Query to Automatically Update Data

Excel’s Power Query tool allows you to import data from a website and set it to refresh automatically. Here’s how to do it:

Step 1: Open Excel and navigate to the “Data” tab.

Step 2: Click on “From Web” in the “Get & Transform Data” section.

Step 3: Enter the URL of the webpage containing the data you want to pull into Excel and click “OK.”

Step 4: Excel will load a preview of the data tables available on the webpage. Select the table or data you want to import, then click “Load.”

Step 5: Once the data is loaded into Excel, you can refresh it manually: right-click the data table and select “Refresh,” or go to the “Data” tab and select “Refresh All” to refresh all data connections.

Step 6: To set up automatic refresh intervals, click the “Data” tab, select “Queries & Connections,” then right-click your query in the pane and choose “Properties.” In the Query Properties window, check the “Refresh every” box and set your desired time interval (e.g., every 30 minutes). You can also refresh the data each time the workbook opens by selecting “Refresh data when opening the file.”
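Once “Refresh every” is ticked, Excel runs the schedule for you. If you instead keep a CSV or workbook up to date from a script, the same idea is a timed loop. This is a minimal sketch: `fetch` and `save` are hypothetical callables you would supply (for example, one that downloads the page and one that writes a CSV), and the interval is in seconds, so Excel's 30 minutes corresponds to 1800.

```python
import time


def refresh_every(fetch, save, interval_seconds, max_runs=None):
    """Call fetch() and pass its result to save() every interval_seconds.

    Stops after max_runs refreshes if given; otherwise runs until interrupted.
    Returns the number of completed refreshes.
    """
    runs = 0
    while max_runs is None or runs < max_runs:
        started = time.monotonic()
        save(fetch())
        runs += 1
        if max_runs is not None and runs >= max_runs:
            break
        # Sleep for whatever is left of the interval after this refresh
        elapsed = time.monotonic() - started
        time.sleep(max(0.0, interval_seconds - elapsed))
    return runs
```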

2. Using an API for Automatic Data Updates

If the website provides an API, you can connect to it directly from Excel:

Step 1: Obtain API access and documentation from the website.

Step 2: In Excel, go to the “Data” tab and select “From Web” under “Get & Transform Data.”

Step 3: Enter the API endpoint URL and follow the prompts to configure the data request.

Step 4: After importing the data, you can set up automatic refresh in the same way as with Power Query by configuring the refresh settings in Query Properties.
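Many APIs return a JSON array of records, which has to become rows and columns before it fits a worksheet; in Power Query this is done by converting the list to a table and expanding its columns. The sketch below shows the same reshaping in Python, assuming a flat array of objects; `json_records_to_rows` and the field names in the example are hypothetical.

```python
import json


def json_records_to_rows(payload, columns):
    """Turn a JSON array of objects into a header row plus data rows.

    Missing keys become empty cells so every row lines up under the header.
    """
    records = json.loads(payload)
    rows = [list(columns)]
    for record in records:
        rows.append([record.get(col, "") for col in columns])
    return rows
```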

3. Using Google Sheets as an Intermediate Step

You can also use Google Sheets to automatically update web data and then link it to Excel:

Step 1: In Google Sheets, use the IMPORTHTML function to pull data from a website:

=IMPORTHTML("URL", "table", index)

Replace “URL” with the webpage URL and specify the table index.

Step 2: Google Sheets will automatically refresh the data at regular intervals.

Step 3: To link this data to Excel, use Excel’s “From Web” or “From File” options in the “Data” tab to connect to the Google Sheet or download it as an Excel file.

By setting up automatic refresh in Excel using Power Query, connecting to an API, or using Google Sheets as an intermediary, you can ensure that your Excel workbook always contains the most up-to-date information from your desired websites. This process is especially useful for tracking live data such as stock prices, weather updates, or any regularly updated online data.

#7: How do I sync data from a website to Excel?

To sync data from a website to Excel, you can use several methods depending on the frequency and complexity of the data you need. Here’s how you can do it:

1. Using Excel’s Power Query (Get & Transform)

Power Query allows you to connect to a website and pull data directly into Excel. Here’s how to set it up for syncing:

Step 1: Open Excel and go to the “Data” tab.

Step 2: Click on “From Web” under the “Get & Transform Data” section.

Step 3: Enter the URL of the webpage from which you want to sync data, and click “OK.”

Step 4: Excel will display a preview of the available data tables on the webpage. Select the table or data you need and click “Load.”

Step 5: The data will be imported into Excel. To sync this data automatically, you need to set up a refresh schedule:

- To refresh on demand, right-click the data table in Excel and select “Refresh.”

- For automatic syncing, go to the “Data” tab, select “Queries & Connections,” then right-click your query and choose “Properties.”

- In the Query Properties window, check “Refresh every” and set the desired interval (e.g., every 30 minutes). You can also choose “Refresh data when opening the file.”

This method will keep your Excel workbook in sync with the website’s data at the intervals you specify.

2. Using an API for Real-Time Sync

If the website offers an API (Application Programming Interface), you can sync data in real-time:

Step 1: Obtain API access details and documentation from the website.

Step 2: In Excel, go to the “Data” tab and select “From Web.”

Step 3: Enter the API endpoint URL provided by the website, and follow the prompts to configure your query.

Step 4: Once the data is pulled into Excel, you can set up automatic syncing using the same refresh interval settings as with Power Query.

This method allows for more precise, near real-time data syncing (limited by the refresh interval you set), which is especially useful for live data such as financial markets or news updates.

3. Using Google Sheets with Excel

If you prefer a more straightforward approach, you can use Google Sheets to sync data from a website and then connect it to Excel:

Step 1: In Google Sheets, use the IMPORTHTML function to pull data from a website:

=IMPORTHTML("URL", "table", index)

Replace “URL” with the webpage’s URL and specify the table index.

Step 2: Google Sheets will automatically update the data periodically.

Step 3: To sync this data with Excel, either export the Google Sheet to Excel or use Excel’s “From Web” or “From File” option in the “Data” tab to connect directly to the Google Sheet.

This method provides a simple way to keep data synced between the web and Excel, with Google Sheets acting as the intermediary.

To sync data from a website to Excel, you can use Power Query for periodic updates, APIs for real-time data syncing, or Google Sheets for a simpler approach. Each method allows you to keep your Excel data up-to-date with the latest information from your chosen website, helping you make informed decisions based on the most current data available.

#8: Can Excel do web scraping?

Yes, Excel can perform basic web scraping using its built-in tools, specifically through the “Get & Transform” feature, also known as Power Query. This allows you to pull data directly from a webpage into Excel. Here’s how Excel can be used for web scraping:

1. Using the “Get & Transform” (Power Query) Feature

Excel’s Power Query is a powerful tool that allows you to connect to a webpage, extract data, and import it directly into Excel for further analysis. Here’s a basic guide:

Step 1: Open Excel and go to the “Data” tab.

Step 2: Click on “From Web” in the “Get & Transform Data” section.

Step 3: Enter the URL of the webpage from which you want to scrape data and click “OK.”

Step 4: Excel will analyze the webpage and display the available data tables in a navigator window. Select the table or data you want to scrape.

Step 5: Click “Load” to import the data into Excel. You can also choose to “Load To” if you want to place the data in a specific location within your worksheet.

Step 6: Once the data is loaded into Excel, you can refresh it manually by right-clicking the data table and selecting “Refresh,” or set up an automatic refresh schedule.

2. Limitations of Excel’s Web Scraping

While Excel is capable of scraping data from websites, it has some limitations:

- Structured Data Only: Excel works best with structured data, such as tables. If the data is not organized in a recognizable table format, Excel may have difficulty extracting it.

- Basic Automation: Excel’s web scraping capabilities are limited in terms of automation and customization compared to dedicated web scraping tools.

- Dynamic Content: Excel may struggle with websites that use JavaScript to load content dynamically, as Power Query may not capture data that isn’t immediately visible in the page source.

3. When to Use Excel for Web Scraping

Excel is a good choice for simple web scraping tasks, such as extracting tables or lists from websites that have a static structure. It’s ideal for users who need to quickly pull data from the web for analysis without requiring complex setup or coding.

For more advanced or large-scale web scraping needs, using a dedicated web scraping tool or programming language like Python with libraries like BeautifulSoup or Scrapy may be more appropriate.
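As a starting point in Python, the page download itself needs only the standard library. The sketch below fetches a page with `urllib.request` and decodes it using the charset the server declares; the resulting HTML would then go to a parser such as BeautifulSoup. The function name and the default user-agent string are placeholders, not required values.

```python
from urllib.request import Request, urlopen


def fetch_html(url, user_agent="example-scraper/0.1", timeout=10):
    """Download a page and decode it using the charset the server declares."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=timeout) as response:
        raw = response.read()
        # Fall back to UTF-8 when the Content-Type header names no charset
        charset = response.headers.get_content_charset() or "utf-8"
    return raw.decode(charset, errors="replace")
```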

Yes, Excel can do basic web scraping using its “Get & Transform” feature. This allows you to extract structured data, such as tables, directly from websites and import it into Excel. However, for more complex or large-scale scraping tasks, you might need to explore more specialized tools or programming languages.
