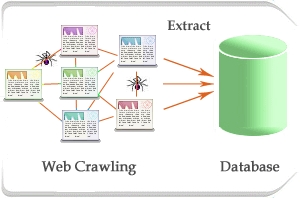

In the present day and age, web scraping comes across as a handy tool in the right hands. In essence, web scraping means quickly crawling the web for specific information, using pre-written programs. Scraping efforts are designed to crawl and analyze the data of entire websites, and save the parts that are needed. Many industries have successfully used web scraper to create massive banks of relevant, actionable data that they use on a daily basis to further their business interests and provide better service to customers. This is the age of the Big Data, and website scraping is one of the ways in which businesses can tap into this huge data repository and come up with relevant information that aids them in every way.

Web scrapping, however, does come with its own share of problems and roadblocks. With every passing day, a growing number of websites are trying to actively minimize the instance of scraping and protect their own data to stay afloat in today’s situation of immense competition. There are several other complications which might arise and several traps that can slow you down during your web scraping pursuits. Knowing about these traps and how to avoid them can be of great help if you want to successfully accomplish your web scraping goals and get the amount of data that you require.

Complications in Web Scraping

Over time, various complications have risen in the field of websitescraper. Many websites have started to get paranoid about data duplication and data security problems and have begun to protect their data in many ways. Some websites are not generally agreeable to the moral and ethical implications of web scraping, and do not want their content to be scraped. There are many places where website owners can set traps and roadblocks to slow down or stop web scraping activities. Major search engines also have a system in place to discourage scraping of search engine results. Last but not the least, many websites and web services announce a blanket ban on website scrapers and say the same in their terms and conditions, potentially leading to legal issues in the event of any scraping.

Here are some of the most common complications that you might face during your web scraping efforts which you should be particularly aware about –

- Some locations on the intranet might discourage web crawling to prevent data duplication or data theft.

- Many websites have in place a number of different traps to detect and ban web scraping tools and programs.

- Certain websites make it clear in their terms and conditions that they consider data scraping an infringement of their privacy and might even consider legal redress.

- In a number of locations, simple measures are implemented to prevent non-human traffic to websites, making it difficult for web scraping tools to go on collecting data at a fast pace.

To surmount these difficulties, you need a deeper and more insightful understanding of the way web scraping works and also the attitude of website owners towards web scraping efforts. Most major issues can be subverted or quietly avoided if you maintain good working practice during your scrape website efforts and understand the mentality of the people whose sites you are scraping.

Common Problems

With automated scraping, you might face a number of common problems. The behaviour of web scraping programs or spiders presents a certain picture to the target website. It then uses this behavior to distinguish between human users and web scraping spiders. Depending on that information, a website may or may not employ particular web scraping traps to stop your efforts. Some of the commonly employed traps are –

Crawling Pattern Checks –

Some websites detect scraping activities by analyzing crawling patterns. Web scraping robots follow a distinct crawling pattern which incorporates repetitive tasks like visiting links and copying content. By carefully analyzing these patterns, websites can determine that they are being caused by a web scraping robot and not a human user, and can take preventive measures.

Honeypots –

Some websites have honeypots in their webpages to detect and block web scraping activities. These can be in the form of links that are not visible to human users, being disguised in a certain way. Since your web crawler program does not operate the way a human user does, it can try and crawl information from that link. As a result, the website can detect the scraping effort and block the source IP addresses.

Policies –

Some websites make it absolutely apparent in their terms and conditions that they are particularly averse to screenscraper activities on their content. This can act as a deterrent and make you vulnerable against possible ethical and legal implications.

Infinite Loops –

Your web scraping program can be tricked into visiting the same URL again and again by using certain URL building techniques.

These traps in site crawler can prove to be detrimental to your efforts and you need to find innovative and effective ways to surpass these problems. Learning some web crawler tips to avoid traps and judiciously using them is a great way of making sure that your web scraping requirements are met without any hassle.

What you can do

The first and foremost rule of thumb about web scraping is that you have to make your efforts as inconspicuous as possible. This way you will not arouse suspicion and negative behavior from your target websites. To this end, you need a well-designed web scraping program with a human touch. Such a program can operate in flexible ways so as to not alert website owners through the usual traffic criteria used to spot scraping tools.

Some of the measures that you can implement to ensure that you steer clear of common page crawler traps are –

- The first thing that you need to do is to ascertain if a particular website that you are trying to crawl has any particular dislike towards web scraping tools. If you see any indication in their terms and conditions, tread cautiously and stop scraping their website if you receive any notification regarding their lack of approval. Being polite and honest can help you get away with a lot.

- Try and minimize the load on every single website that you visit for scraping. Putting a high load on websites can alert them towards your intentions and often might cause them to develop a negative attitude. To decrease the overall load on a particular website, there are many techniques that you can employ.

- Start by caching the pages that you have already crawled to ensure that you do not have to load them again.Also, store the URLs of crawled pages.

- Take things slow and do not flood the website with multiple parallel requests that put a strain on their resources.

- Handle your scraping in gentle phases and take only the content you require.

- Your scraping spider should be able to diversify its actions, change its crawling pattern and present a polymorphic front to websites, so as not to cause an alarm and put them on the defensive.

- Arrive at an optimum crawling speed, so as to not tax the resources and bandwidth of the target website. Use auto throttling mechanisms to optimize web traffic and put random breaks in between page requests, with the lowest possible number of concurrent requests that you can work with.

- Use multiple IP addresses for your scraping efforts, or take advantage of proxy servers and VPN services. This will help to minimize the danger of getting trapped and blacklisted by a website.

- Be prepared to understand the respect the express wishes and policies of a website regarding web scraping by taking a good look at the target ‘robots.txt’ file. This file contains clear instructions on the exact pages that you are allowed to crawl, and the requisite intervals between page requests. It might also specify that you use a pre-determined user-agent identification string that classifies you as a scraping bot. adhering to these instructions minimizes the chance of getting on the bad side of website owners and risking bans.

Use an advanced tool for web scraping which can store and check data, URLs and patterns. Whether your web scraping needs are confined to one domain or spread over many, you need to appreciate that many website owners do not take kindly to scraping. The trick here is to ensure that you maintain industry best practices while extracting data from websites. This prevents any incident of misunderstanding and allows you a clear pathway to most of the data sources that you want to leverage for your requirements.

Hope this article helps in understanding the different traps and roadblocks that you might face during your web scraping endeavours. This will help you in figuring outsmart, sensible ways to work around them and make sure that your experience remains smooth. This way, you can keep receiving the important information that you need with web scraping. Following these basic guidelines can help you prevent getting banned or blacklisted and stay in the good books of website owners. This will allow you to continue with your web scraping activities unencumbered.

Image Credits: hi-tech-bpo