Web scraping, the automated extraction of data from websites, is a powerful way to gather information from the internet, and Excel is a natural place to put that data to work. This technique enables individuals and businesses to collect publicly available data from web pages and analyze it in a structured format. While web scraping can provide valuable insights and support business processes such as market research, competitive analysis, and price monitoring, it’s crucial to approach the practice with a clear understanding of its legal and ethical considerations.

Legally, web scraping occupies a gray area that varies by jurisdiction. The legality of scraping depends on several factors, including the website’s terms of service, the nature of the data being scraped, and how the data is used. Many websites include clauses in their terms of service that explicitly prohibit scraping, and ignoring these terms can lead to legal consequences. Furthermore, laws such as the Computer Fraud and Abuse Act (CFAA) in the United States and the General Data Protection Regulation (GDPR) in the European Union add further legal constraints on web scraping, especially when personal data is involved.

Microsoft Excel, known for its robust data management and analysis capabilities, is an excellent tool for organizing data obtained from web scraping. Excel allows users to sort, filter, and process large datasets, making it easier to derive meaningful insights from the collected data. Whether for academic research, business intelligence, or personal projects, Excel’s powerful features can help users efficiently manage and analyze web-scraped data. Here are a few things to know before you start scraping data from a website to Excel.

What You Need to Know Before Starting

Before diving into the world of web scraping and data management in Excel, it’s crucial to arm yourself with some foundational knowledge. Here’s what you need to know to ensure a smooth start:

Basic Knowledge of HTML and CSS Selectors

HTML (HyperText Markup Language) is the standard language for creating web pages. It provides the basic structure of sites, which is enhanced and modified by other technologies like CSS (Cascading Style Sheets) and JavaScript. Understanding HTML is fundamental to web scraping because it allows you to identify the content you wish to extract. Web pages are built using HTML elements, and knowing how these elements are structured and interact will enable you to navigate a website’s DOM (Document Object Model) tree and identify the data you want to collect.

CSS selectors are patterns used to select the elements you want to style in a webpage. In the context of web scraping, CSS selectors are invaluable for pinpointing specific elements within the HTML structure of a webpage. By learning how to use CSS selectors, you can efficiently extract items such as titles, prices, descriptions, and more, depending on your scraping objectives.
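
To make the idea concrete, here is a minimal sketch of what a simple selector such as `span.price` does: it picks out every `span` element whose class list contains `price`. The sketch uses only Python’s built-in `html.parser` so it runs without installing anything; Beautiful Soup’s `select()` method accepts such selectors directly and is the more usual choice. The sample markup, the class names, and the `select_text` helper are all hypothetical and exist only for illustration.

```python
from html.parser import HTMLParser

# Hypothetical sample markup, standing in for a real product page.
SAMPLE_HTML = """
<div class="product">
  <h2 class="title">Blue Mug</h2>
  <span class="price">$12.50</span>
</div>
<div class="product">
  <h2 class="title">Red Mug</h2>
  <span class="price sale">$9.99</span>
</div>
"""


class ClassTextCollector(HTMLParser):
    """Collect the text of every <tag> whose class list contains css_class.

    This mimics the simple CSS selector `tag.css_class`. It is a sketch:
    it does not handle an element nested inside another of the same tag.
    """

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.matches = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None.
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self._inside = True
            self.matches.append("")

    def handle_endtag(self, tag):
        if tag == self.tag:
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.matches[-1] += data


def select_text(html, tag, css_class):
    """Return the stripped text of each element matching tag.css_class."""
    collector = ClassTextCollector(tag, css_class)
    collector.feed(html)
    collector.close()
    return [text.strip() for text in collector.matches]


titles = select_text(SAMPLE_HTML, "h2", "title")    # like the selector h2.title
prices = select_text(SAMPLE_HTML, "span", "price")  # like the selector span.price
print(list(zip(titles, prices)))
```

Note that the second price is matched even though its class attribute is `price sale`: a class selector matches any element that carries the class, whatever other classes it also has.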

Understanding of Excel and Its Data Management Capabilities

Microsoft Excel is a powerful tool not just for data analysis but also for managing large datasets, including data that has been collected through web scraping and then cleaned and structured. Excel offers a range of features that can help you sort, filter, analyze, and visualize the scraped data:

- Data Sorting and Filtering: Excel allows you to organize your data according to specific criteria. This is particularly useful when dealing with large volumes of data, enabling you to quickly find the information you need.

- Formulas and Functions: Excel’s built-in formulas and functions can perform calculations, text manipulation, and data transformation, which are essential for analyzing scraped data.

- PivotTables: Excel’s premier analytical tool, a PivotTable can automatically sort, count, and total the data stored in one table or spreadsheet and display the summarized data in a second table.

- Data Visualization: Excel provides a variety of options to visualize your data through charts and graphs, helping you to identify patterns, trends, and correlations within your dataset.

- Excel Power Query: For more advanced users, Excel’s Power Query tool can import data from various sources, perform complex transformations, and load the refined data into Excel for further analysis.

By combining a solid understanding of HTML and CSS selectors with proficiency in Excel, you’ll be well-equipped to navigate the technical aspects of web scraping and effectively manage and analyze your data. Whether you’re looking to perform market research, track pricing trends, or gather information for academic purposes, these skills are essential for anyone looking to leverage the power of web scraping and data analysis.

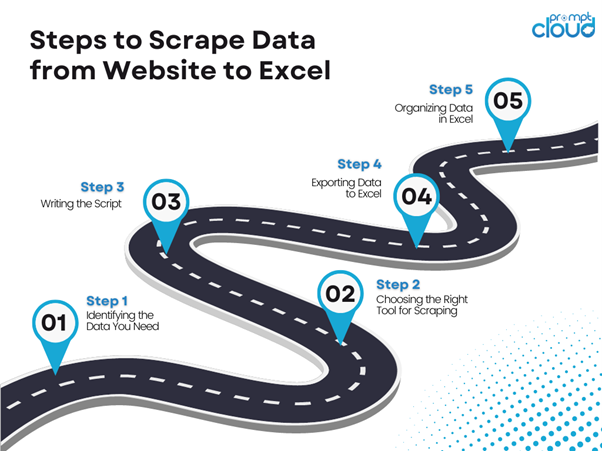

Steps to Scrape Data from Website to Excel

Step 1: Identifying the Data You Need

The first step in web scraping is to clearly define what data you’re interested in collecting. Use the developer tools in your browser to inspect the webpage and identify the HTML elements that contain the data.

Step 2: Choosing the Right Tool for Scraping

There are several tools at your disposal for scraping data:

- Python Libraries: Beautiful Soup for static content and Selenium for dynamic content are popular choices among developers for their flexibility and power.

- Dedicated Web Scraping Tools: Tools like Octoparse and ParseHub offer a user-friendly interface for those less inclined to code.

- Excel’s Built-in Web Import: The “From Web” option under “Get & Transform Data” (the successor to the older Web Query feature) allows you to import data directly from a web page into your spreadsheet.

Each method has its pros and cons, from the complexity of setup to the flexibility of the data you can scrape.

Step 3: Writing the Script

For those using Python, setting up your environment and writing a script is a critical step. Install Python and the necessary libraries, such as Beautiful Soup or Selenium, then write a script that requests the webpage, parses the HTML, and extracts the data using CSS selectors.
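
As a rough illustration of the request-and-parse flow, the sketch below downloads a page and reads the rows of an HTML table. It deliberately swaps in Python’s standard library (`urllib.request` and `html.parser`) for Beautiful Soup so that it runs with no installs; the function names, the `example-scraper/0.1` user agent string, the URL in the comment, and the sample table are all assumptions made for this example.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TableRowParser(HTMLParser):
    """Collect the cell text of every <tr> as a list of strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text pieces of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None  # discard a cell left open by sloppy markup


def fetch_html(url, timeout=10):
    """Download a page and return its HTML as text."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def parse_table_rows(html):
    """Return every table row in the HTML as a list of cell strings."""
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Offline demonstration on hypothetical markup. For a real page, pass
# fetch_html("https://example.com/prices") instead of SAMPLE_HTML.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Blue Mug</td><td>$12.50</td></tr>
  <tr><td>Red Mug</td><td>$9.99</td></tr>
</table>
"""
rows = parse_table_rows(SAMPLE_HTML)
print(rows)
```

Keeping the download and the parsing in separate functions makes the parser easy to try against saved HTML before pointing it at a live site. This sketch only sees what is in the raw HTML, so pages that build their tables with JavaScript would still call for a browser-driving tool such as Selenium.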

Step 4: Exporting Data to Excel

Once you’ve captured the data, it’s time to bring it into Excel. You can enter the data manually, use a Python library like pandas to export it to an Excel file, or use Excel’s “From Web” import under “Get & Transform Data” to pull it in directly.
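
One simple way to hand scraped rows to Excel from a script is a CSV file, which Excel opens directly. The sketch below uses Python’s built-in `csv` module rather than pandas so that it has no dependencies; with pandas installed, `DataFrame.to_excel` would write a native .xlsx workbook instead (it needs an Excel writer engine such as openpyxl). The sample rows, the helper names, and the `scraped.csv` file name are hypothetical.

```python
import csv
import io

# Hypothetical scraped rows: a header followed by data rows.
rows = [
    ["Product", "Price"],
    ["Blue Mug", "12.50"],
    ["Red Mug", "9.99"],
]


def write_rows(rows, file_obj):
    """Write rows as CSV to any open text file object."""
    writer = csv.writer(file_obj)
    writer.writerows(rows)


def save_for_excel(rows, path):
    """Write rows to a CSV file that Excel can open."""
    # utf-8-sig adds a byte-order mark so Excel detects UTF-8;
    # newline="" lets the csv module control the line endings.
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        write_rows(rows, handle)


# Demonstrate in memory; call save_for_excel(rows, "scraped.csv")
# to write an actual file.
buffer = io.StringIO()
write_rows(rows, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

The `csv` module quotes any value that contains a comma or a line break, so product names or descriptions with commas in them still land in a single cell.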

Step 5: Organizing Data in Excel

After importing the data into Excel, use its built-in features to clean and organize the data. This might include removing duplicates, sorting and filtering the data, or using formulas for more complex transformations.
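
Some of this cleanup can also be done in the script before the data ever reaches Excel. The sketch below is one possible approach, not a required step: it drops exact duplicate rows, converts price text into numbers, and sorts by price. It assumes each row is a (name, price) pair and that prices use “$” and “,” as the currency symbol and thousands separator; the `clean_rows` name and the sample data are hypothetical.

```python
def clean_rows(rows):
    """Drop exact duplicate rows, parse prices, and sort by price."""
    seen = set()
    cleaned = []
    for name, price_text in rows:
        # Trim stray whitespace so "Red Mug " and "Red Mug" compare equal.
        key = (name.strip(), price_text.strip())
        if key in seen:
            continue
        seen.add(key)
        # Assumed format: "$" currency symbol and "," thousands separator.
        price = float(key[1].replace("$", "").replace(",", ""))
        cleaned.append((key[0], price))
    return sorted(cleaned, key=lambda row: row[1])


raw = [
    ("Blue Mug", "$12.50"),
    ("Red Mug ", "$9.99"),
    ("Blue Mug", "$12.50"),
    ("Travel Flask", "$1,024.00"),
]
cleaned = clean_rows(raw)
print(cleaned)
```

Storing prices as numbers rather than text means Excel can sort, sum, and chart the column without any further conversion.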

In Conclusion

Web scraping into Excel is a powerful technique for extracting valuable data from the web, enabling businesses and individuals to make informed decisions based on up-to-date information. Whether you’re analyzing market trends, gathering competitive intelligence, or conducting academic research, the ability to efficiently scrape and analyze data in Excel can significantly enhance your capabilities. By following the steps in this guide to scraping data from a website to Excel, you can start leveraging web data to its full potential.

However, web scraping comes with its challenges, including legal and ethical considerations, as well as technical hurdles. It’s crucial to navigate these carefully to ensure your data collection is compliant and effective. For those looking for a more robust solution that handles the complexities of web scraping at scale, PromptCloud offers a comprehensive suite of web scraping services. Our advanced technology and expertise in data extraction can simplify the process for you, delivering clean, structured data directly from the web to your fingertips.

Whether you’re a seasoned data analyst or just getting started, PromptCloud can help you harness the power of web data. Contact us today to learn more about our services and how we can help you achieve your data goals. By choosing PromptCloud, you’re not just accessing data; you’re unlocking the insights needed to drive your business forward.

Get in touch with us at sales@promptcloud.com or schedule a demo.

Frequently Asked Questions (FAQs)

How do I extract data from a website to Excel?

Extracting data from a website to Excel can be done through various methods, including manual copy-pasting, using Excel’s built-in “Get & Transform Data” feature (previously known as “Web Query”), or through programming methods using VBA (Visual Basic for Applications) or external APIs. The “Get & Transform Data” feature allows you to connect to a web page, select the data you want to import, and bring it into Excel for analysis. For more complex or dynamic websites, you might consider using VBA scripts or Python scripts (with libraries like BeautifulSoup or Selenium) to automate the data extraction process, and then import the data into Excel.

Can Excel scrape websites?

Yes, Excel can scrape websites, but its capabilities are somewhat limited to simpler, table-based data through the “Get & Transform Data” feature. For static pages and well-structured data, Excel’s built-in tools can be quite effective. However, for dynamic content loaded through JavaScript or for more complex scraping needs, you might need to use additional tools or scripts outside of Excel and then import the data into Excel for analysis.

Is it legal to scrape a website?

The legality of web scraping depends on several factors, including the website’s terms of service, the data being scraped, and the way the scraped data is used. While public information might be considered fair game, scraping personal data without consent can violate privacy laws such as the GDPR in the EU. Websites’ terms of service often have clauses about automated access or data extraction, and violating these terms can lead to legal actions. It’s crucial to review the legal guidelines and obtain permission when necessary before scraping a website.

How do I automatically update data from a website in Excel?

To automatically update data from a website in Excel, you can use the “Get & Transform Data” feature to establish a connection to the web page from which you’re extracting data. Once the import is set up, the query’s properties let you refresh the data at regular intervals or whenever the workbook is opened, ensuring that you have the latest information from the website. For more advanced scenarios, using VBA scripts or connecting to an API can provide more flexibility in how data is fetched and updated, allowing for more frequent or conditional updates based on your specific needs.