In today’s data-driven world, businesses need efficient ways to gather and analyze information. Manual data collection is time-consuming and error-prone, which is why many organizations are turning to web scraper tools to automate data extraction. In this article, we explore what a scraper tool is used for, how to use a scraper tool effectively, and the benefits of integrating advanced web scraper tools into your data strategy.

What is a Scraper Tool Used For?

A scraper tool is a software application designed to extract data from websites automatically. It navigates web pages, extracts the required information, and stores it in a structured format, such as a spreadsheet or database. These tools are invaluable for businesses that need to collect large volumes of data quickly and accurately, without the need for manual intervention.

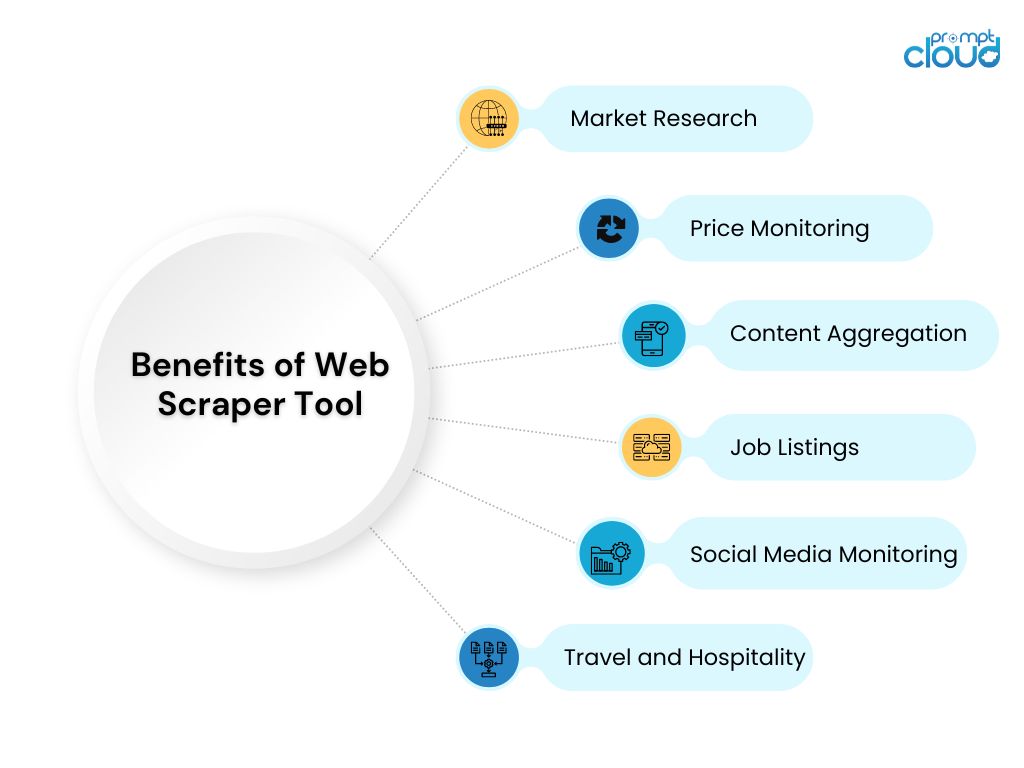

Scraper tools can be used for a variety of purposes, including:

- Market Research: Scraper tools allow businesses to gather competitive intelligence by collecting data on market trends, product pricing, customer reviews, and industry benchmarks. By analyzing this information, companies can gain insights into market dynamics and make informed strategic decisions.

- Price Monitoring: E-commerce companies use scraper tools to track price changes across competitors’ websites. This enables businesses to stay competitive by adjusting their pricing strategies in real time to attract more customers and increase sales.

- Content Aggregation: Media and content platforms often use scraper tools to gather articles, news, and multimedia from multiple sources. This allows them to create comprehensive content databases that can be used for research, analysis, and curation.

- Job Listings: Recruitment agencies and job boards can leverage scraper tools to extract job postings from company websites and employment platforms. This data can be used to populate job boards and match candidates with suitable job opportunities.

- Social Media Monitoring: Scraper tools can be used to track social media mentions, comments, and trends, providing businesses with insights into public perception and brand sentiment. This data can be valuable for reputation management and targeted marketing campaigns.

- Academic Research: Researchers and academics can use scraper tools to collect data for studies and projects. This may include extracting data from online surveys, public records, and academic publications to support their research findings.

- Travel and Hospitality: Scraper tools help travel agencies and hospitality businesses collect data on flight prices, hotel availability, and customer reviews. This information can be used to optimize travel packages, improve customer service, and enhance the overall travel experience.

Choosing the Right Scraper Tool

Selecting the appropriate scraper tool for your needs involves considering various factors to ensure you can efficiently and effectively extract the data you need. Here are some key considerations to keep in mind when choosing a scraper tool:

Ease of Use

- User-Friendly Interface: Look for a tool with an intuitive interface that allows users of all skill levels to set up and run data extraction tasks without extensive technical knowledge.

- Documentation and Support: Choose a tool that provides comprehensive documentation and reliable customer support to assist you in troubleshooting any issues that arise.

Scalability

- Handling Large Data Volumes: Ensure that the tool can handle your current data needs and scale with your business as those needs grow. It should efficiently manage large datasets and extract data at scale.

- Performance Optimization: Opt for a tool that optimizes scraping performance, minimizing server load and extraction time while maintaining data quality.

Compatibility

- Target Websites: Verify that the tool is compatible with the websites you need to scrape, including those with complex structures or dynamic content such as JavaScript-heavy pages.

- Data Formats: Ensure the tool can export data in formats that are compatible with your existing data processing systems, such as CSV, JSON, or XML.

Customization and Flexibility

- Tailored Solutions: Advanced tools like those offered by PromptCloud provide customizable solutions that can be tailored to your specific data requirements, allowing you to adjust extraction parameters to suit your unique needs.

- Automation and Scheduling: Look for features that enable automated data extraction at scheduled intervals, ensuring you have access to up-to-date information without manual intervention.

Legal and Ethical Compliance

- Respecting Terms of Service: Choose a tool that adheres to legal standards and respects the terms of service of target websites, ensuring your data collection practices are compliant with applicable laws and regulations.

- Data Privacy: Consider tools that prioritize data privacy and incorporate features to anonymize or protect sensitive information during the extraction process.
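As a rough illustration of the data-privacy point above, the sketch below replaces email addresses in scraped records with keyed hashes before they are stored. The function names, the `email` field, and the secret key are assumptions made for this example. Note that this is pseudonymization rather than full anonymization, since anyone holding the key can re-link identical addresses.

```python
import hashlib
import hmac


def pseudonymize_email(email: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest of a normalized email address."""
    normalized = email.strip().lower()
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, secret_key: bytes) -> dict:
    """Copy a record, replacing its 'email' field (if present) with a digest."""
    cleaned = dict(record)
    if cleaned.get("email"):
        cleaned["email"] = pseudonymize_email(cleaned["email"], secret_key)
    return cleaned
```

Because the address is normalized before hashing, the same person maps to the same digest across records, which keeps deduplication possible without storing the raw address.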

Integration Capabilities

- Seamless Integration: Select a tool that can easily integrate with your existing systems, such as databases, analytics platforms, or CRM software, to streamline data processing and analysis workflows.

- API Access: If your business relies on APIs for data integration, ensure the scraper tool provides API access for seamless data transfer and integration with other tools.

Cost-Effectiveness

- Budget Considerations: Evaluate the cost of the tool relative to its features and the value it brings to your business. Consider both initial investment and long-term operational costs.

- Free Trials and Demos: Take advantage of free trials or demos offered by many scraper tool providers to test the tool’s capabilities and ensure it meets your needs before committing to a purchase.

How to Use a Web Scraper Tool

Implementing a data scraper tool into your workflow can significantly streamline your data collection process. Here are some steps to effectively use a scraper tool:

Define Your Data Needs

Before using a scraper tool, it’s crucial to clearly define what data you need to collect. Determine the specific websites or platforms from which you want to extract data, and identify the data points that are most relevant to your goals.

Example: Suppose you want to track the prices of a specific model of laptop on several e-commerce websites. You’ll need to decide which e-commerce sites to target (e.g., Amazon, Best Buy) and what data points to collect (e.g., product name, price, availability).
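The data points in this example could be written down as a small schema before any scraping starts. The sketch below is one possible shape. The class name `PriceObservation`, its fields, and the `example.com` URL are illustrative assumptions, not part of any particular tool.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class PriceObservation:
    """One scraped data point for the laptop-tracking example (hypothetical schema)."""
    site: str                # e.g. "Amazon" or "Best Buy"
    product_name: str
    price: Optional[float]   # None when no price could be read
    in_stock: bool
    url: str


# Placeholder values only; nothing here was actually scraped.
observation = PriceObservation(
    site="Example Store",
    product_name="Laptop Model X",
    price=None,
    in_stock=False,
    url="https://example.com/laptop-model-x",
)
print(asdict(observation))
```

Fixing the fields up front makes it easier to notice later when a scraper silently stops filling one of them.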

Choose the Right Scraper Tool

Selecting the right scraper tool is essential for successful data extraction. There are various tools available, each with different features and capabilities. Consider factors such as ease of use, scalability, and compatibility with your target websites when choosing a tool. Advanced scraper tools, like those offered by PromptCloud, provide customizable solutions that can be tailored to your specific data needs.

Example: For tracking laptop prices, you might choose a library like BeautifulSoup for a simple project, or a more advanced framework like Scrapy if you need to crawl many pages and automate regular updates.

Set Up and Configure the Tool

Once you have chosen a scraper tool, set it up by configuring the necessary parameters. This includes specifying the target URLs, defining data fields to extract, and setting extraction schedules. Many tools offer intuitive interfaces that make configuration straightforward, even for users with limited technical expertise.

Example: Using BeautifulSoup, you would write a script to access the product page on Amazon, parse the HTML to locate the price element, and extract the data. You could use Python to automate this task and schedule it to run daily.

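```python
import requests
from bs4 import BeautifulSoup

# Define the URL of the product page
url = "https://www.amazon.com/dp/B09XYZ123"

# Send a request to the page; fail fast on timeouts and HTTP errors
response = requests.get(url, timeout=10)
response.raise_for_status()

# Parse the HTML content
soup = BeautifulSoup(response.content, "html.parser")

# Locate the price element (this id depends on the page's current markup)
price_element = soup.find("span", {"id": "priceblock_ourprice"})

# Output the extracted price, or report that the element was not found
if price_element is None:
    print("Price element not found; the page layout may have changed.")
else:
    print("Laptop Price:", price_element.get_text(strip=True))
```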

Monitor and Maintain Data Quality

After deploying your web scraper tool, it’s essential to regularly monitor the data extraction process to ensure data quality and accuracy. Check for any changes in the target website’s structure that might affect the scraping process, and make adjustments as needed.

Example: If the e-commerce site updates its page layout, you may need to adjust your script to accommodate changes in the HTML structure to ensure you’re still extracting the correct data.
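One way to catch such layout changes early is to validate every extracted value instead of storing it blindly. The sketch below is a minimal example under stated assumptions: the helper name `parse_price` is hypothetical, and the pattern assumes US-style formatting such as `$1,299.99`.

```python
import re
from typing import Optional

# Matches "1,299.99", "1299.99", or "849" (US-style thousands separators).
_PRICE_PATTERN = re.compile(r"(\d{1,3}(?:,\d{3})+|\d+)(\.\d+)?")


def parse_price(raw: Optional[str]) -> float:
    """Convert scraped price text to a float, raising if it looks wrong."""
    if raw is None:
        raise ValueError("price element not found; the page layout may have changed")
    match = _PRICE_PATTERN.search(raw)
    if match is None:
        raise ValueError(f"no numeric price in {raw!r}")
    whole = match.group(1).replace(",", "")
    fraction = match.group(2) or ""
    return float(whole + fraction)
```

A scheduled job that starts raising `ValueError` on every run is a strong hint that the selector needs updating.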

Analyze and Utilize the Data

Finally, analyze the collected data to derive actionable insights that can inform your business decisions. Use data visualization tools to present the information in an easily digestible format, and integrate the data into your existing systems for further analysis.

Example: You could use a tool like Microsoft Excel or Tableau to visualize price trends over time, helping you decide when to purchase the laptop at the best price.
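Before reaching for a visualization tool, a few lines of code can already summarize a price history. The sketch below assumes the observations are available as `(date, price)` pairs; the function name `summarize_prices` and the sample figures in the usage note are made up for illustration.

```python
from datetime import date
from statistics import mean


def summarize_prices(observations):
    """Summarize a list of (date, price) tuples, in any order."""
    if not observations:
        raise ValueError("no observations to summarize")
    ordered = sorted(observations)  # chronological order
    prices = [price for _, price in ordered]
    lowest_day, lowest_price = min(ordered, key=lambda item: item[1])
    return {
        "first": prices[0],
        "latest": prices[-1],
        "average": round(mean(prices), 2),
        "lowest_price": lowest_price,
        "lowest_day": lowest_day,
    }
```

For instance, passing three invented observations of 1000.0, 900.0, and 950.0 on consecutive days would report the second day as the cheapest.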

Conclusion

Automating your data collection with advanced scraper tools can revolutionize the way your business gathers and utilizes information. By understanding what a scraper tool is used for and how to use it effectively, you can streamline your data processes, gain a competitive edge, and drive informed decision-making. With PromptCloud’s customizable web scraper tool solutions, you can unlock the full potential of automated data extraction and transform the way you operate.

For more information on how PromptCloud’s data scraper tool services can benefit your business, schedule a demo now.

Frequently Asked Questions

#1: What is a scraper tool used for?

A scraper tool is used to automatically extract data from websites. This process, known as web scraping, allows users to gather large amounts of information quickly and efficiently without manually copying and pasting content. Scraper tools can be programmed to navigate through web pages, identify specific data points (such as text, images, or links), and collect this information into a structured format like a spreadsheet or database. Businesses and researchers often use scraper tools to gather competitive intelligence, monitor prices, track news and trends, or aggregate content from multiple sources. However, it’s important to ensure that scraping is done in compliance with legal and ethical guidelines, respecting the terms of service of the websites involved.

#2: What is a scraper tool?

A scraper tool is a software application designed to extract data from websites automatically. Instead of manually copying information from a web page, a scraper tool can browse the web, locate specific data points, and collect them into a structured format such as a spreadsheet, CSV file, or database. These tools can be used for various purposes, including data mining, market research, price monitoring, content aggregation, and more. Scraper tools are powerful for gathering large amounts of data quickly and efficiently, but they must be used responsibly, adhering to legal and ethical standards to avoid violating website terms of service or data privacy regulations.

#3: What is a scraper used for?

A scraper is used to automatically extract data from websites. This process, known as web scraping, allows users to gather information from multiple web pages quickly and efficiently, without the need for manual data collection. Scrapers can be used for a variety of purposes, such as:

- Market Research: Collecting competitor pricing, product details, or customer reviews to analyze market trends.

- Price Monitoring: Tracking prices across e-commerce sites to ensure competitive pricing or identify opportunities for discounts.

- Content Aggregation: Gathering news articles, blog posts, or social media content from various sources to create curated feeds or databases.

- Lead Generation: Extracting contact information from directories or professional networking sites for business outreach.

- Academic Research: Collecting large datasets from public sources for analysis in research projects.

Scrapers are valuable tools for businesses, researchers, and developers who need to collect and analyze web data efficiently. However, they should be used ethically and in compliance with website terms of service and data privacy regulations.

#4: How to use a scraper tool?

Using a scraper tool involves several steps, from setting up the tool to extracting and processing the desired data. Here’s a general guide on how to use a scraper tool:

1. Choose the Right Scraper Tool

- Select a scraper tool that fits your needs. There are various options available, ranging from user-friendly browser extensions to more advanced programming libraries like BeautifulSoup (Python) or Scrapy.

- If you’re new to web scraping, tools like Octoparse, ParseHub, or browser-based scrapers like Web Scraper (a Chrome extension) might be easier to start with.

2. Install or Access the Tool

- Download and install the scraper tool if required. For browser-based tools, add the extension to your browser.

- If using a programming library, set up your development environment by installing the necessary packages.

3. Identify the Target Website

- Determine the website or web pages you want to scrape. Ensure that scraping the site is permitted by reviewing its terms of service or robots.txt file.

- Identify the specific data points you need, such as text, images, links, or tables.
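The robots.txt check mentioned in this step can be done with Python's standard library. The sketch below parses robots.txt rules from a string so that it runs without network access. The helper name `is_allowed`, the user agent `my-bot`, and the sample rules are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for illustration; a real site publishes its own robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules permit fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "my-bot", "https://example.com/products/1"))
print(is_allowed(SAMPLE_ROBOTS_TXT, "my-bot", "https://example.com/private/data"))
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` fetches the file instead of parsing a string. A robots.txt check does not replace reading the site's terms of service.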

4. Configure the Scraper

- For non-coding tools: Use the graphical interface to navigate the target website, select the data elements you want to scrape, and configure any necessary settings (e.g., pagination, scrolling).

- For coding libraries: Write a script that accesses the target website, identifies the data elements using HTML tags or CSS selectors, and extracts the data. Tools like BeautifulSoup or Scrapy offer flexible options for this step.

5. Run the Scraper

- Execute the scraper tool to start the data extraction process. Depending on the tool and the complexity of the task, this may take a few seconds to several minutes.

- Monitor the scraping process to ensure it’s running correctly and that the data is being collected as expected.

6. Export and Process the Data

- Once the scraping is complete, export the data to a file format of your choice (e.g., CSV, Excel, JSON).

- Review and clean the data if necessary, especially if you’re using the data for analysis or integration into other systems.
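For users of a coding library, the export and cleanup in this step might look like the sketch below, which uses only the standard library. The function names and the sample row shape are assumptions, and the deduplication assumes every field value is hashable (strings and numbers, for example).

```python
import csv
import io
import json


def clean_rows(rows):
    """Strip whitespace from string fields and drop exact duplicate rows."""
    seen = set()
    cleaned = []
    for row in rows:
        normalized = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in row.items()
        }
        fingerprint = tuple(sorted(normalized.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            cleaned.append(normalized)
    return cleaned


def to_csv(rows, fieldnames):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize rows to pretty-printed JSON text."""
    return json.dumps(rows, indent=2)
```

Writing to a string keeps the sketch easy to test; in practice the same `csv.DictWriter` can write to a file opened with `newline=""`.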

7. Respect Legal and Ethical Considerations

- Always scrape data responsibly, adhering to the website’s terms of service and any applicable data privacy laws. Avoid scraping content that is copyrighted or protected by intellectual property rights without permission.

8. Automate (Optional)

- If you need to scrape data regularly, consider automating the process by scheduling the scraper to run at specific intervals. Some tools and libraries offer built-in scheduling features, while others might require additional setup.
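When a tool has no built-in scheduler, a simple loop is one way to get started. The sketch below is a minimal example; `run_periodically` and its parameters are hypothetical names, and `job` stands for whatever scraping function you have written.

```python
import time


def run_periodically(job, interval_seconds, max_runs, sleep=time.sleep):
    """Call job() max_runs times, pausing interval_seconds between runs."""
    results = []
    for run_index in range(max_runs):
        try:
            results.append(job())
        except Exception as error:  # keep the schedule alive if one run fails
            results.append(error)
        if run_index < max_runs - 1:
            sleep(interval_seconds)
    return results
```

For long-running schedules, an operating-system scheduler such as cron is usually a sturdier choice than a script that must stay running.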

By following these steps, you can effectively use a scraper tool to collect the data you need for your projects.

#5: What is a web scraping tool?

A web scraping tool is a software application designed to automatically extract data from websites. Instead of manually copying and pasting information from web pages, a web scraping tool allows users to collect data in a structured format, such as a spreadsheet, CSV file, or database, quickly and efficiently.

These tools work by navigating through web pages, identifying specific elements (like text, images, links, or tables), and retrieving the data from these elements based on predefined rules or user specifications. Web scraping tools can vary in complexity, from simple browser extensions that require no coding to advanced programming libraries that allow for highly customized scraping processes.

Web scraping tools are used for various purposes, including:

- Market Research: Gathering data on competitors, pricing, and consumer reviews.

- Price Monitoring: Tracking prices on e-commerce sites to ensure competitive pricing.

- Content Aggregation: Collecting articles, news, or social media posts from multiple sources.

- Data Mining: Extracting large datasets for analysis in business, academic, or scientific research.

- Lead Generation: Harvesting contact information from directories or professional networking sites.

While web scraping tools are powerful and versatile, it’s important to use them responsibly, ensuring that you comply with the legal and ethical guidelines, including respecting website terms of service and data privacy regulations.

#6: Is it legal to web scrape?

The legality of web scraping is a complex issue that depends on several factors, including the purpose of the scraping, the type of data being collected, and the specific website’s terms of service. Here are some key points to consider:

1. Terms of Service

- Many websites have terms of service that explicitly prohibit web scraping. Violating these terms can get you blocked from the site or lead to legal action, such as a lawsuit for breach of contract.

- Always check a website’s terms of service before scraping. If the terms prohibit scraping, it’s best to avoid scraping that site.

2. Public vs. Private Data

- Scraping publicly accessible data (data that is available to anyone without logging in or bypassing security measures) is generally more acceptable than scraping private or restricted data.

- Scraping private data or data that requires bypassing security measures (e.g., logging in or CAPTCHA) is unlawful in many jurisdictions and can lead to serious legal consequences.

3. Copyright and Intellectual Property

- Content on websites is often protected by copyright or intellectual property laws. Scraping such content, especially for commercial purposes, could infringe on these rights.

- If you plan to use scraped data for commercial purposes, it’s important to ensure that you’re not violating copyright or other intellectual property laws.

4. Data Privacy Regulations

- In many regions, data privacy laws such as the General Data Protection Regulation (GDPR) in Europe or the California Consumer Privacy Act (CCPA) in the U.S. impose strict rules on collecting and using personal data.

- If your web scraping activities involve collecting personal data (e.g., names, email addresses, IP addresses), you must comply with these regulations, which may include obtaining consent from individuals.

5. Case Law and Legal Precedents

- There have been several legal cases involving web scraping, with varying outcomes. In some cases, courts have ruled in favor of the scraper, especially when the data was public and no significant harm was caused to the website owner. In other cases, courts have ruled against scrapers, particularly when scraping involved bypassing access controls or violated the website’s terms of service.

- The legal landscape is continually evolving, so it’s crucial to stay informed about recent rulings and legal precedents.

6. Ethical Considerations

- Even if web scraping is technically legal, it’s important to consider the ethical implications. For example, excessive scraping can overload a website’s servers, leading to downtime or degraded performance, which could harm the website owner and its users.

#7: Which tool is best for web scraping?

The best tool for web scraping depends on your specific needs, technical expertise, and the complexity of the scraping task. Here are some popular web scraping tools, each suited to different levels of experience and requirements:

1. BeautifulSoup (Python)

- Best For: Beginners and intermediate users comfortable with Python programming.

- Description: BeautifulSoup is a Python library for parsing HTML and XML documents. It is widely used for simple web scraping tasks and is great for beginners because of its straightforward syntax and ease of use.

- Pros: Easy to learn, excellent for small to medium-sized scraping tasks, well-documented.

- Cons: Requires knowledge of Python, not suited for very large or complex scraping projects.

2. Scrapy (Python)

- Best For: Advanced users looking for a powerful and scalable scraping framework.

- Description: Scrapy is a fast, open-source web crawling framework for Python. It’s designed for large-scale scraping projects and is highly customizable.

- Pros: Built-in support for handling large-scale scraping, asynchronous processing, well-suited for complex and high-volume tasks.

- Cons: Steeper learning curve, requires programming knowledge, can be overkill for simple tasks.

3. Octoparse

- Best For: Non-programmers who need a user-friendly, visual tool for scraping.

- Description: Octoparse is a visual web scraping tool that allows users to extract data without coding. It provides a point-and-click interface to configure scraping tasks.

- Pros: No coding required, easy to use, supports cloud-based scraping for large-scale projects.

- Cons: Limited flexibility compared to coding-based tools, pricing can be high for premium features.

4. ParseHub

- Best For: Users who need a visual tool with robust customization options.

- Description: ParseHub is another visual scraping tool that lets you scrape data from websites without coding. It offers advanced features like handling dynamic content and interacting with websites.

- Pros: User-friendly interface, good for complex sites with AJAX or JavaScript, allows conditional scraping logic.

- Cons: Can be expensive for heavy users, some advanced features have a learning curve.

5. Web Scraper (Chrome Extension)

- Best For: Casual users who need a quick and easy way to scrape data directly from their browser.

- Description: Web Scraper is a free Chrome extension that allows users to scrape data directly from their browser. It’s ideal for quick tasks and small projects.

- Pros: No installation required beyond the browser extension, easy to use, free.

- Cons: Limited to what can be done in a browser environment, not suitable for very large-scale tasks.

6. Diffbot

- Best For: Businesses needing a powerful API-based tool for large-scale web scraping and data extraction.

- Description: Diffbot is a commercial web scraping service that uses AI to extract data from web pages. It’s designed for businesses that need reliable, large-scale data extraction.

- Pros: Highly reliable, handles complex pages, provides structured data in various formats, API-based.

- Cons: Expensive, requires technical knowledge to implement effectively.

7. Apify

- Best For: Developers looking for a cloud-based scraping platform with automation capabilities.

- Description: Apify is a web scraping and automation platform that allows users to run and manage web scrapers in the cloud. It supports both coding-based and visual interfaces.

- Pros: Supports large-scale scraping, offers cloud-based execution, integrates well with other automation tools.

#8: Do hackers use web scraping?

Yes, hackers can use web scraping, but it’s important to understand that web scraping itself is a neutral tool. Like many technologies, it can be used for both legitimate and malicious purposes depending on the intent of the user.

Legitimate Uses of Web Scraping

Web scraping is widely used for legitimate purposes across various industries, including:

- Market Research: Companies gather data on competitors, prices, and consumer behavior.

- Content Aggregation: News sites and blogs compile information from multiple sources.

- Academic Research: Researchers collect large datasets from public sources for analysis.

- Price Monitoring: E-commerce platforms track competitor pricing to stay competitive.

Malicious Uses of Web Scraping

While web scraping can be legal and ethical when done within the bounds of the law and a website’s terms of service, hackers might use scraping techniques for harmful activities, such as:

- Data Harvesting: Hackers might scrape personal information (like email addresses, phone numbers, or social media profiles) from websites to build databases for spamming, phishing, or other malicious purposes.

- Competitive Espionage: Scraping can be used unethically to steal trade secrets, pricing strategies, or customer data from competitors.

- Content Theft: Hackers might scrape copyrighted content from websites to republish it without permission, leading to intellectual property infringement.

- Automated Account Creation: Scraping can be used to automate the creation of fake accounts on websites, which can then be used for fraudulent activities or to manipulate systems (e.g., voting bots, spam bots).

Security Concerns

Organizations need to be aware of the potential for malicious scraping and take steps to protect their data and systems. Some common defenses include:

- Robots.txt: Setting up a robots.txt file to guide ethical scrapers on what content should not be scraped.

- CAPTCHAs: Implementing CAPTCHAs to prevent automated bots from accessing certain areas of a website.

- Rate Limiting: Limiting the number of requests a user can make to a website in a given timeframe to deter automated scraping.

- IP Blocking: Identifying and blocking suspicious IP addresses that may be associated with malicious scraping.
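To make the rate-limiting defense more concrete, the sketch below shows one common approach, a sliding-window counter per client. The class name, the use of an IP address as the client identifier, and the limits in the usage note are assumptions. Production sites typically enforce this in a web server, proxy, or CDN rather than in application code.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits = defaultdict(deque)  # client id -> timestamps of allowed requests

    def allow(self, client_id):
        """Record and permit the request, or return False if over the limit."""
        now = self._clock()
        hits = self._hits[client_id]
        # Discard timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

A limiter created as `SlidingWindowLimiter(100, 60)` would, for example, reject a client's 101st request within one minute while leaving other clients unaffected.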

#9: What is a data scraper tool?

A data scraper tool is a software application designed to automatically extract or “scrape” data from websites and other online sources. The tool navigates through web pages, identifies specific data points (such as text, images, links, or tables), and collects this information into a structured format, such as a spreadsheet, CSV file, or database.

Key Features of Data Scraper Tools

- Automation: Data scraper tools can automate the process of collecting data from multiple web pages, saving time and effort compared to manual data collection.

- Customization: Many tools allow users to specify exactly which data points to extract, such as specific fields in a table, headings, or links.

- Data Export: The scraped data can typically be exported in various formats, making it easier to analyze or integrate with other systems.

- Handling Dynamic Content: Some advanced data scraper tools can interact with dynamic web pages that use JavaScript or AJAX to load content, allowing them to scrape data from more complex websites.

Common Uses of Data Scraper Tools

- Market Research: Businesses use data scrapers to collect information on competitors, pricing trends, and customer reviews.

- Price Monitoring: E-commerce companies track competitor prices and stock availability using scraper tools.

- Content Aggregation: News and media outlets aggregate articles, blogs, or social media posts from multiple sources.

- Lead Generation: Companies extract contact information from directories or social media for marketing and outreach.

- Academic Research: Researchers collect large datasets from public online sources for analysis in various fields.

Popular Data Scraper Tools

- BeautifulSoup (Python): A popular Python library for parsing HTML and XML, used for simple to moderately complex scraping tasks.

- Scrapy (Python): A powerful and scalable Python framework for web scraping, suitable for large-scale projects.

- Octoparse: A visual, no-code web scraping tool that allows users to scrape data without needing programming skills.

- ParseHub: Another no-code scraping tool that handles dynamic content and offers robust customization options.

- Web Scraper (Chrome Extension): A browser-based tool that makes it easy to scrape data directly from web pages you’re viewing in your browser.

Legal and Ethical Considerations

While data scraper tools are powerful and versatile, they must be used responsibly. It’s important to respect the terms of service of the websites you scrape and comply with data privacy laws and copyright regulations. Misusing data scraper tools, such as scraping personal data without consent or violating a website’s terms of service, can lead to legal issues.

#10: Are data scrapers illegal?

Data scrapers are not inherently illegal, but their legality depends on how they are used, the type of data being scraped, and whether the activity violates the terms of service of the website being scraped or applicable laws. Here’s a breakdown of the key factors that determine the legality of data scraping:

1. Website Terms of Service

- Many websites have terms of service that explicitly prohibit web scraping or automated data collection. If you scrape data from a website that prohibits it in its terms of service, you could be violating a contractual agreement, which might lead to legal action from the website owner.

- It’s crucial to review and adhere to the terms of service of any website you plan to scrape.

2. Public vs. Private Data

- Public Data: Scraping publicly accessible data (data that can be viewed without logging in or bypassing security measures) is generally more acceptable and may be considered legal in many cases. However, even public data scraping can be contentious if it involves large-scale extraction or impacts the website’s performance.

- Private or Protected Data: Scraping data behind a login, paywall, or other access controls without permission is usually illegal. This can be considered unauthorized access and a violation of laws such as the Computer Fraud and Abuse Act (CFAA) in the United States.

3. Copyright and Intellectual Property

- The content on many websites is protected by copyright or intellectual property laws. Scraping such content, especially for commercial use, could infringe on the rights of the content owners.

- If you plan to use scraped data in a way that involves redistribution or commercial use, it’s important to ensure that you are not violating copyright or intellectual property laws.

4. Data Privacy Regulations

- Regulations like the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the U.S. impose strict rules on collecting, storing, and using personal data. If your scraping activities involve personal data (e.g., names, email addresses), you must comply with these regulations, which often require obtaining explicit consent from the individuals whose data is being collected.

- Violating data privacy laws can result in significant fines and legal consequences.

5. Case Law and Legal Precedents

- Legal cases involving web scraping have resulted in varying outcomes, with courts sometimes ruling in favor of scrapers, particularly when the data is public and no significant harm is caused to the website owner. In other cases, courts have sided with website owners, especially when scraping involved bypassing access controls or caused harm.

- The legal landscape is evolving, and it’s important to stay informed about recent rulings and legal precedents related to web scraping.

6. Ethical Considerations

- Even if scraping is technically legal, it’s important to consider the ethical implications. For example, scraping large amounts of data could overwhelm a website’s servers, leading to performance issues or downtime, which could harm the website owner and its users.

- Ethical scraping practices include respecting a website’s robots.txt file, limiting the frequency of requests, and avoiding scraping sensitive or proprietary information.
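Limiting request frequency can be as simple as enforcing a minimum gap between consecutive requests. The sketch below shows one way to do that on the scraper side. The class name `PoliteThrottle` and the two-second interval in the usage note are assumptions, not recommended values for any specific site.

```python
import time


class PoliteThrottle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_interval_seconds = min_interval_seconds
        self._clock = clock
        self._sleep = sleep
        self._last_request = None  # time at which the previous request was released

    def wait(self):
        """Sleep just long enough to respect the interval; return the delay used."""
        now = self._clock()
        delay = 0.0
        if self._last_request is not None:
            elapsed = now - self._last_request
            delay = max(0.0, self.min_interval_seconds - elapsed)
        if delay > 0:
            self._sleep(delay)
        self._last_request = now + delay
        return delay
```

Calling `throttle.wait()` on a `PoliteThrottle(2.0)` instance before each request keeps a scraper to at most one request every two seconds.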

#11: What is the best data scraper?

The “best” data scraper depends on your specific needs, technical skills, and the complexity of the data scraping task you want to accomplish. Below are some of the most popular and effective data scraper tools, each suited to different types of users and tasks:

1. BeautifulSoup (Python)

- Best For: Beginners and intermediate users comfortable with Python programming.

- Description: BeautifulSoup is a Python library that makes it easy to scrape information from web pages. It works well with HTML and XML documents, allowing you to parse and extract data with minimal coding.

- Pros: Simple to use, excellent for smaller projects, well-documented.

- Cons: Limited scalability for large-scale scraping tasks.

2. Scrapy (Python)

- Best For: Advanced users and developers needing a powerful and scalable scraping solution.

- Description: Scrapy is a fast, open-source web crawling and scraping framework for Python. It’s designed for large-scale and complex scraping tasks, with built-in support for handling many common web scraping challenges.

- Pros: Highly scalable, supports asynchronous scraping, extensive community and documentation.

- Cons: Steeper learning curve, more complex setup compared to simpler tools.

3. Octoparse

- Best For: Non-programmers and users looking for a visual, no-code scraping tool.

- Description: Octoparse is a cloud-based, visual web scraping tool that allows you to set up and run scraping tasks without any coding knowledge. It’s user-friendly and suitable for both simple and complex scraping projects.

- Pros: No coding required, easy to use, supports cloud scraping, handles dynamic websites.

- Cons: Can be expensive for premium features, limited flexibility compared to coding-based tools.

4. ParseHub

- Best For: Users needing a visual scraper with robust customization options.

- Description: ParseHub is another visual web scraping tool, capable of handling dynamic websites, including those that rely on JavaScript and AJAX. It offers a simple interface for configuring scraping tasks without coding.

- Pros: Powerful visual interface, handles complex sites, offers conditional logic.

- Cons: Steeper learning curve for advanced features, pricing can be high for extensive use.

5. Web Scraper (Chrome Extension)

- Best For: Casual users and quick scraping tasks directly from the browser.

- Description: Web Scraper is a free Chrome extension that allows you to scrape data directly from your browser. It’s ideal for small-scale scraping tasks and for users who need a quick and easy solution.

- Pros: Easy to install and use, free, integrates directly with the Chrome browser.

- Cons: Limited to what can be done within the browser, not suitable for very large-scale or complex tasks.

6. Diffbot

- Best For: Businesses needing a robust, AI-powered data extraction tool.

- Description: Diffbot is a commercial service that uses AI to extract structured data from web pages. It’s particularly useful for large-scale, enterprise-level projects where accuracy and data quality are critical.

- Pros: AI-powered, highly reliable, supports complex data extraction needs, API-based.

- Cons: Expensive, requires technical knowledge to implement effectively.

7. Apify

- Best For: Developers and businesses looking for a cloud-based scraping platform with automation capabilities.

- Description: Apify is a platform that allows users to create, manage, and run web scraping and automation tasks in the cloud. It offers both coding-based and visual interfaces, making it versatile for different user levels.

- Pros: Cloud-based, scalable, supports large-scale projects, offers both API and visual tools.

- Cons: Can be costly for large-scale scraping, requires some technical expertise for advanced use.

#12: What does a scraper tool do?

A scraper tool is a software application designed to automatically extract data from websites. Instead of manually copying and pasting information from web pages, a scraper tool automates this process, allowing users to gather large amounts of data quickly and efficiently. Here’s what a scraper tool does:

1. Navigates Websites

- A scraper tool can be programmed to navigate through websites, either by following links or by directly accessing specific URLs. It can mimic a human user by loading web pages, scrolling, clicking buttons, and interacting with forms if necessary.
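
To make the idea of following links concrete, here is a minimal sketch of a breadth-first crawl using only Python’s standard library. The `FAKE_SITE` dictionary stands in for real HTTP responses, and the names `LinkCollector`, `extract_links`, and `crawl` are hypothetical helpers written for this example, not part of any particular scraper tool.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page they appeared on.
            self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


def crawl(start_url, fetch, max_pages=10):
    """Visit pages breadth-first, following links and skipping repeats."""
    seen, queue, visited = {start_url}, deque([start_url]), []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in extract_links(fetch(url), url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical in-memory "site" used instead of real network requests.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}

print(crawl("https://example.com/", lambda url: FAKE_SITE.get(url, "")))
```

The `max_pages` cap and the `seen` set keep the crawl from looping forever on sites whose pages link back to each other.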

2. Identifies Specific Data

- Scraper tools are configured to identify and extract specific types of data from web pages. This could include text, images, URLs, tables, or any other visible content. Advanced scrapers can also extract data from hidden elements or from content that is generated dynamically by JavaScript.

3. Extracts Data

- Once the target data is identified, the scraper tool extracts it from the web page. This data can be anything from product prices and descriptions to entire articles or user reviews. The tool pulls the data into a structured format that can be easily analyzed or stored.
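
The identification and extraction steps can be sketched with Python’s built-in `html.parser` module. The sample markup and its class names (`product`, `name`, `price`) are invented for this example; a real page will have its own structure, and dedicated libraries usually offer more convenient selectors.

```python
from html.parser import HTMLParser

# Invented sample markup; real pages will use different tags and classes.
SAMPLE_HTML = """
<div class="product"><span class="name">Widget</span><span class="price">$9.99</span></div>
<div class="product"><span class="name">Gadget</span><span class="price">$24.50</span></div>
"""


class ProductParser(HTMLParser):
    """Turn each <div class="product"> into a dict of its labelled fields."""

    FIELDS = {"name", "price"}

    def __init__(self):
        super().__init__()
        self.records = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "div" and css_class == "product":
            self.records.append({})  # start a new record
        elif tag == "span" and css_class in self.FIELDS and self.records:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            record = self.records[-1]
            record[self._field] = record.get(self._field, "") + data

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.records


print(extract_products(SAMPLE_HTML))
```

The result is a list of dictionaries, one per product, which is the kind of structured format that later steps can clean and export.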

4. Handles Large-Scale Data Collection

- Scraper tools are particularly useful for large-scale data collection, where manual extraction would be too time-consuming. They can scrape data from hundreds or thousands of pages in a relatively short time.
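
One common way to reach that scale is to fetch several pages concurrently. The sketch below uses a thread pool from Python’s standard library; `fetch_page` is a placeholder that returns fake HTML rather than making a network request, and the worker count is an arbitrary assumption that should be tuned to what the target site can reasonably handle.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_page(url):
    """Placeholder for a real HTTP download; returns fake HTML."""
    return f"<html>content of {url}</html>"


def scrape_many(urls, fetch=fetch_page, max_workers=8):
    """Fetch many pages concurrently and map each URL to its content."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input URLs.
        return dict(zip(urls, pool.map(fetch, urls)))


urls = [f"https://example.com/page/{n}" for n in range(1, 101)]
pages = scrape_many(urls)
print(len(pages))
```

Concurrency speeds up collection but also multiplies the load on the target server, so it should be combined with rate limiting.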

5. Processes and Cleans Data

- Some scraper tools also include features to process and clean the extracted data. This might involve filtering out irrelevant information, formatting the data into a consistent structure, or converting it into a different format (e.g., CSV, JSON, or XML).
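
A cleaning step might look something like the following sketch, which trims whitespace, converts price strings to numbers, and drops duplicates and unusable rows. The sample records and the helper names `parse_price` and `clean` are assumptions for illustration; real cleaning rules depend on the data being scraped.

```python
import re

# Invented raw records, as they might come out of an extraction step.
RAW_RECORDS = [
    {"name": "  Widget ", "price": "$1,299.00"},
    {"name": "Gadget", "price": "24.50 USD"},
    {"name": "Gadget", "price": "24.50 USD"},  # duplicate row
    {"name": "Broken", "price": "call us"},    # no usable price
]


def parse_price(text):
    """Pull the first number out of a price string, or None if absent."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if not match:
        return None
    return float(match.group().replace(",", ""))


def clean(records):
    """Normalize names and prices, skipping bad rows and duplicates."""
    cleaned, seen = [], set()
    for record in records:
        name = record["name"].strip()
        price = parse_price(record["price"])
        if price is None or (name, price) in seen:
            continue
        seen.add((name, price))
        cleaned.append({"name": name, "price": price})
    return cleaned


print(clean(RAW_RECORDS))
```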

6. Exports Data

- After extraction and processing, the scraper tool typically exports the data to a destination that is easy to work with, such as a spreadsheet (CSV or Excel), a JSON file, or a database. This allows users to integrate the scraped data into their workflow, whether for analysis, reporting, or further processing.
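
As a small illustration of the export step, the sketch below serializes the same records to CSV and JSON with Python’s standard library. The sample records and the function names `to_csv` and `to_json` are assumptions for this example; it builds strings in memory, and writing them to disk or to a database would be a separate step.

```python
import csv
import io
import json

# Invented sample records standing in for cleaned scraper output.
RECORDS = [
    {"name": "Widget", "price": 9.99},
    {"name": "Gadget", "price": 24.5},
]


def to_csv(records, fieldnames):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to pretty-printed JSON text."""
    return json.dumps(records, indent=2)


print(to_csv(RECORDS, ["name", "price"]))
print(to_json(RECORDS))
```

CSV suits spreadsheet tools, while JSON preserves data types and nesting, so the choice usually depends on where the data goes next.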