The ability to capture and analyze data instantaneously enables businesses to react promptly to market changes, optimize operations, and enhance customer experiences. Unlike static data, real-time data provides up-to-the-minute insights that are crucial for various applications, ranging from financial trading to supply chain management. By leveraging web scraper APIs, technical experts can continuously gather and process data from diverse sources, ensuring they maintain a comprehensive and current understanding of their industry.

This ability to access and utilize real-time data supports decision-making processes and drives innovation and agility within an organization. Key benefits of real-time data access include enhanced decision-making, operational efficiency, better customer experience, faster market responsiveness, and innovation.

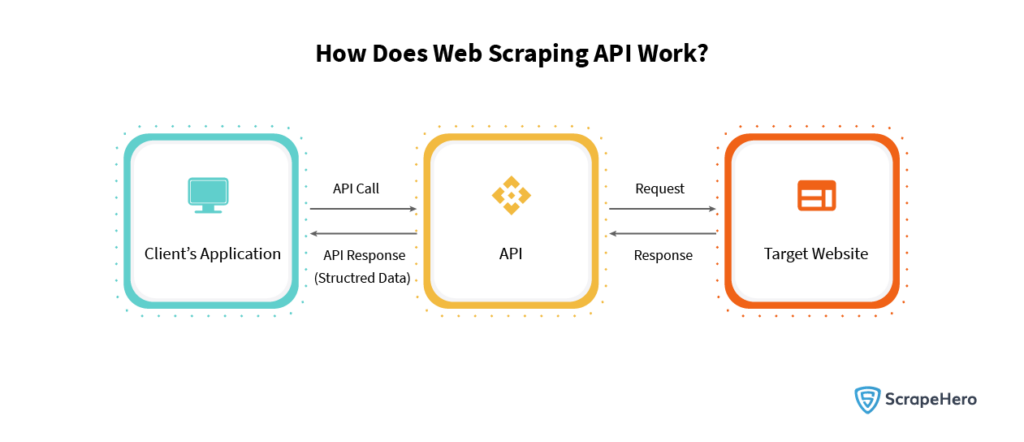

What is a Web Scraper API?

A Web Scraper API is a specialized tool designed to programmatically extract data from web pages by simulating a typical web browser’s interactions. It equips technical experts with an interface to send requests to a webpage, parse the HTML or other content formats, and retrieve structured data for their specific use cases.

By utilizing these APIs, users can work around the limitations of manual browsing and basic browser automation, handle complex web scraping tasks, and navigate obstacles such as CAPTCHA systems, IP blocking, and dynamic content loading. Web scraper APIs often come with built-in functionality to manage and rotate proxies, handle cookies, and support responsible scraping through rate limiting and similar safeguards that help users stay within website terms of service.

They are essential for gathering large-scale data efficiently and accurately, contributing significantly to fields like market research, competitive analysis, and data-driven decision-making. The robust error-handling and logging capabilities included in these APIs also assist in maintaining the integrity and reliability of the data extraction process, making them a crucial resource in the technological toolkit of data professionals.

Image Source: ScrapeHero

What are the Key Features of An Effective Scraper API?

An effective Scraper API is essential for extracting accurate and reliable data from web pages. Technical experts prioritize several key features when choosing a Scraper API to ensure efficiency, integrity, and security. Here are the pivotal characteristics that define an effective Scraper API:

Comprehensive Data Extraction

A robust web scraper API supports multiple data formats (JSON, XML, CSV) and can precisely target complex HTML elements. It also handles dynamic content from JavaScript-heavy websites, ensuring thorough data collection.
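As a rough illustration of multi-format output, the sketch below serializes the same scraped records to JSON and CSV using only the Python standard library. The record fields (`name`, `price`) and the helper names are assumptions made for this example and do not come from any particular scraper API.

```python
import csv
import io
import json


def to_json(records):
    """Serialize a list of dicts to a JSON string."""
    return json.dumps(records, indent=2, sort_keys=True)


def to_csv(records):
    """Serialize a list of dicts to CSV text.

    The header is the sorted union of all keys, so records with
    missing fields simply get an empty cell.
    """
    fieldnames = sorted({key for record in records for key in record})
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical records, as a scraper might return them.
records = [
    {"name": "Widget", "price": 9.99},
    {"name": "Gadget", "price": 24.5},
]

json_text = to_json(records)
csv_text = to_csv(records)
```

Keeping the records as plain dictionaries until the last step means the same extraction result can feed a JSON pipeline, a spreadsheet export, or both.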

Scalability and Performance

Efficient scraping tools manage high request volumes quickly, support parallel extractions without lag, and include rate-limiting to prevent IP bans and ensure consistent operation.
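One way to combine parallel extraction with rate limiting is sketched below. It is a minimal example rather than how any specific product works: `RateLimiter`, `fetch_all`, and the `fetch` callable are hypothetical names, and the limiter simply spaces calls at least `min_interval` seconds apart across all worker threads.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class RateLimiter:
    """Space calls at least `min_interval` seconds apart across threads."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._lock = threading.Lock()
        self._next_allowed = 0.0

    def wait(self):
        # Reserve the next free time slot under the lock, then sleep outside it.
        with self._lock:
            now = time.monotonic()
            delay = max(0.0, self._next_allowed - now)
            self._next_allowed = max(now, self._next_allowed) + self.min_interval
        if delay:
            time.sleep(delay)


def fetch_all(urls, fetch, limiter, max_workers=4):
    """Fetch URLs in parallel while respecting the shared rate limit.

    `fetch` is any callable that takes a URL and returns a result.
    Results come back in the same order as `urls`.
    """

    def task(url):
        limiter.wait()
        return fetch(url)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, urls))
```

For instance, `fetch_all(urls, fetch, RateLimiter(0.5))` would start at most about two requests per second no matter how many worker threads are available.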

Robust Error Handling

Effective error handling includes immediate detection, automatic retries, and fallback strategies to maintain continuous data extraction even when issues arise.
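The retry-and-fallback pattern described above might look something like the following sketch. The function name, its parameters, and the exponential backoff schedule are assumptions chosen for illustration; real scraper APIs may handle retries differently or do so on the server side.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=0.5,
                       fallback=None, sleep=time.sleep):
    """Call `fetch(url)`, retrying on any exception with exponential backoff.

    Waits base_delay, then 2 * base_delay, and so on between attempts.
    If every attempt fails, returns `fallback(url)` when a fallback is
    given (for example, a cached copy); otherwise re-raises the last error.
    """
    last_error = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as error:  # broad on purpose for this sketch
            last_error = error
            if attempt < max_attempts - 1:
                sleep(base_delay * (2 ** attempt))
    if fallback is not None:
        return fallback(url)
    raise last_error
```

The `sleep` parameter is injectable so the backoff can be tested without real delays. In production code it would usually be better to catch only the exceptions that are worth retrying, such as timeouts or rate-limit responses.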

Customization and Flexibility

Users can customize scraping rules, configure API parameters, and utilize built-in tools for data cleaning and transformation, allowing for tailored and flexible data extraction.

Data Quality and Consistency

Accurate data extraction is supported by real-time validation mechanisms and historical data tracking, ensuring consistency and reliability in the collected information.
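A validation step can be as simple as checking each scraped record before it enters the pipeline. The sketch below assumes hypothetical `name` and `price` fields; the rules themselves are illustrative and would be replaced by whatever a given dataset requires.

```python
def validate_record(record, required=("name", "price")):
    """Return a list of problems found in one scraped record.

    An empty list means the record passed every check.
    """
    problems = []
    for field in required:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")

    price = record.get("price")
    if price not in (None, ""):
        try:
            if float(price) < 0:
                problems.append("negative price")
        except (TypeError, ValueError):
            problems.append("price is not numeric")
    return problems


def split_valid(records):
    """Separate records into (valid, rejected-with-reasons)."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with their reasons, rather than silently dropping them, makes it easier to notice when a target site changes its layout and extraction starts to drift.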

Security Features

Key security features include data encryption, IP rotation for anonymity, and automated CAPTCHA solving to bypass anti-scraping measures and secure the scraping process.

Documentation and Support

Comprehensive guides, responsive customer support, and active community forums help users effectively utilize the tool and troubleshoot issues as they arise.

Compliance and Ethical Considerations

Web scraping tools should adhere to legal and ethical guidelines, prioritize data privacy, and practice responsible usage to avoid overloading target servers and ensure compliance.
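One concrete, if partial, way to practice responsible usage is to honor a site's robots.txt rules before requesting a page. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content, the `example-bot` user agent, and the example.com URLs are made up for illustration, and checking robots.txt does not by itself guarantee legal compliance.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one would be downloaded
# from https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Whether the (hypothetical) bot may fetch each URL.
allowed_public = parser.can_fetch("example-bot", "https://example.com/products")
allowed_private = parser.can_fetch("example-bot", "https://example.com/private/data")

# Seconds the site asks crawlers to wait between requests, or None if unset.
delay = parser.crawl_delay("example-bot")
```

The crawl delay, when present, can feed directly into whatever rate limiting the scraper already applies.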

Web Scraper APIs with these features are distinguished for their reliability and effectiveness, making them indispensable tools for technical experts aiming to derive valuable insights from web data.

How to Use a Scraper API?

To use a Scraper API effectively, technical experts generally follow a systematic approach that keeps performance and data accuracy in check. First, they select a reliable scraper API that fits the specific needs of their project, considering factors such as rate limits, data type compatibility, and geographical reach. Next, they review the API documentation carefully to understand the endpoints, required parameters, and authentication methods. They then set up authentication, typically through API keys or OAuth tokens, to ensure secure access.

Following authentication, experts construct HTTP requests tailored to the API’s specifications. They often use tools like Postman to test these requests and validate responses before integrating the API within their applications. During this phase, the focus is on error handling and retry logic to manage potential issues like rate limiting or network failures. Parsing the received data into a usable format—such as JSON or XML—is critical, and this commonly involves libraries specific to the programming language in use.
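To make the request-and-parse step concrete, here is a minimal sketch using only the Python standard library. The endpoint `https://api.scraper.example/v1/extract`, the `url` and `render_js` query parameters, the bearer-token header, and the `status`/`data` response fields are all hypothetical; a real scraper API defines its own, so its documentation is the authority.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint, used only for illustration.
API_ENDPOINT = "https://api.scraper.example/v1/extract"


def build_request(target_url, api_key, render_js=False):
    """Build (but do not send) a GET request for the hypothetical API."""
    query = urlencode({"url": target_url, "render_js": str(render_js).lower()})
    return Request(
        f"{API_ENDPOINT}?{query}",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Accept": "application/json",
        },
    )


def parse_response(body):
    """Parse a JSON response body and return its list of records.

    Assumes a payload shaped like {"status": "ok", "data": [...]}.
    """
    payload = json.loads(body)
    if payload.get("status") != "ok":
        raise ValueError(f"API reported an error: {payload.get('error', 'unknown')}")
    return payload.get("data", [])
```

Sending the request would then be a matter of something like `urllib.request.urlopen(request, timeout=10)` and passing the response body to `parse_response`, ideally wrapped in retry logic for rate-limit and network errors.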

Experts frequently implement scheduling mechanisms to automate data extraction processes, ensuring data is refreshed at desired intervals. They also set up logging and monitoring systems for ongoing performance assessment and troubleshooting. Finally, special attention is given to compliance with legal and ethical standards, particularly around data privacy laws like GDPR. By following these structured steps, experts can leverage a scraper API efficiently, ensuring they obtain reliable and accurate data for their analytical or developmental needs.
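Scheduling and logging can start out very simply. The sketch below runs a job at a fixed interval and logs each outcome with Python's standard `logging` module; `run_on_schedule` and its parameters are hypothetical names, and a production setup would more likely rely on cron, a task queue, or a workflow scheduler.

```python
import logging
import time

logger = logging.getLogger("scraper.schedule")


def run_on_schedule(job, interval_seconds, max_runs, sleep=time.sleep):
    """Run `job()` up to `max_runs` times, roughly every `interval_seconds`.

    Each outcome is logged and recorded. A failed run is logged with its
    traceback and does not stop later runs.
    """
    outcomes = []
    for run in range(1, max_runs + 1):
        started = time.monotonic()
        try:
            result = job()
            logger.info("run %d succeeded: %s", run, result)
            outcomes.append(("ok", result))
        except Exception:
            logger.exception("run %d failed", run)
            outcomes.append(("error", None))
        if run < max_runs:
            # Subtract the time the job took so runs stay evenly spaced.
            elapsed = time.monotonic() - started
            sleep(max(0.0, interval_seconds - elapsed))
    return outcomes
```

Calling `logging.basicConfig(level=logging.INFO)` once at startup is enough to see these messages on the console, and the recorded outcomes give monitoring code something to check.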

This methodology allows technical experts to harness scraper APIs effectively, facilitating a robust and scalable approach to data extraction.

What are the Benefits of Using A Scraper API?

Web scraper APIs have become indispensable tools for technical experts who require reliable data acquisition and integration into their systems. The benefits of using these APIs are multifaceted, offering substantial advantages across various dimensions.

Image Source: ScrapeHero

1. Efficiency and Automation

- Time-Saving: Reduces time spent on manual extraction and processing of data.

- Automation: Streamlines repetitive tasks, thus decreasing manual intervention.

- Scalability: Enables handling of large volumes of data effortlessly.

2. Accuracy and Reliability

- Precision: Ensures data is extracted accurately, minimizing errors.

- Consistency: Maintains uniformity in data collection processes.

- Error Handling: Incorporates mechanisms to manage and rectify errors in real time.

3. Versatility and Flexibility

- Multiple Formats: Supports a wide range of data formats such as JSON, XML, and CSV for seamless integration.

- Customizable: Offers tailored solutions to meet specific business needs.

- Integration: Compatible with diverse platforms, enhancing interoperability.

4. Cost-Effectiveness

- Reduce Costs: Lowers operational costs associated with data collection and processing.

- Resource Allocation: Enables better allocation of resources, focusing on analytical tasks rather than data gathering.

- Maintenance: Lowers maintenance costs by providing a robust infrastructure for data extraction.

5. Legal and Compliance

- Data Anonymity: Ensures data is collected anonymously, respecting privacy guidelines.

- Regulation Adherence: Helps in adhering to regulatory standards like GDPR.

- Secure Data Handling: Implements secure practices to protect data integrity and confidentiality.

6. Performance and Speed

- High Speed: Extracts data at high speeds without compromising quality.

- Concurrent Requests: Handles multiple requests concurrently, enhancing performance.

- Real-Time Data: Provides real-time data updates, crucial for dynamic business environments.

How Do Scraper APIs Drive Innovation Across Industries?

Scraper APIs have become indispensable tools, providing precise and timely data for multiple industries. Professionals across various sectors rely on these solutions for a variety of use cases.

1. Market Research and Competitive Analysis

Companies harness scraper APIs to collect extensive data on competitors and market trends:

- Price Monitoring: Retailers can track competitor prices to adjust their strategies in real time.

- Product Analysis: Marketers analyze competitor product listings to understand market positioning.

- Consumer Sentiment: Scraping review sites enables a deep dive into consumer opinions and trends.
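As a small illustration of price monitoring, the sketch below compares two scraped price snapshots and reports the products whose price moved by at least a chosen percentage. The SKU keys, the prices, and the 1% default threshold are invented for this example.

```python
def price_changes(previous, current, threshold_pct=1.0):
    """Compare two {sku: price} snapshots.

    Returns a sorted list of (sku, old_price, new_price, pct_change) for
    products whose price changed by at least `threshold_pct` percent.
    Products with no usable previous price are skipped.
    """
    changes = []
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        if old_price is None or old_price == 0:
            continue
        pct = (new_price - old_price) / old_price * 100
        if abs(pct) >= threshold_pct:
            changes.append((sku, old_price, new_price, round(pct, 2)))
    return sorted(changes)


# Hypothetical snapshots from two consecutive scraping runs.
yesterday = {"A": 10.0, "B": 20.0}
today = {"A": 9.0, "B": 20.1, "C": 5.0}

changes = price_changes(yesterday, today)
```

Here only product A is reported: B moved by less than the threshold, and C has no earlier price to compare against.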

2. Financial Services and Investment Analysis

Financial analysts and investment firms employ scraper APIs to manage vast datasets:

- Stock Market Prediction: Collecting historical prices and relevant news to predict stock trends.

- Economic Indicators: Extracting data from government websites and reports for informed decisions.

- Cryptocurrency Monitoring: Keeping tabs on cryptocurrency exchange rates and market activities.

3. E-Commerce and Retail Optimization

E-commerce businesses utilize scraper APIs to optimize their online presence:

- Inventory Management: Helping businesses monitor real-time stock levels and manage supply chains.

- Ad Campaign Optimization: Gathering data on ad performance across various channels.

- Customer Feedback: Aggregating customer reviews and feedback to improve products and services.

4. Real Estate and Property Management

Real estate professionals and property managers rely on scraper APIs for accurate real estate data:

- Listing Analysis: Extracting data from property listing sites to understand market dynamics.

- Price Estimation: Evaluating competitive pricing to set accurate rental or sale prices.

- Lead Generation: Collecting potential leads from multiple real estate portals.

5. Academic and Social Science Research

Researchers leverage scraper APIs for data collection and analysis:

- Sentiment Analysis: Analyzing social media and news to gauge public sentiment on various issues.

- Citation and Bibliometric Analysis: Gathering data from academic publications for comprehensive research analysis.

- Behavioral Studies: Studying human behavior through data aggregated from forums and social media platforms.

6. Travel and Hospitality Analytics

The travel and hospitality industry uses scraper APIs to gain insights and improve services:

- Price Comparison: Continuously monitoring competitors for dynamic pricing strategies.

- Customer Reviews: Collecting and analyzing reviews from travel and hospitality sites.

- Availability Tracking: Keeping track of room and flight availability to optimize booking processes.

These use cases show that scraper APIs are a versatile and essential technology across diverse sectors, giving professionals actionable insights and a competitive advantage.

Conclusion

Web scraper APIs have emerged as indispensable tools for technical experts seeking reliable data solutions. These APIs ensure robust, accurate, and timely extraction of data from diverse sources – an essential requirement for maintaining data-driven insights and strategies. By leveraging advanced algorithms and constant updates, web scraper APIs significantly reduce the complexity traditionally associated with data scraping, making them highly efficient and trusted by professionals.

Technical experts should consider partnering with PromptCloud to streamline their data scraping processes. By using PromptCloud’s state-of-the-art web scraping solutions, organizations can ensure they are always ahead in the data game, continuously feeding their data pipelines with the most relevant and timely information. For enhanced data strategies and seamless integration, explore what PromptCloud can offer and take a decisive step toward data excellence. PromptCloud’s dedicated team is ready to assist you in customizing a data extraction plan that fits your unique needs, ensuring that you harness the full power of reliable, scalable, and precise data solutions. Schedule a demo today!