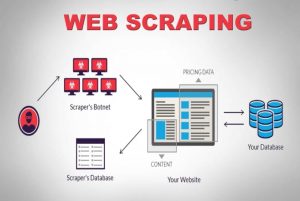

We are living in a data-centric world where data is the most powerful commodity of all. With the right data, we wield power. It is omnipresent: machine learning, data mining, market research, financial research, to name a handful. The big question remains — How do you retrieve all the data that is available for consumption? To get data of such scale and complexity, we crawl the source websites. Hence, web scraping services are not optional anymore. They are essential if you have any conceivable data-driven strategy.

An interesting fact about data crawling is that it says what it does, it is not an out-of-the-box solution. So, how do companies scrape the web as a means of obtaining data? Do they build an in-house team or do they outsource to dedicated web scraping services companies? Since we are talking about scraping vast amounts of data of varying complexity, the DIY scraping tools are out of the question.

Let us consider the very first option. We can always hire a team of experts in the field to train an in-house team that can understand the nuances of web crawling. The companies need not worry about the privacy of the data being scraped. While it sounds like the ideal option right off the bat, there are a few disadvantages as well.

The sheer cost of setting up and maintaining a dedicated internal team will be gigantic. This can be entirely bypassed by outsourcing it entirely to a professional data scraping service whose expertise lies in web scraping projects by and large. You save time, energy, most importantly money.

Still not convinced? Here are some other reasons to choose a dedicated web scraping service.

a). The Increasing Complexity Of Websites:

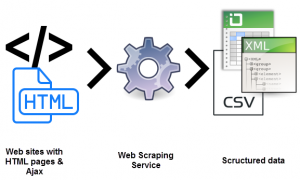

The law of demand plays here as well. The more the demand, the more is the complexity involved in crawling it. This stumps not only the DIY tool options available, but even the personnel that has been recently trained in scraping the web. On top of that, many sites are adopting AJAX-based infinite scrolling to improve the user experience. This makes scraping more complex.

Such dynamic coding practices would render most DIY tools and even some in-house teams inefficient and useless. What’s needed here is a fully customizable setup and a dedicated approach. A combination of manual and automated layers is used to figure out how the website receives AJAX calls to mimic them using the custom-built crawler. As the complexity of websites keeps increasing over time, the need for a customizable solution becomes glaringly obvious.

b). Scalability Of The Extraction Process:

Many entrepreneurs feel the need to reinvent the wheel. They have the urge to carry out a process in-house rather than outsourcing it. Of course, some processes are better done in-house and a great example of that is customer support. Since the complexities associated with large scale web data extraction are too niche to master by a company that doesn’t do it exclusively, it probably isn’t a great idea. The biggest of companies outsource services that fall in the technical niche bracket. (s)

Extracting millions of webpages simultaneously and processing all of them into structured machine-readable data is a real challenge. One of the USPs of a web scraper solution is scalability. With clusters of high-performance servers scattered across geographies, services like PromptCloud have built up a rock-solid infrastructure to extract large-scale web data.

c). Data Quality And Maintenance:

It is one thing to extract data. And another to convert unstructured data to machine-readable data. Scraping as a means of maintaining the quality of data is what services like PromptCloud advocate.

Crawling an overwhelming amount of raw, unstructured data won’t make sense if it is not readable. At the same time, we cannot set up a fully functioning web crawling setup and relax. The worldwide web is highly dynamic.

Maintaining data quality needs consistent effort and close monitoring using both manual and automated layers. Websites change their structures quite frequently which would render the crawler faulty or bring it to a halt, both of which will affect the output data. Data quality assurance and timely maintenance are integral to running a web crawling setup. Look for services that take end-to-end ownership of these aspects.

d). Hassle-Free Data Extraction With The Help Of Web Scraping:

Businesses need to channelize the entire force of energy on what their core offering is. Hence, the need to hire a web scraping service that has channelized its entire force of energy on exactly what you seek.

The setup, constant maintenance, and all the other complications that come with web data extraction can easily hog your internal resources, taking a toll on your business. The pitfalls are far too many.

e). Crossing The Technical Barrier:

Web scraping requires a team of developers to set up and deploy the crawlers on optimized servers for extraction. It is technically demanding. Why train when you can hire? At one-tenth the cost. With years of expertise in the web data extraction space, dedicated services can take up website scraping projects of any complexity and scale. Here is an article that showcases the template for a web scraping service that can be used in any project.

Conclusion:

It is inevitable for companies to explore ways to efficiently acquire immensely cast and powerful data. There’s data, there’s information, and then there’s the uno-numero, knowledge. Knowledge is where we make sense out of and organize information that we have pieced together from what was just random, unstructured, and otherwise (and seemingly) useless data. For that and everything else, there’s Promptcloud.