Bhagyashree

- July 6, 2023

- Blog, Web Scraping

In the vast world of online treasure hunting, web scraping has become the ultimate tool for extracting valuable gems of information. Whether you’re a lone adventurer or a data-hungry enterprise, web scraping is the trusty pickaxe in your digital toolkit.

However, not all web scraping techniques are created equal. It’s like choosing the perfect fishing rod for the task at hand. After all, you wouldn’t bring a tiny hook to catch a colossal creature like Moby Dick, right? In this blog, we’ll unveil the secrets behind manual, automated, and advanced web scraping techniques.

Just imagine assembling a team of superheroes, each with their own special powers and weaknesses. Likewise, each scraping approach has its strengths and limitations.

But let’s not forget the importance of responsible actions in web scraping. Just as a knight would never break their oath, it’s crucial to scrape ethically and respect the terms of service of the websites you wish to scrape.

Automated Web Scraping Techniques

Automated web scraping refers to the process of using software or tools to automatically extract data from websites. This automated approach eliminates the need for manual copying and pasting of data, allowing for efficient and large-scale data collection from various online sources.

1. Web scraping Libraries

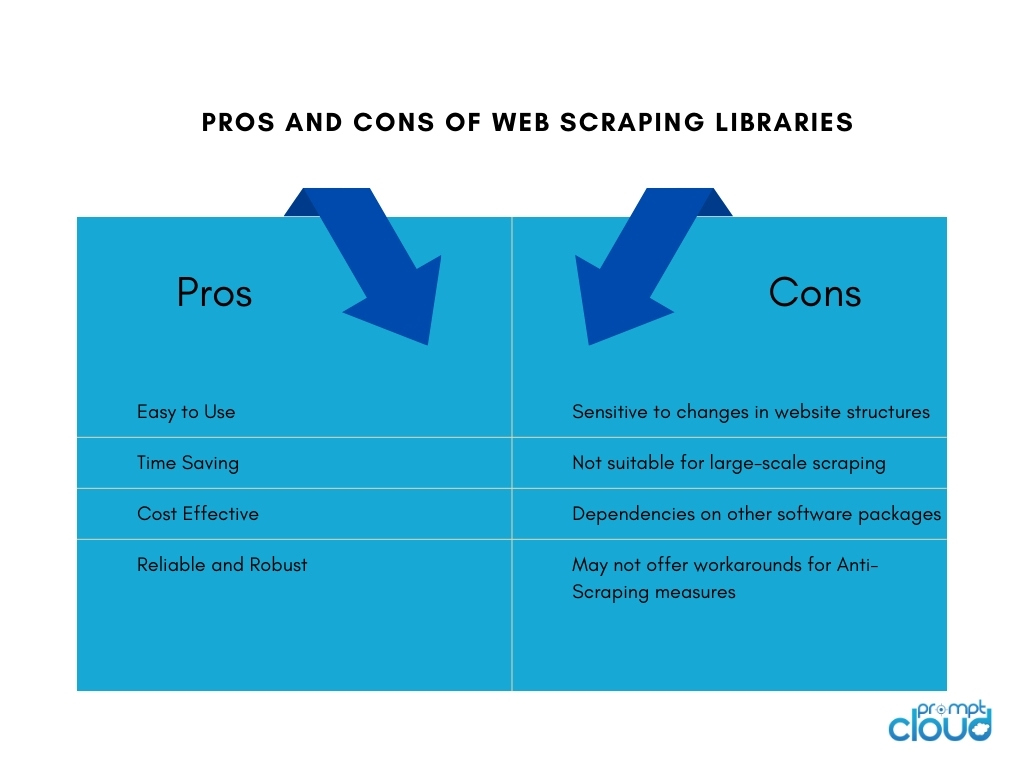

Web scraping libraries are software tools or frameworks that provide pre-built functions and utilities to facilitate web scraping tasks. They offer a simplified and efficient way to perform web scraping without the need to write everything from scratch. They save time, increase productivity, and enable more efficient data gathering from diverse online sources.

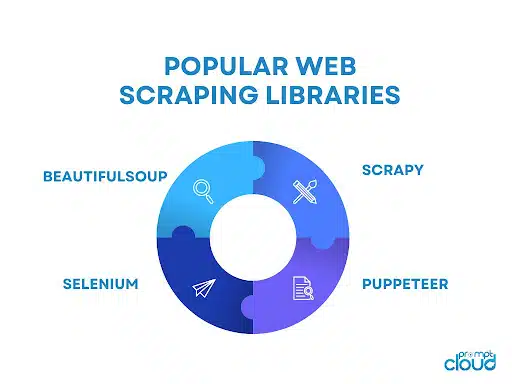

Some of the popular web scraping libraries include:

- BeautifulSoup: A widely-used Python library for web scraping that provides an intuitive API to parse HTML and XML documents, allowing users to navigate and extract data effortlessly.

- Scrapy: A powerful Python framework for web scraping that provides a comprehensive set of tools for building scalable and efficient web crawlers, with features such as automatic request throttling, item pipelines, and built-in support for handling pagination.

- Selenium: A versatile library that allows automated browser interactions for web scraping, particularly useful when dealing with dynamic content and JavaScript-heavy websites.

- Puppeteer: A Node.js library that provides a high-level API to control a headless Chrome or Chromium browser, enabling web scraping and interaction with web pages using JavaScript.

2. Web Scraping Tools and Services

Web scraping Tools

Web scraping tools are software applications or platforms designed specifically for automating and simplifying the process of web scraping. These tools often provide a user-friendly interface that allows users to specify the data they want to extract from websites without requiring extensive programming knowledge.

They typically offer features like point-and-click selection of data elements, scheduling and monitoring capabilities, and data export options. Web scraping tools can be beneficial for individuals or businesses that require data extraction without the need for extensive coding or development resources.

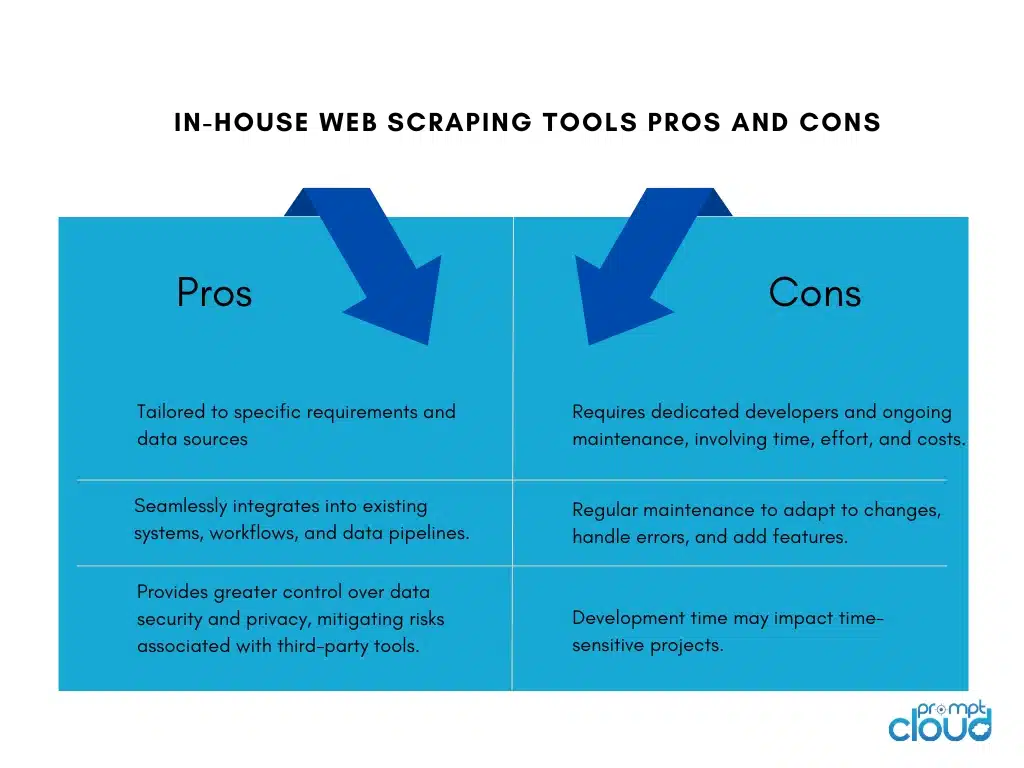

Building In-House Web Scraping Tools

Building an in-house web scraping tool for a company has its pros and cons:

Web scraping services

Web scraping service providers offer specialized services for data extraction from websites. These providers typically have infrastructure, tools, and expertise dedicated to handling web scraping tasks on behalf of clients.

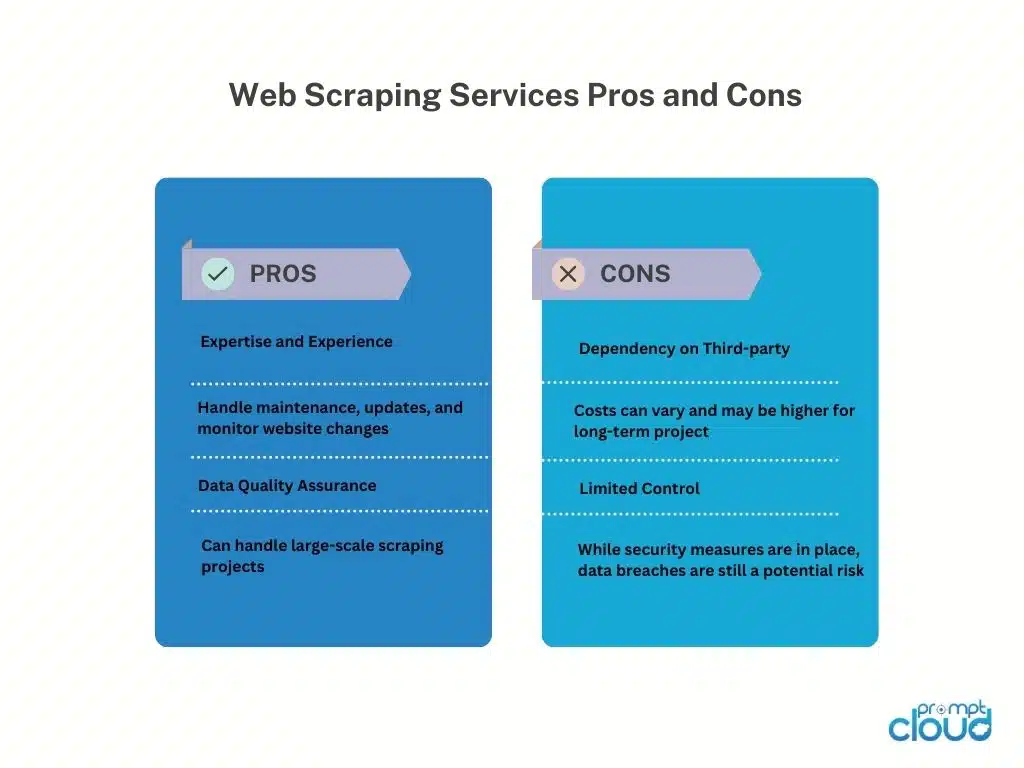

Here are the pros and cons of using web scraping service providers:

Web Scraping APIs

Web scraping APIs provide a programmatic interface that allows developers to access and retrieve data from websites using standardized methods. These APIs offer a more structured and controlled approach to web scraping compared to traditional scraping techniques. Developers can send requests to the API, specifying the data they need and receiving the scraped data in a structured format, such as JSON or XML.

Pros

Web scraping APIs simplify the scraping process, allowing developers to focus on integrating the API and handling the data. They offer reliability and performance due to provider maintenance, and may include features like authentication and rate limiting. APIs also aid compliance with terms of service and legal requirements.

Cons

Using web scraping APIs has limitations. Data availability and capabilities depend on the API provider, with possible restrictions on supported websites and scraping limits. External API reliance introduces dependency on provider availability and performance, impacting data retrieval. Additionally, there may be costs associated with high-volume or commercial usage.

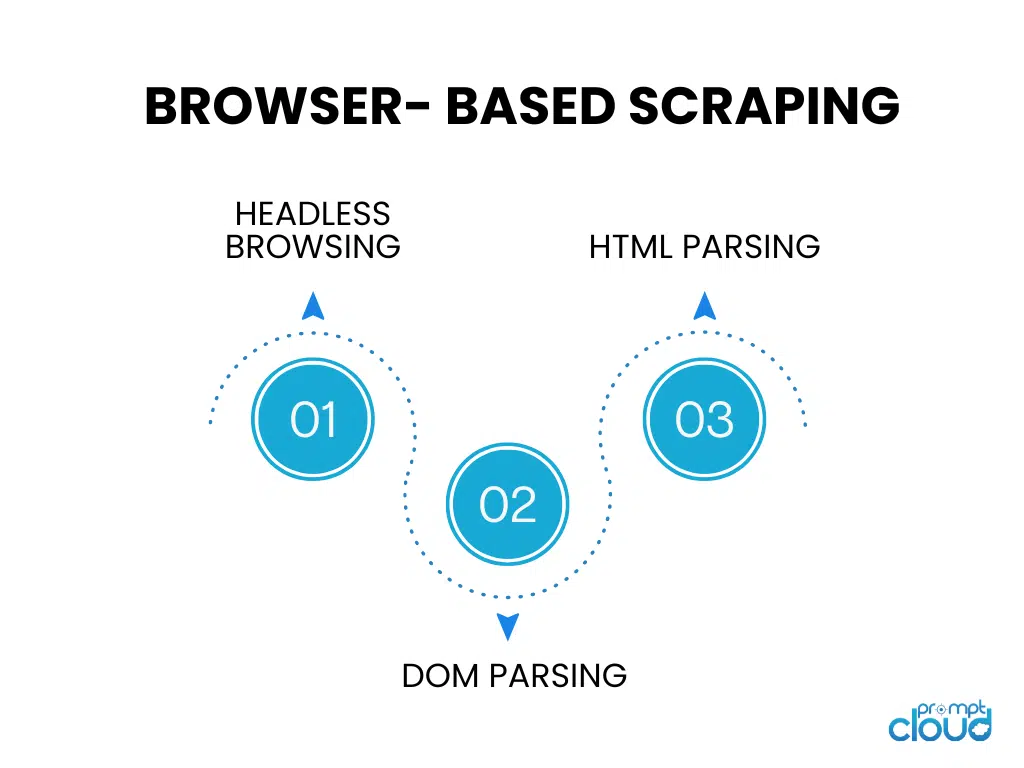

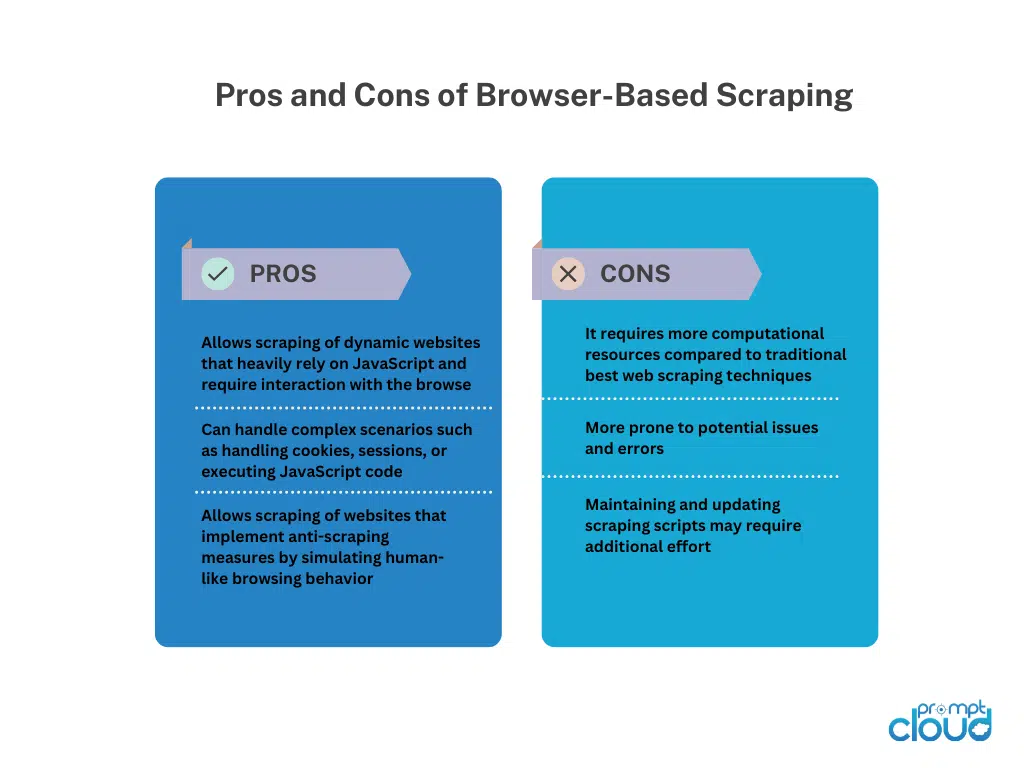

Browser- Based Scraping

Headless Browsing

Headless browsing runs a web browser without a graphical user interface, allowing automated browsing and interaction with websites using code. It’s ideal for scraping dynamic websites that heavily rely on client-side rendering.

DOM Parsing

DOM parsing involves manipulating the HTML structure of a web page by accessing its Document Object Model. This enables targeted extraction of elements, attributes, or text programmatically.

HTML Parsing

HTML parsing analyzes the HTML source code of a web page to extract desired data. It uses libraries or parsers to interpret the HTML structure and identify specific tags, attributes, or patterns for data extraction. HTML parsing is commonly used for scraping static web pages without JavaScript execution.

Manual Web Scraping Techniques

Manual Web Scraping Techniques

Manual web scraping refers to the process of extracting data from websites manually, without the use of automated tools or scripts. It involves human intervention to navigate websites, search for relevant information, and extract data using various techniques.

Techniques for Manual Web Scraping:

Screen Capturing: This technique involves capturing screenshots or videos of web pages to extract visual data like images, charts, or tables that are difficult to parse programmatically. Manual extraction from the captured media enables data retrieval.

Data Entry: In data entry, required information is manually copied from web pages and entered into a desired format like spreadsheets or databases. It involves navigating web pages, selecting data, and entering it into the target destination. Data entry is suitable for structured data that can be easily copied and pasted.

Manual web scraping offers flexibility when dealing with complex websites, JavaScript interactivity, or anti-scraping measures. However, it is time-consuming, less suitable for large-scale tasks, and prone to human errors. It requires human effort, attention to detail, and careful execution.

Hybrid Web Scraping Techniques

Hybrid web scraping combines automated tools and manual intervention for efficient and accurate data extraction from websites. Automated tools handle repetitive tasks like navigation and structured data extraction, while manual techniques, such as screen capturing or data entry, address complex scenarios and visual/non-structured data.

Hybrid Web Scraping offers scalability and speed of automation, along with the flexibility of human judgment. It suits websites with diverse structures, dynamic content, or anti-scraping measures. The choice depends on the website’s complexity, data type, and available resources, providing a balanced approach for comprehensive data extraction.

Sharing is caring!

Manual Web Scraping Techniques

Manual Web Scraping Techniques