The uses of web scraping are limited only by our imagination. Scrapers crawl and extract large amounts of data from almost any website for a wide range of purposes, such as price monitoring, financial data collection, and news aggregation, to name a few. Scraping and crawling empower businesses to build new products and innovate faster.

Price comparison sites such as Kayak, SEO products such as Botify, and job aggregators that pull listings from multiple sources are all built on scraping at their core. By making data easy to access, web scrapers enhance your value proposition. Before we explain why web scraping is such a game-changer and which industries need it most, let us walk you through what web scraping actually is.

What is Web Scraping?

Web scraping (and web crawling) is the automated discovery and retrieval of data from websites. The need for data aggregation has grown enormously, and the analytics industry's demand for quality data still outstrips supply. Crawlers, often called spiders, follow links from page to page, while scrapers extract the information found on those pages. No matter what industry you are in, data scraping can likely solve at least one of your problems.

Applications of Website Scraping Services

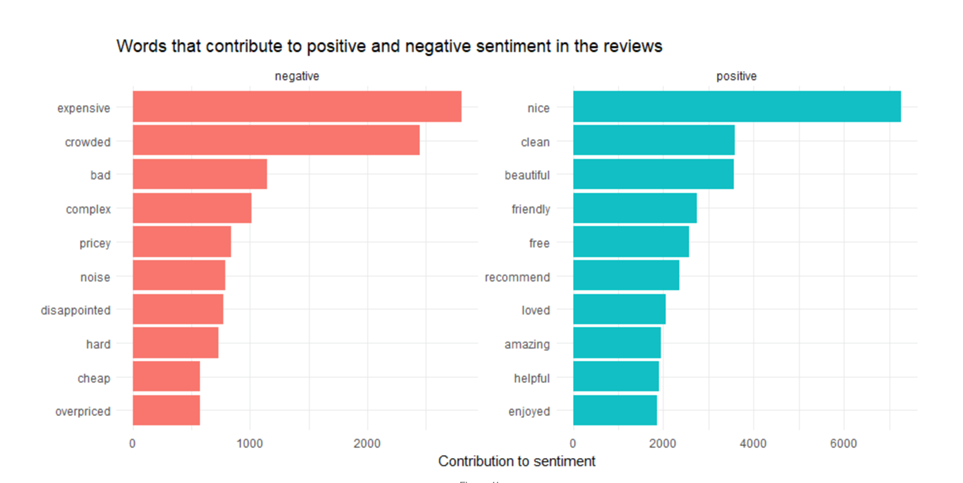

A). Sentiment Analysis

Taken together, the social media posts published over a given period reveal a larger picture and help analysts understand consumer sentiment and behaviour. The built-in APIs of social media platforms may be inadequate for this. Social media crawling shows where the conversation is heading and which micro-trends are drawing the most attention, for example by analyzing hashtag usage.
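As a rough illustration of the hashtag analysis mentioned above, the Python sketch below counts hashtags across a batch of posts. It assumes the posts have already been scraped into plain strings; `top_hashtags` and the sample posts are hypothetical, and the sketch measures only volume, not sentiment.

```python
import re
from collections import Counter

# Matches "#" followed by word characters; the tag text is captured without "#".
HASHTAG = re.compile(r"#(\w+)")

def top_hashtags(posts, n=3):
    """Return the n most common hashtags across posts, ignoring case."""
    counts = Counter(tag.lower() for post in posts for tag in HASHTAG.findall(post))
    return counts.most_common(n)

# Hypothetical sample posts, standing in for scraped social media text.
posts = [
    "Loving the new phone #TechNews #unboxing",
    "Battery life is disappointing #technews",
    "#Unboxing day! #TechNews",
]
print(top_hashtags(posts, 2))  # [('technews', 3), ('unboxing', 2)]
```

Lower-casing the tags means "#TechNews" and "#technews" are counted as one trend, which is usually what an analyst wants.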

B). eCommerce Pricing and Price Monitoring

eCommerce data scraping has taken price wars to a new level. In an oligopolistic, price-sensitive market, it is essential to track how a product is priced across every channel. As a seller, you can also see which platform gives you the best margin on your products.
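A minimal sketch of the margin comparison, assuming you have already scraped the product's price on each platform and know each platform's commission. The `Listing` class, platform names, prices, and fee rates are all hypothetical; real fee structures are usually more complex (shipping, fixed fees, tiers).

```python
from dataclasses import dataclass

@dataclass
class Listing:
    platform: str
    price: float     # scraped selling price on this platform
    fee_rate: float  # hypothetical commission the platform takes, e.g. 0.15 = 15%

def margin(listing: Listing, unit_cost: float) -> float:
    """Seller's margin per unit after the platform's commission."""
    return listing.price * (1 - listing.fee_rate) - unit_cost

def best_platform(listings, unit_cost):
    """Platform whose price and fee combination leaves the highest margin."""
    return max(listings, key=lambda item: margin(item, unit_cost))

# Hypothetical scraped prices and fee rates for the same product.
listings = [
    Listing("ShopA", 23.00, 0.15),
    Listing("ShopB", 24.00, 0.08),
    Listing("ShopC", 26.50, 0.20),
]
cheapest = min(listings, key=lambda item: item.price)
best = best_platform(listings, unit_cost=15.00)
print(cheapest.platform, best.platform, round(margin(best, 15.00), 2))  # ShopA ShopB 7.08
```

In this made-up data, the cheapest listing is not the one with the best margin, which is why tracking both price and fees matters.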

C). Job Aggregators

Job aggregators use scraping services to crawl career pages across the web and consolidate the listings in one place. With their advanced search functionality, they effectively work as search engines for job ads. Scraping runs regularly so that only current, relevant openings are shown to job seekers.
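The consolidation step can be sketched as follows, assuming each site's ads have already been scraped into dictionaries. The field names, the 30-day freshness window, and the matching rule (normalized title, company, and location) are illustrative choices, not a standard; production systems may need fuzzier matching.

```python
from datetime import date, timedelta

def consolidate(sources, today, max_age_days=30):
    """Merge job ads scraped from several sites, dropping duplicates and stale ads.

    Each ad is a dict with "title", "company", "location" and "posted" (a date).
    Ads count as duplicates when their normalized title, company and location
    match; the most recently posted copy is kept.
    """
    cutoff = today - timedelta(days=max_age_days)
    merged = {}
    for ads in sources:
        for ad in ads:
            if ad["posted"] < cutoff:
                continue  # too old to show as a live opening
            key = tuple(ad[f].strip().lower() for f in ("title", "company", "location"))
            if key not in merged or ad["posted"] > merged[key]["posted"]:
                merged[key] = ad
    # Newest openings first.
    return sorted(merged.values(), key=lambda ad: ad["posted"], reverse=True)

# Hypothetical listings from two job sites.
site_a = [
    {"title": "Data Engineer", "company": "Acme", "location": "Berlin", "posted": date(2024, 5, 2)},
    {"title": "QA Analyst", "company": "Initech", "location": "Remote", "posted": date(2024, 1, 10)},
]
site_b = [
    {"title": "data engineer ", "company": "ACME", "location": "Berlin", "posted": date(2024, 5, 5)},
]
live = consolidate([site_a, site_b], today=date(2024, 5, 10))
print(len(live), live[0]["posted"])  # 1 2024-05-05
```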

D). Machine Learning

Artificial Intelligence and Machine Learning systems need continuous feeds of quality data to learn tasks that humans perform. They must regularly be fed the latest information so they can keep adapting. Web crawling services scrape large volumes of data points, text, and images to support this. ML powers technological advances such as driverless cars, smart glasses, and image and speech recognition. However, to scale, these models need regular data updates to improve their accuracy and reliability.

E). Brand Monitoring

Most e-commerce players (here’s looking at you, Amazon) rely heavily on reviews and ratings, because consumers inherently trust other consumers more than brands. How do you, as a brand, cash in on this to strengthen your image and digital publicity?

You can scrape product reviews and ratings from every website that lists your products and then aggregate them. You could kick it up a notch by monitoring social media platforms and combining this with sentiment analysis to respond quickly to critics or to reward and incentivize users who love you. The list of industries that need this is long: tourism, hospitality, e-commerce, online aggregators, and app developers, among others.
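A simple way to combine ratings from several sites is a review-count-weighted average, so a site with a handful of reviews does not outweigh one with hundreds. The sketch assumes each site's average rating and review count have already been scraped; the site names and numbers are hypothetical.

```python
def aggregate_rating(per_site):
    """Combine per-site average ratings into one overall figure.

    per_site maps a site name to (average_rating, review_count). Each site's
    average is weighted by its number of reviews.
    Returns (overall_average, total_reviews).
    """
    total_reviews = sum(count for _, count in per_site.values())
    if total_reviews == 0:
        return 0.0, 0  # nothing scraped yet
    weighted = sum(avg * count for avg, count in per_site.values())
    return weighted / total_reviews, total_reviews

# Hypothetical scraped figures for one product.
ratings = {"site-a": (4.5, 300), "site-b": (3.9, 100), "site-c": (4.8, 100)}
avg, n = aggregate_rating(ratings)
print(round(avg, 2), n)  # 4.44 500
```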

F). SEO

If it is not on the first page of Google, it doesn’t exist. Hence, SEO. If you work on SEO, you probably use tools such as SEMrush or Ubersuggest. Fun fact: these tools would not exist without web crawling and scraping.

You can use these same tools to identify your SEO competitors for a particular search term. You can then inspect the title tags and keywords those competitors target to work out what is driving traffic to their websites and generating sales.
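As a sketch of the title-tag inspection described above, Python's standard-library `html.parser` can pull the `<title>` text and the `description` and `keywords` meta tags from a competitor page you have already downloaded. The class name, function name, and sample page are hypothetical, and many pages no longer declare a `keywords` meta tag at all.

```python
from html.parser import HTMLParser

class SEOTagParser(HTMLParser):
    """Collects the <title> text and the description/keywords <meta> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # tag and attribute names arrive lower-cased
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name") in ("description", "keywords"):
            self.meta[attrs["name"]] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def seo_tags(html):
    """Return (title, {"description": ..., "keywords": ...}) for a page."""
    parser = SEOTagParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.meta

# Hypothetical competitor page.
page = """<html><head><title>Cheap Flights to Paris | ExampleTravel</title>
<meta name="description" content="Compare fares to Paris.">
<meta name="keywords" content="cheap flights, paris, airfare">
</head><body><h1>Flights</h1></body></html>"""
title, meta = seo_tags(page)
print(title)             # Cheap Flights to Paris | ExampleTravel
print(meta["keywords"])  # cheap flights, paris, airfare
```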

How Do We Set Up a Web Mining Project?

A). Identify the goal

This is a no-brainer: figure out what it is that you need. How? Answer the following questions.

a). What kind of information do you seek?

b). What do you expect as an outcome?

c). Where is the data you seek usually published?

d). Who is this data for?

e). In what format should this data be presented to its end-users?

f). What is the typical shelf life of the data? How often do you need to perform this activity?
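One way to keep these answers actionable is to record them as a small project brief in code. The sketch below is hypothetical (the `ScrapeGoal` name, its fields, and the sample values are illustrative) and shows how the answer to question f) could drive a re-scrape schedule, under the simple assumption that data should be refreshed once per shelf life.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class ScrapeGoal:
    """Answers to questions a) to f), kept as a project brief."""
    information: str       # a) what information you seek
    outcome: str           # b) expected outcome
    sources: list          # c) where the data is usually published
    audience: str          # d) who the data is for
    output_format: str     # e) e.g. "csv" or "json"
    shelf_life: timedelta  # f) how long the data stays valid

    def next_runs(self, start: datetime, count: int) -> list:
        """Schedule re-scrapes one shelf life apart, so data is refreshed as it expires."""
        return [start + i * self.shelf_life for i in range(1, count + 1)]

goal = ScrapeGoal(
    information="open vacancies",
    outcome="a daily feed of new job ads",
    sources=["https://example.com/careers"],  # hypothetical source
    audience="recruitment analysts",
    output_format="csv",
    shelf_life=timedelta(days=1),
)
for run in goal.next_runs(datetime(2024, 1, 1), 3):
    print(run.date())
```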

B). Web crawling service analysis

Since data scraping is highly automated, the choice of web scraping service is paramount. Keep the following in mind before selecting one:

a). Project dimensions

b). Supported OS

c). Does it support your enterprise requirements?

d). Scripting language support

e). Built-in data storage support
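The checklist above can be turned into a simple filter over candidate tools. This is a sketch only: `ScrapingService`, `shortlist`, and the ToolA/ToolB/ToolC entries are hypothetical, and in practice criteria such as enterprise readiness usually need human judgment rather than a single boolean.

```python
from dataclasses import dataclass, field

@dataclass
class ScrapingService:
    name: str
    max_pages_per_day: int                               # a) project dimensions it can handle
    operating_systems: set = field(default_factory=set)  # b) supported OS
    enterprise_ready: bool = False                       # c) e.g. SLAs, user management
    languages: set = field(default_factory=set)          # d) scripting languages
    built_in_storage: bool = False                       # e) built-in data storage

def shortlist(services, pages_per_day, os_name, language,
              need_enterprise=False, need_storage=False):
    """Return names of the services that meet every stated requirement."""
    return [
        s.name for s in services
        if s.max_pages_per_day >= pages_per_day
        and os_name in s.operating_systems
        and language in s.languages
        and (s.enterprise_ready or not need_enterprise)
        and (s.built_in_storage or not need_storage)
    ]

# Hypothetical candidates.
services = [
    ScrapingService("ToolA", 10_000, {"linux", "windows"}, languages={"python"}, built_in_storage=True),
    ScrapingService("ToolB", 1_000_000, {"linux"}, enterprise_ready=True,
                    languages={"python", "javascript"}, built_in_storage=True),
    ScrapingService("ToolC", 500_000, {"windows"}, enterprise_ready=True, languages={"javascript"}),
]
print(shortlist(services, pages_per_day=50_000, os_name="linux",
                language="python", need_enterprise=True))  # ['ToolB']
```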

C). Designing the Scraping Schema

Suppose our scraping job is to collect data about vacancies that recruiters post on job sites. The data source determines the schema attributes, which might look like this:

a). Title

b). ID number

c). Description

d). URL the candidate uses to apply for the position

e). Location

f). Remuneration

g). Job type

h). Experience required
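The schema above maps naturally onto a Python dataclass, which gives each scraped record a fixed shape and makes export to JSON straightforward. Field names and the sample record are hypothetical; keeping remuneration as free text is an assumption, since sites present pay in many formats.

```python
import json
from dataclasses import dataclass, asdict
from typing import Optional

@dataclass
class JobPosting:
    title: str
    id_number: str
    description: str
    apply_url: str                      # URL the candidate uses to apply
    location: str
    remuneration: Optional[str]         # free text; None when the ad omits pay
    job_type: str                       # e.g. "full-time", "contract"
    experience_required: Optional[str]

# Hypothetical scraped record.
posting = JobPosting(
    title="Data Engineer",
    id_number="JD-1042",
    description="Build ETL pipelines.",
    apply_url="https://example.com/jobs/1042/apply",
    location="Berlin",
    remuneration=None,
    job_type="full-time",
    experience_required="3+ years",
)
print(json.dumps(asdict(posting), indent=2))
```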

D). Feasibility Check and Pilot Run

A pilot run is always a good idea before taking on a full-blown scraping project. How do you do that?

a). Check the scraping feasibility of the source websites

b). Scrape the HTML

c). Retrieve the desired item

d). Identify the URLs leading to subsequent pages
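The four pilot steps can be sketched with Python's standard library alone. This is an assumption-laden illustration, not a production scraper: it treats a robots.txt check as the feasibility test (a real check should also cover the site's terms of use), works on HTML you pass in rather than fetching it, and assumes hypothetical markup where job titles sit in `<h2 class="job-title">` and pagination uses `<a rel="next">`. `pilot`, `PilotParser`, and the `pilot-scraper` user agent are illustrative names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

USER_AGENT = "pilot-scraper"  # hypothetical crawler name

def allowed(robots_txt: str, url: str) -> bool:
    """Step a): feasibility check against the site's robots.txt rules."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp.can_fetch(USER_AGENT, url)

class PilotParser(HTMLParser):
    """Steps c) and d): pull job titles and the next-page link out of raw HTML.

    Assumes hypothetical markup: titles in <h2 class="job-title">, pagination
    in <a rel="next" href="...">. A real site needs its own selectors.
    """

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.titles = []
        self.next_url = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "h2" and "job-title" in classes:
            self._in_title = True
            self.titles.append("")
        elif tag == "a" and attrs.get("rel") == "next" and attrs.get("href"):
            # Resolve relative links against the page URL.
            self.next_url = urljoin(self.base_url, attrs["href"])

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data

def pilot(url, html, robots_txt):
    """Run the pilot on one page; returns (titles, next_url) or None if disallowed."""
    if not allowed(robots_txt, url):
        return None  # not feasible: robots.txt disallows this path
    parser = PilotParser(url)
    parser.feed(html)  # step b): html is the page's raw HTML
    parser.close()
    return [t.strip() for t in parser.titles], parser.next_url

# Hypothetical inputs.
robots = "User-agent: *\nDisallow: /admin/\n"
page = ('<h2 class="job-title">Data Engineer</h2>'
        '<h2 class="job-title"> QA Analyst </h2>'
        '<a rel="next" href="/jobs?page=2">Next</a>')
print(pilot("https://example.com/jobs?page=1", page, robots))
# (['Data Engineer', 'QA Analyst'], 'https://example.com/jobs?page=2')
```

In a live pilot, the `html` and `robots_txt` arguments would come from HTTP requests (for example with `urllib.request.urlopen`), and the selectors would be adjusted to the real page structure.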

If you are happy with the results, you can move ahead with a larger scrape. At this stage, you may need to capture the corrected XPaths from the pilot and update the scraper's hard-coded values with them. An external library may also be needed to supply inputs for the sources.

Now that we have walked you through the broad strokes of web crawling and scraping, you may think it is a gargantuan task that needs technical supervision. Well, yes and no. You can choose to do it in-house by upskilling your staff, or by using one of the many DIY tools available. But websites are becoming more complex by the day, so outsourcing web scraping to a premium service provider is probably the best way forward if you need to scrape data at scale.